JEDEC finalizes DDR5 standard: Terabyte-sized DDR5-6400 modules incoming

DDR5 quadruples RAM capacity and doubles speed

JEDEC has published the final JESD79-5 DDR5 memory standard, which addresses the DRAM requirements of client and server systems for the coming years. The new type of memory significantly increases performance, improves efficiency, quadruples maximum memory capacity per device, lowers power consumption, and improves reliability.

“With several new performance, reliability and power saving modes implemented in its design, DDR5 is ready to support and enable next-generation technologies,” said Desi Rhoden, Chairman JC-42 Memory Committee and Executive VP Montage Technology.

“The tremendous dedication and effort on the part of more than 150 JEDEC member companies worldwide has resulted in a standard that addresses all aspects of the industry, including system requirements, manufacturing processes, circuit design, and simulation tools and test, greatly enhancing developers’ abilities to innovate and advance a wide range of technological applications.”

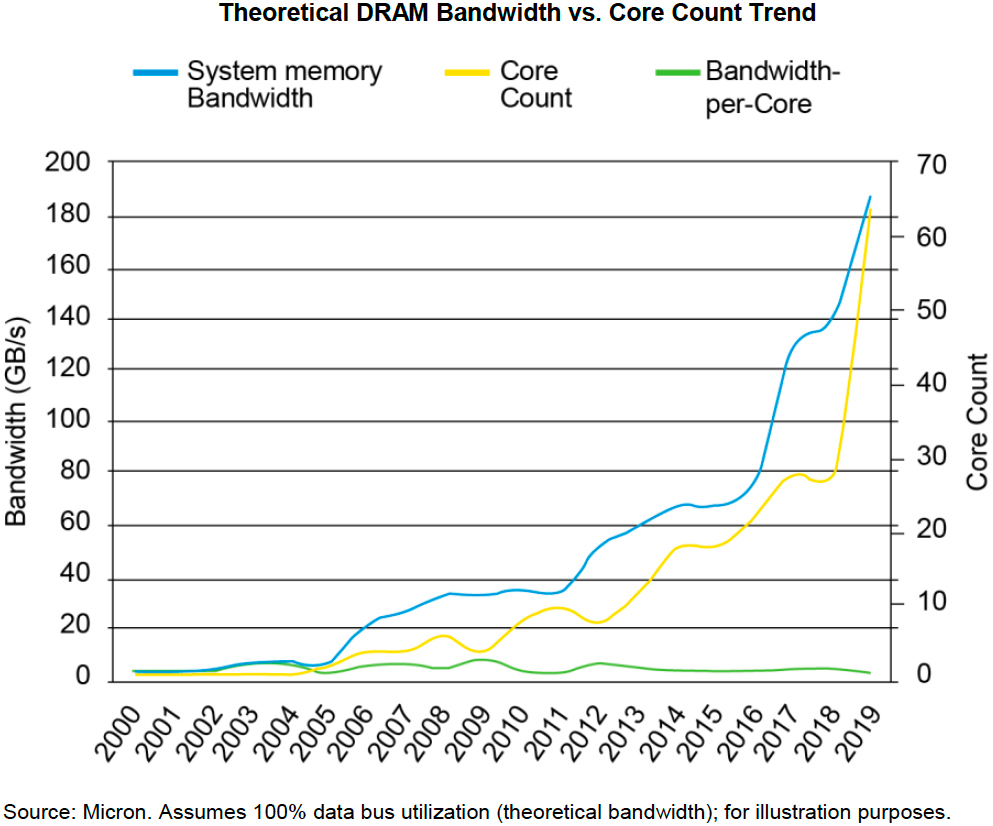

The increasing number of CPU cores calls for a higher system memory bandwidth (to maintain the per-core bandwidth at least at the current level) as well as a higher memory capacity.

Modern memory controllers can support up to two DRAM modules per channel because of signal integrity limitations (without using LRDIMMs or other buffered solutions), so DIMM capacity is what defines per-box memory capacity today. Meanwhile, as servers can now employ from 16 to 48 memory modules, DRAM becomes a tangible contributor to the overall power consumption, so lowering the latter is a must for new memory standards.

“High-performance computing requires memory that can keep pace with the ever-increasing demands of today’s processors,” said Joe Macri, AMD Compute and Graphics CTO. “With the publication of the DDR5 standard, AMD can better design its products to meet the future demands of our customers and end-users.”

Quadrupling capacity

The DDR4 specification defines memory devices of up to 16 Gb, which limits the capacity of server-grade octal-ranked RDIMMs that use 4-Hi 16 Gb stacks to 256 GB. Due to prohibitively high cost and predictably low performance, no module maker produces 512 GB DDR4 RDIMMs today, but 256 GB modules have been available for a while. The new DDR5 standard adds support for 24 Gb, 32 Gb, and 64 Gb DRAMs, which in the distant future will enable manufacturers to build enormous server memory modules (think 2 TB) using 16-Hi 32 Gb stacks or 8-Hi 64 Gb stacks.
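The capacity figures above follow from simple arithmetic. The sketch below assumes a module carries 32 data packages (ECC packages excluded); that package count is an illustrative assumption, not a figure stated in the standard announcement, and `module_capacity_gb` is a hypothetical helper name.

```python
GBIT_PER_GBYTE = 8  # 8 gigabits per gigabyte

def module_capacity_gb(die_gbit, stack_height, data_packages=32):
    """Usable capacity in GB of a module built from stacked DRAM packages.

    data_packages=32 is an assumption for illustration (ECC packages excluded).
    """
    package_gbit = die_gbit * stack_height  # capacity of one stacked package
    return package_gbit * data_packages // GBIT_PER_GBYTE

ddr4_max = module_capacity_gb(die_gbit=16, stack_height=4)    # 4-Hi 16 Gb stacks
ddr5_16hi = module_capacity_gb(die_gbit=32, stack_height=16)  # 16-Hi 32 Gb stacks
ddr5_8hi = module_capacity_gb(die_gbit=64, stack_height=8)    # 8-Hi 64 Gb stacks

print(ddr4_max, ddr5_16hi, ddr5_8hi)  # 256 2048 2048
```

Under that assumption, both DDR5 stack configurations land on the same 2 TB (2048 GB) module, eight times the 256 GB DDR4 ceiling.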

Client PCs will also take advantage of the new standard as sleeker notebooks will be able to accommodate more DRAM because of higher per-device capacity (assuming that high-capacity DRAMs are supported by client CPUs). On the module side of things we are going to see 48 GB and 64 GB single-sided/single-rank DIMMs for desktops and laptops in the coming years.

Doubling data rates

“DDR5, developed with significant effort across the industry, marks a great leap forward in memory capability, for the first time delivering a 50% bandwidth jump at the onset of a new technology to meet the demands of AI and high-performance compute,” said Carolyn Duran, VP - Data Platforms Group, GM – Memory and IO Technologies at Intel.

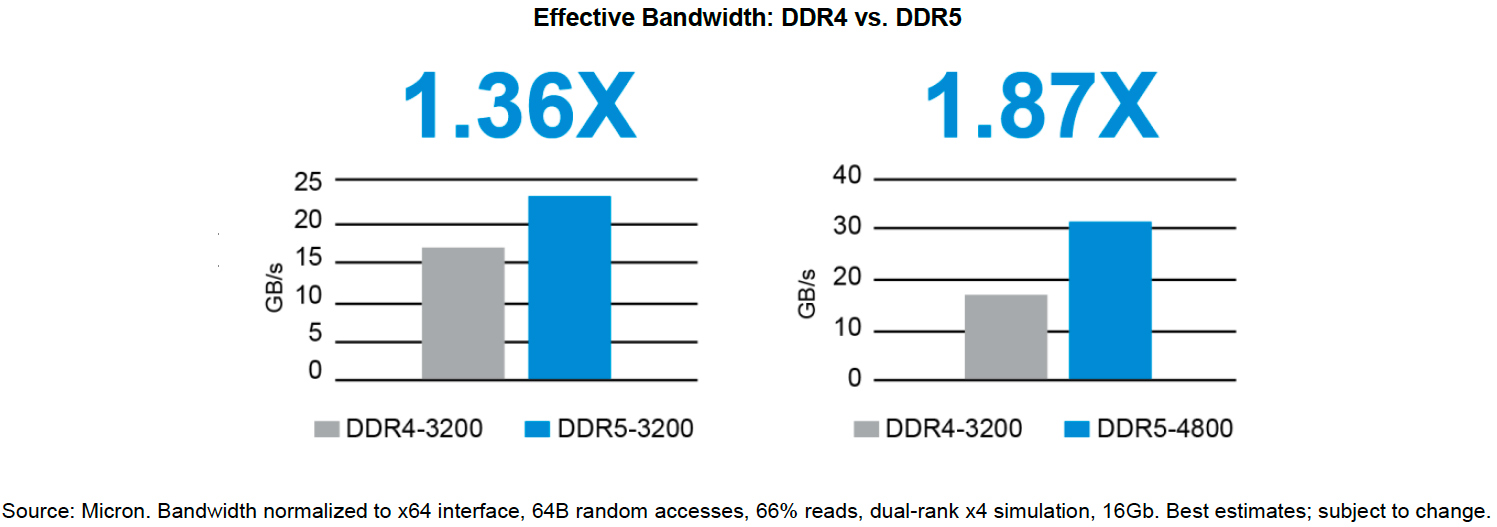

In addition to quadrupling maximum per-device capacity, DDR5 brings substantial performance improvements. Data transfer speeds supported by the new standard start at 4.8 Gbps per pin and will officially scale all the way to 6.4 Gbps. Meanwhile, SK Hynix has already indicated plans to eventually increase DDR5’s I/O speeds to 8.4 Gbps per pin, which shows the great potential the new standard holds for overclockers.
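To put the per-pin rates in module terms, here is a minimal sketch that converts them into peak theoretical bandwidth. It assumes 64 data bits per module (ECC bits excluded) and, for comparison, a DDR4 top speed of 3.2 Gbps per pin; the comparison figure and the helper name `peak_bandwidth_gbs` are assumptions for illustration, and real-world throughput will be lower than these peaks.

```python
def peak_bandwidth_gbs(gbps_per_pin, data_bits=64):
    """Peak theoretical module bandwidth in GB/s (data bits only, no ECC)."""
    return gbps_per_pin * data_bits / 8

ddr4_top = peak_bandwidth_gbs(3.2)   # assumed DDR4 reference point
ddr5_base = peak_bandwidth_gbs(4.8)  # DDR5 launch speed
ddr5_top = peak_bandwidth_gbs(6.4)   # DDR5 official ceiling

for name, value in [("DDR4-3200", ddr4_top),
                    ("DDR5-4800", ddr5_base),
                    ("DDR5-6400", ddr5_top)]:
    print(f"{name}: {value:.1f} GB/s")

# Ratio of the DDR5 launch speed to the assumed DDR4 top speed
launch_gain = ddr5_base / ddr4_top
print(f"Launch gain over DDR4-3200: {launch_gain:.2f}x")
```

With these inputs the launch speed works out to 38.4 GB/s and the ceiling to 51.2 GB/s per module, and the launch-speed ratio matches the 50% jump mentioned in the Intel quote.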

To support such extreme data transfer rates and enable longer-term scalability, developers of the specification implemented a number of innovations, such as on-die termination, DFE (decision feedback equalization) to eliminate reflective noise at high I/O speeds, and new and improved training modes.

Improving efficiency

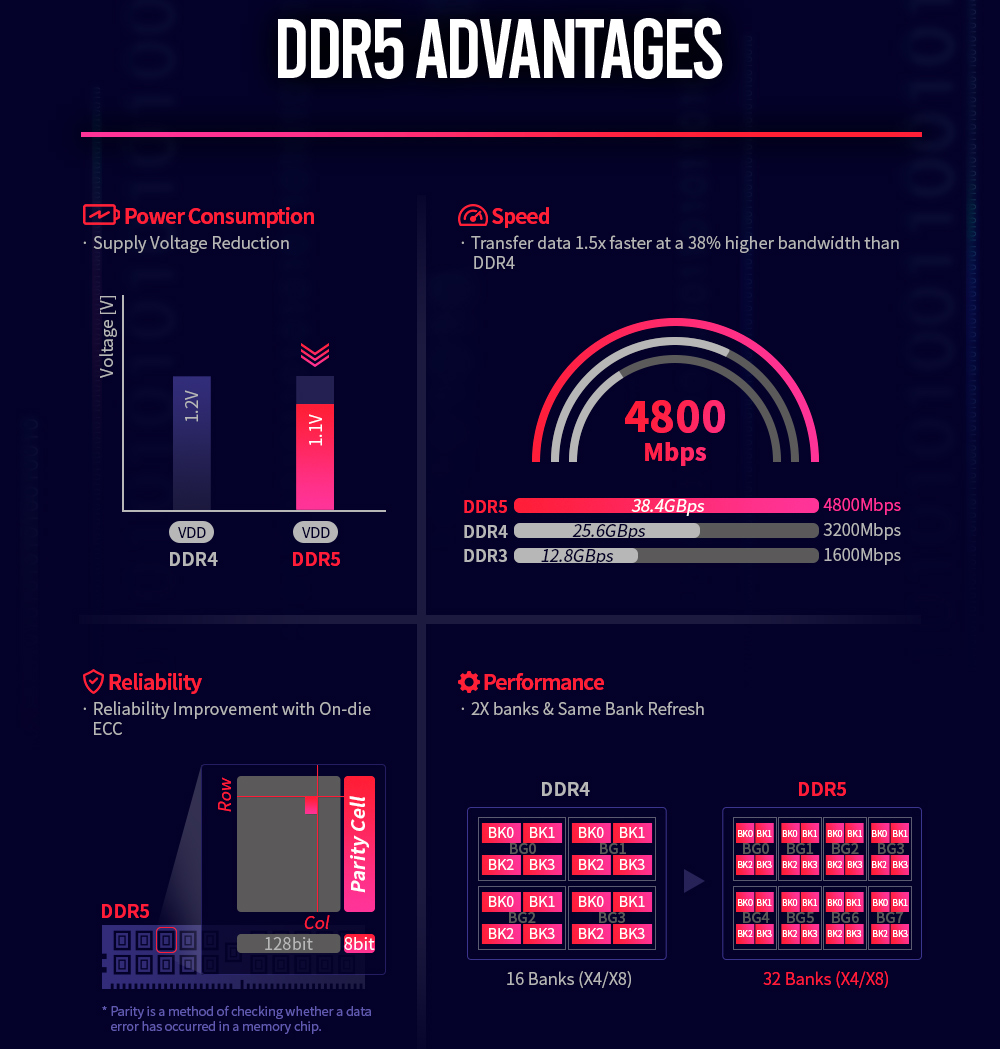

To increase the efficiency of memory reads, DDR5 raises the burst length to 16 transfers (BL16) for systems with a 64-byte cache line and optionally to 32 transfers (BL32) for machines featuring a 128-byte cache line. To further improve performance, DDR5 brings better refresh schemes as well as an increased bank group count with the same number of banks per group.

While DDR5 inherits the 288-pin module form factor from DDR4, the architecture of the DIMM changes drastically (as does the pinout). Each DDR5 module has two fully independent 32-bit channels (40-bit with ECC), each with its own 7-bit Address (Add)/Command (Cmd) bus as well as its own data bus. Typically, ‘narrower’ channels tend to offer better utilization, so two independent channels per module will likely increase the real-world bandwidth provided by the module.
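The link between burst length, channel width, and cache-line size is plain arithmetic: a burst delivers (number of transfers) x (data width of the channel). The sketch below assumes DDR4 uses a burst of 8 transfers on a single 64-bit channel, which is a reference point not stated in this article; `bytes_per_burst` is a hypothetical helper name.

```python
def bytes_per_burst(burst_length, channel_data_bits):
    """Bytes delivered by one burst on one channel (ECC bits excluded)."""
    return burst_length * channel_data_bits // 8

ddr4_bl8 = bytes_per_burst(8, 64)    # assumed DDR4 reference: BL8, 64-bit channel
ddr5_bl16 = bytes_per_burst(16, 32)  # DDR5: BL16 on one 32-bit channel
ddr5_bl32 = bytes_per_burst(32, 32)  # DDR5 optional BL32 on one 32-bit channel

print(ddr4_bl8, ddr5_bl16, ddr5_bl32)  # 64 64 128
```

Halving the channel width while doubling the burst length keeps one burst equal to one 64-byte cache line, which is why each of the two channels on a module can serve a separate request.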

According to Micron, DDR5 can offer up to 36% higher effective memory bandwidth compared to DDR4 even at the same I/O speed because of architectural improvements.

Cutting power consumption

DRAM power consumption is important both for clients and servers, so DDR5 lowers the memory supply voltage to 1.1 V with an allowable fluctuation range of 3% (i.e., ±0.033 V) and introduces additional power saving modes. Because the allowed fluctuation range is so narrow, all DDR5 modules, both for clients and servers, will come with their own voltage regulating modules and power management ICs to ensure clean power, which will slightly increase their costs. For regular DIMMs, that increase may be noticeable, but for people who buy terabytes of DRAM per socket in the server space, the additional costs of VRMs and PMICs will hardly matter at all.
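As a quick check of the tolerance figure, this small sketch computes the supply window from the nominal voltage and the 3% range quoted above. The function name `supply_window` is hypothetical.

```python
def supply_window(nominal_v, tolerance):
    """Return (low, high) supply voltage limits for a fractional tolerance."""
    delta = nominal_v * tolerance
    return nominal_v - delta, nominal_v + delta

low_v, high_v = supply_window(nominal_v=1.1, tolerance=0.03)
print(f"Allowed range: {low_v:.3f} V to {high_v:.3f} V")
```

The window is only 66 mV wide in total, which illustrates why regulation is moved onto the module itself.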

In general, on-module VRMs and PMICs will simplify motherboard design, which will be a good thing for platforms featuring dozens of memory slots. Meanwhile, it will be interesting to see how makers of enthusiast-grade memory modules differentiate themselves with superior voltage regulators and higher I/O speeds.

Improving yields and RAS

Building memory devices with a 16 Gb or 64 Gb capacity, high clocks, and data transfer rates of up to 6.4 Gbps per pin is tricky for DRAM makers. To improve yields and overall stability, DDR5 introduces automatic on-die single-bit error correction (SEC) that fixes possible errors before outputting data to the controller. On the system level, DDR5 continues to support Hamming code-based ECC to detect double-bit errors.
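To show the principle behind single-bit error correction, here is a toy Hamming(7,4) code in Python. This is an illustration only: the code that DDR5 devices actually use on-die is not described in this article, and the function names below are hypothetical.

```python
def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword (positions 1..7)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def hamming74_correct(codeword):
    """Fix at most one flipped bit; return (data bits, error position or 0)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s4  # syndrome equals the 1-based error position
    if position:
        c[position - 1] ^= 1         # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], position

original = [1, 0, 1, 1]
stored = hamming74_encode(original)
stored[4] ^= 1                       # simulate a single-bit fault at position 5
recovered, error_position = hamming74_correct(stored)
print(recovered, error_position)     # [1, 0, 1, 1] 5
```

A code like this corrects any single flipped bit per codeword; detecting double-bit errors as well requires an extra overall parity bit, which this sketch omits.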

In addition, DDR5 brings support for an error check and scrub (ECS) function that makes it possible to detect potential DRAM failures early and avoid or reduce downtime. ECS addresses the demands of hyperscale customers that run tens of thousands of servers and are eager to lower their maintenance costs and reduce downtime.

Coming in 2021-2022

Traditionally, JEDEC publishes new DRAM standards well ahead of their commercial availability. As we know from unofficial sources, AMD, IBM, and Intel are all working on DDR5-supporting server platforms that will be available starting in late 2021. Meanwhile, JEDEC says that DDR5-supporting client devices will hit the market after server platforms.

To speed up the design, development, and qualification of next-generation DDR5-based platforms, Micron today launched its DDR5 Technology Enablement Program together with Cadence, Montage, Rambus, Renesas, and Synopsys. Qualified participants of the program will be able to get DDR5 devices and modules, module components (DDR5 RCD, DDR5 DB, DDR5 SPD-Hub, DDR5 PMIC, DDR5 temperature sensor, etc.), data sheets, electrical and thermal models, as well as consultation on signal integrity and other technical support.

Via: JEDEC.org

Anton Shilov is the News Editor at AnandTech, Inc. For more than four years, he has been writing for magazines and websites such as AnandTech, TechRadar, Tom's Guide, Kit Guru, EE Times, Tech & Learning, EE Times Asia, Design & Reuse.