Copilot for Security is not an oxymoron – it's a potential game-changer for security-starved businesses

Defending at machine speed


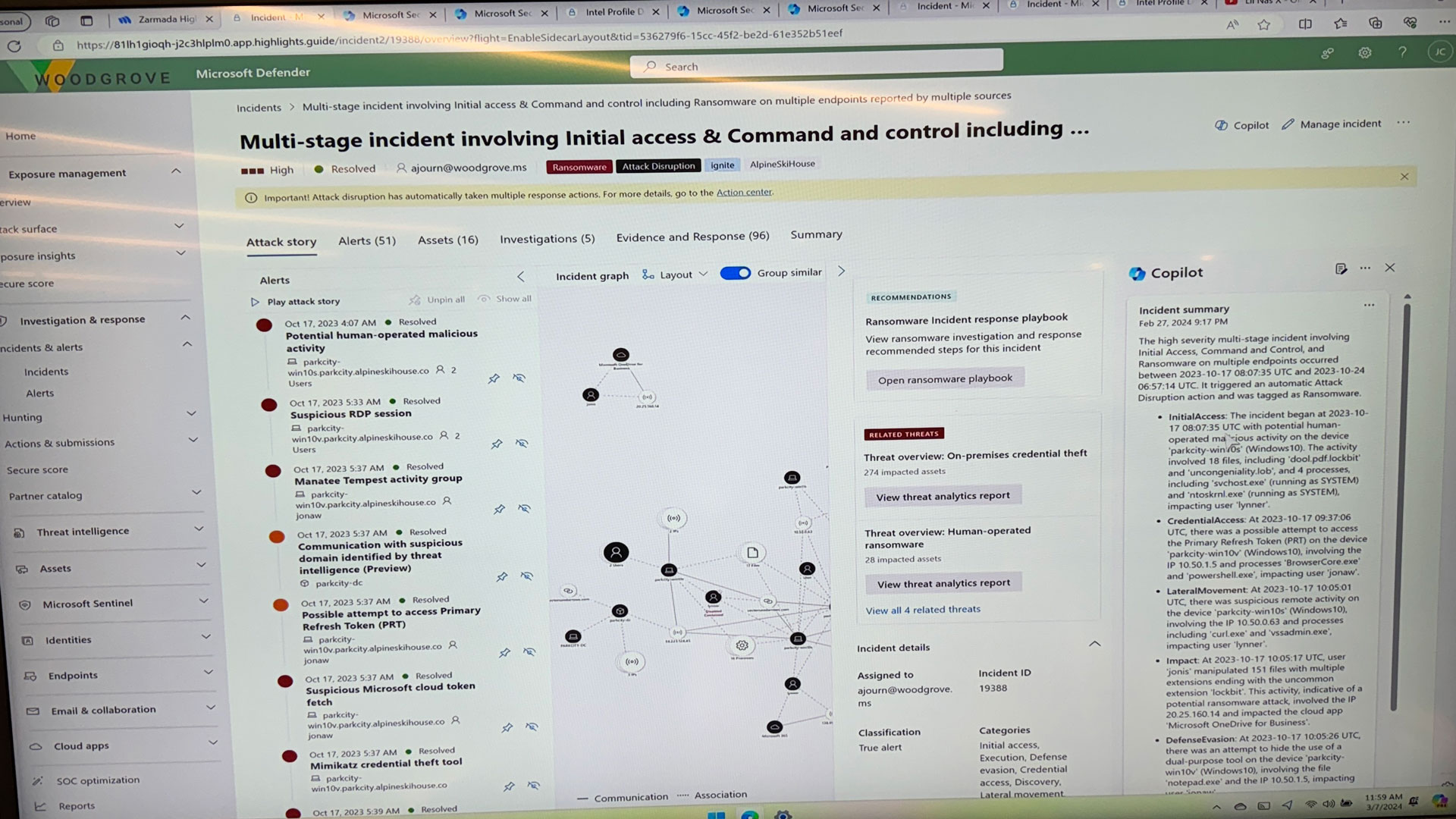

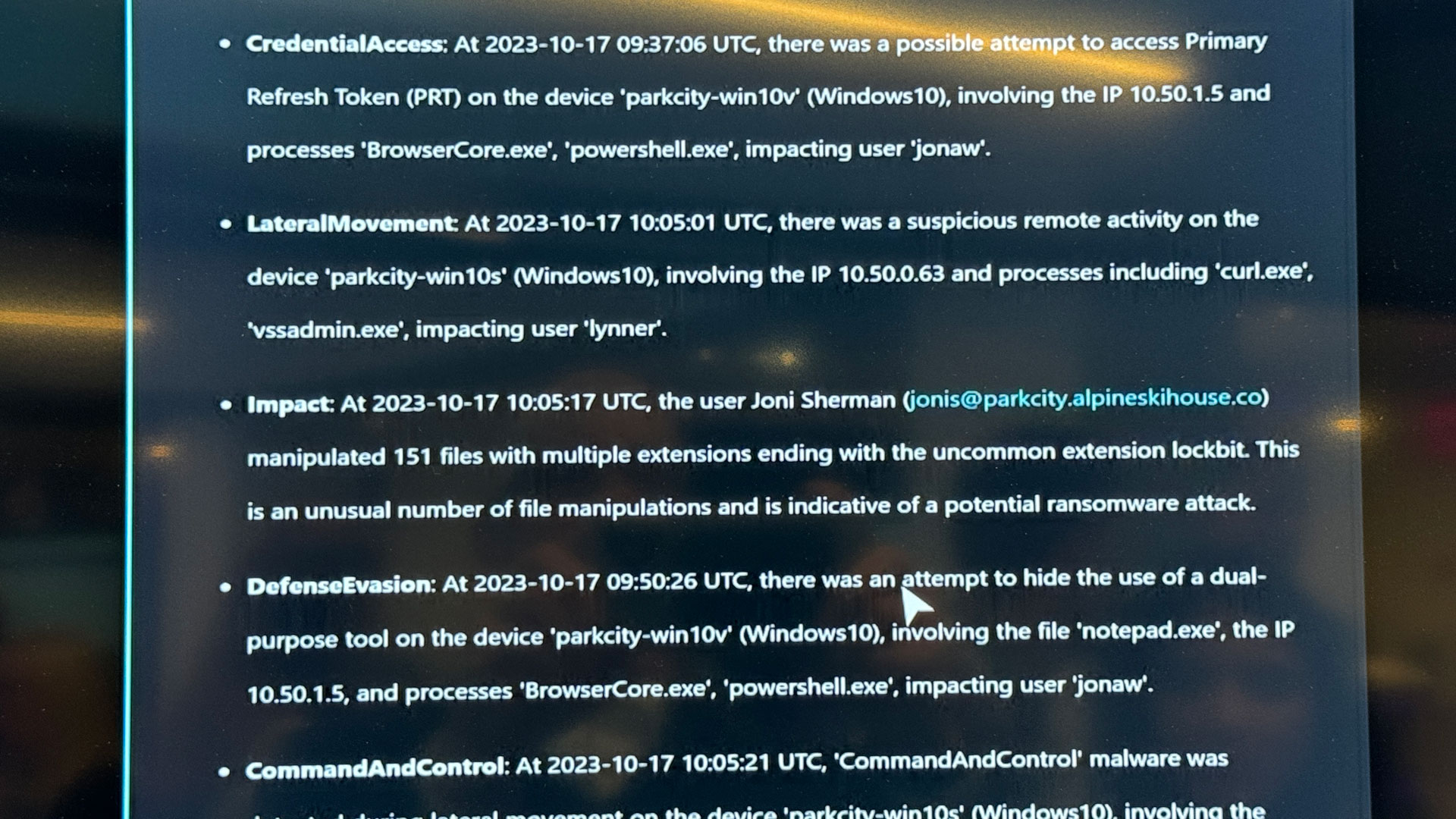

Picture this: you're a brand-new security operations coordinator at a major company that's been hit with dozens of daily ransomware attack attempts. You need to analyze, understand, and develop a threat defense plan – and all on your first day.

Naturally, Microsoft thinks generative AI can give a big assist here. Now, after a year of beta testing, the company is officially rolling out Copilot for Security, a platform that might make that first day go much, much more smoothly.

In some ways, Copilot for Security (originally called 'Security Copilot') is like a bespoke version of the Copilot generative AI (based on GPT-4) you've seen in Windows, on Microsoft 365, and in the increasingly popular mobile app, but one with enterprise-level security on the brain.

When it comes to security, companies of all sizes need all the help they can get. According to Microsoft, there are 4,000 password attacks per second and 300 unique nation-state and criminal threat actors. The company told us it takes just 72 minutes from the moment someone in your organization clicks a phishing link for one of these attackers to gain full access to your data. These attacks cost businesses trillions of dollars every year.

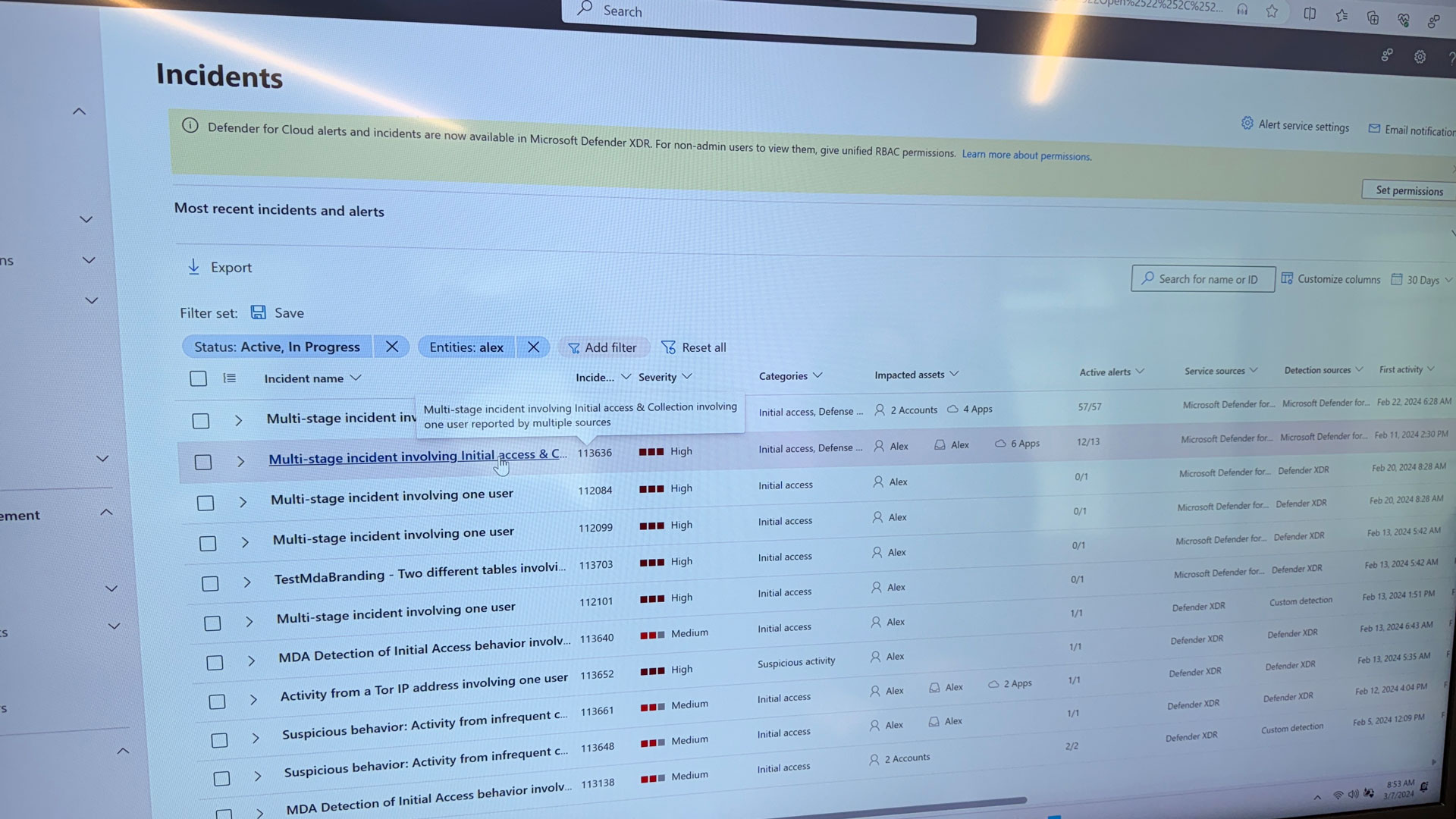

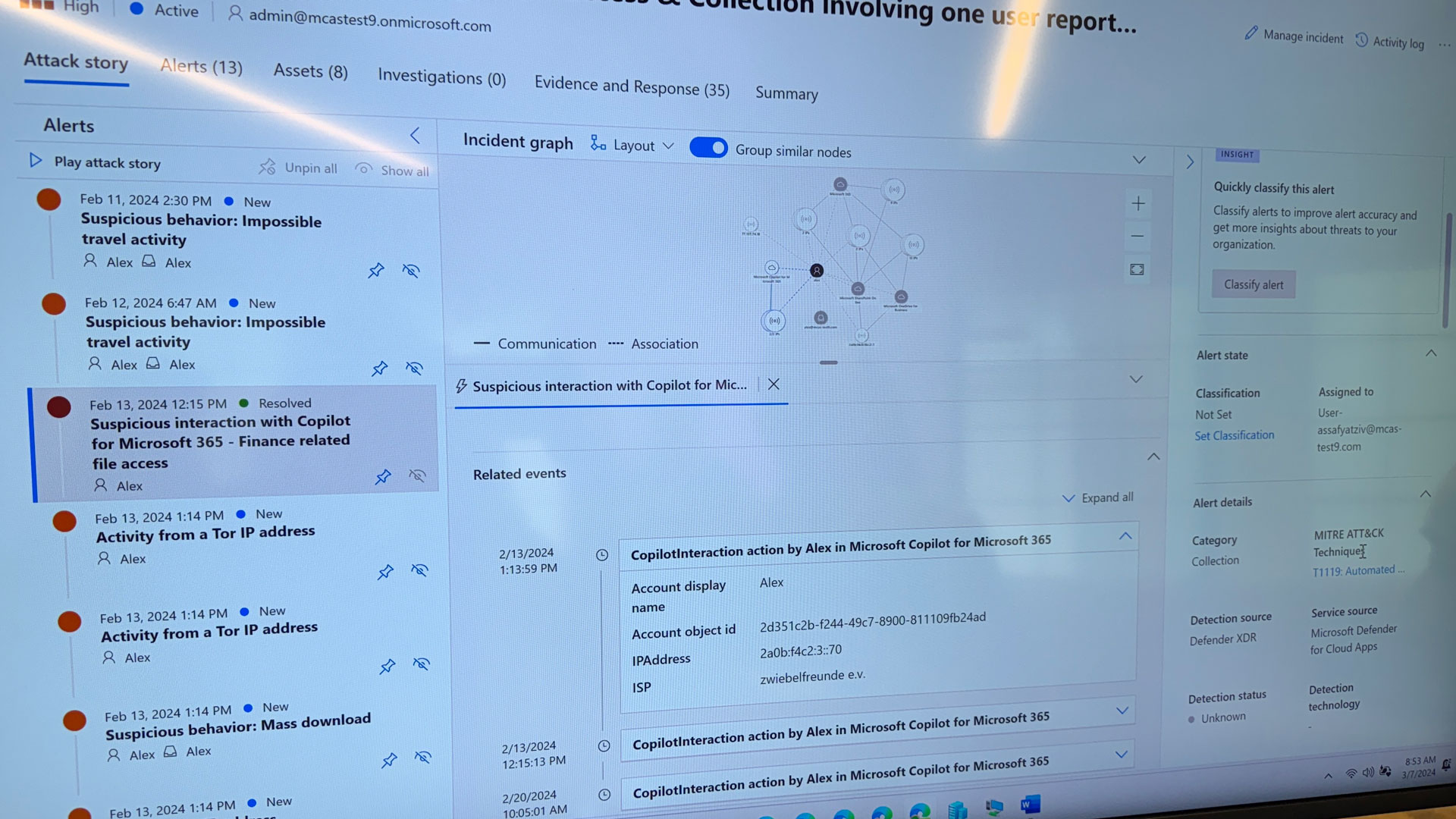

In demos, I was shown how Microsoft Copilot for Security acts as a sort of intense and ultra-fast security consultant, able to read through complex hash files and scripts to divine their true intent, and quickly recognize both known threats and things that act like existing threats. Microsoft claims that using such a service could help address the security personnel talent shortage.

The platform, which will be priced according to usage, based on the number of security compute units you consume (Microsoft calls this a "pay-as-you-go" model), is decidedly not a doer. It will not, at this point, delete suspicious files or block emails. Instead, it aims to explain, guide, and suggest. Plus, because it's a prompt-based system, you can ask it questions specific to its analysis. If Greg in IT is found downloading or altering hundreds of files, you can ask for more details about his actions.

Microsoft Copilot for Security is designed to integrate with Microsoft tools, but will also work with a wide array of plugins.


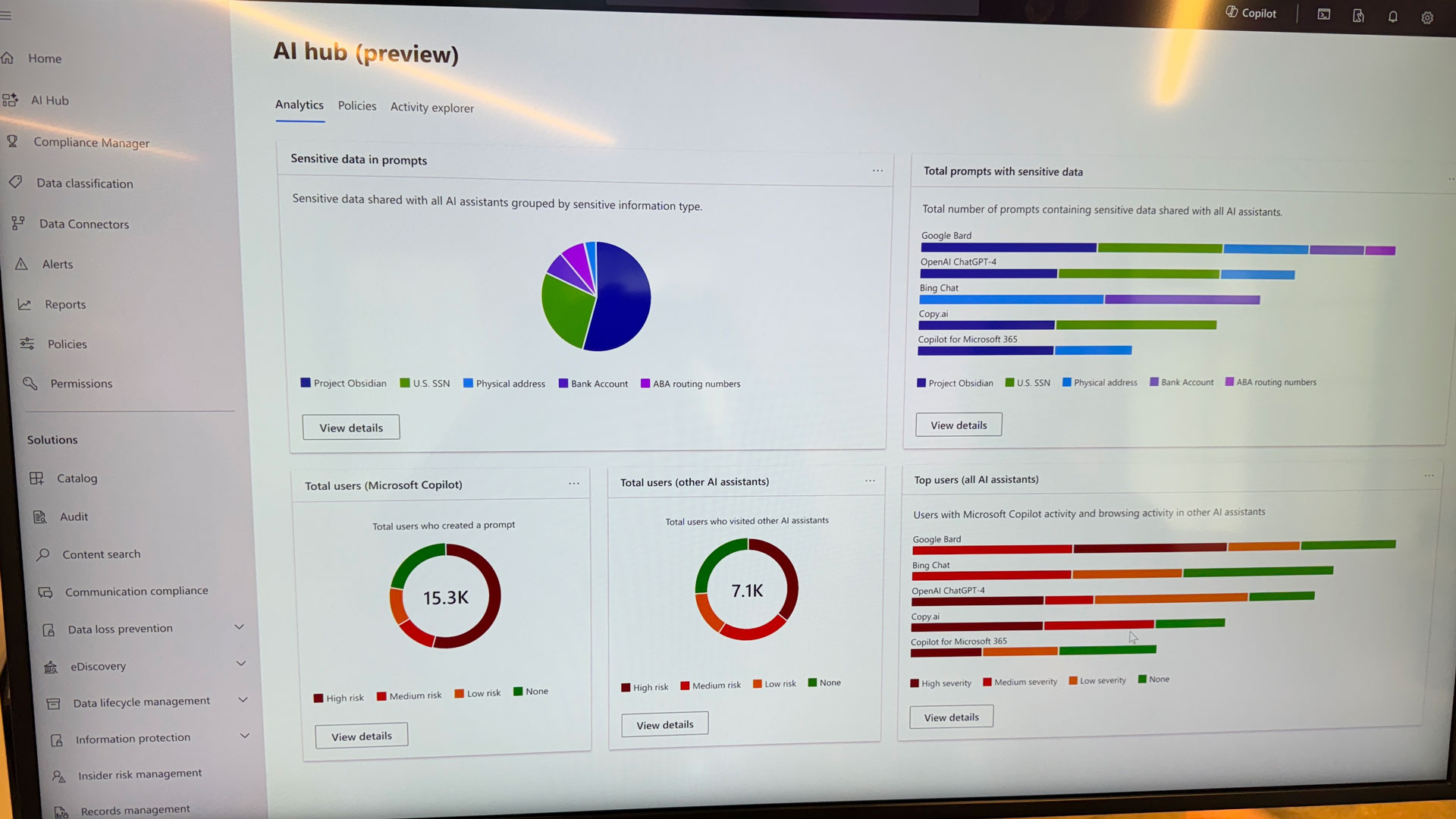

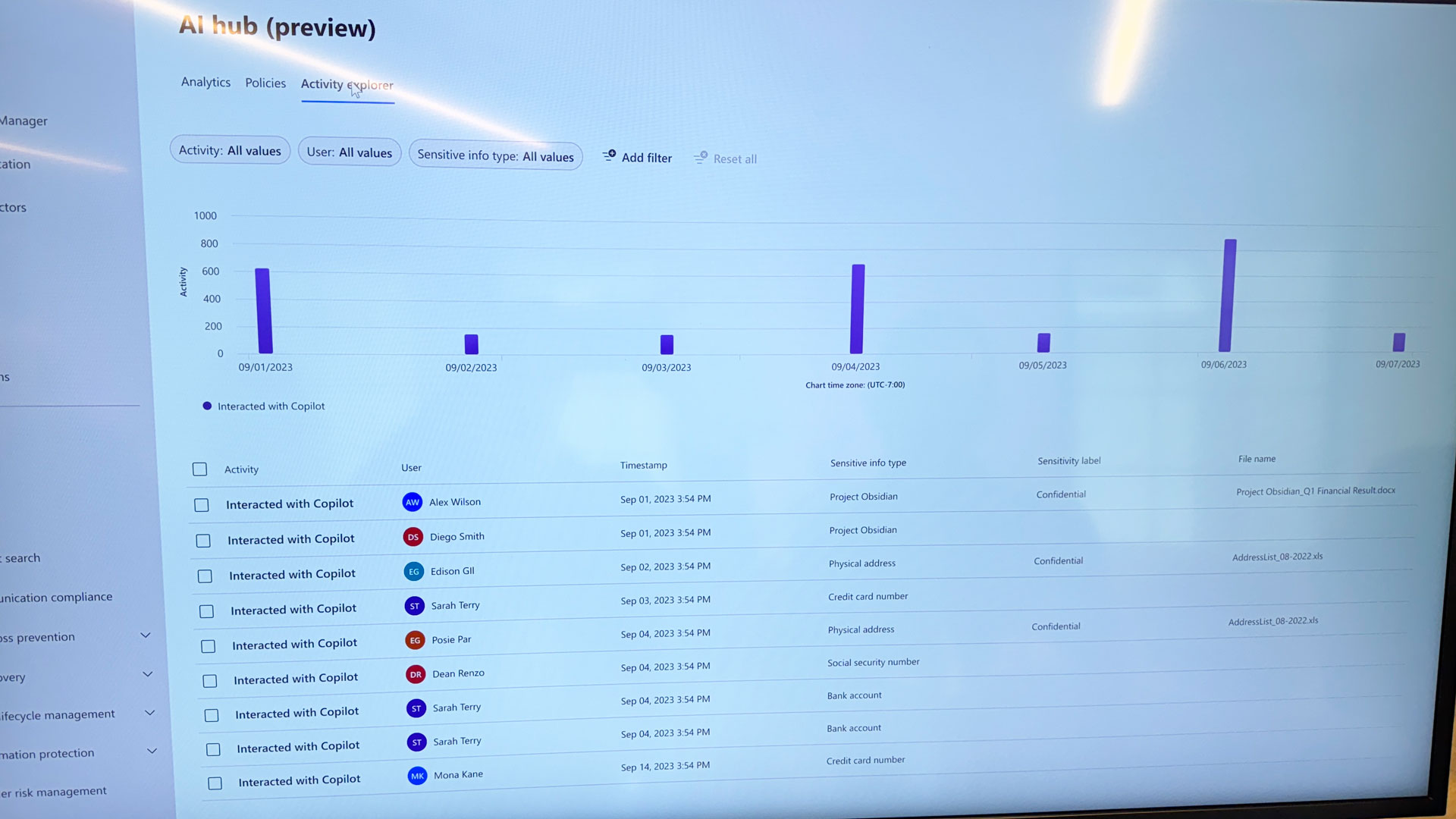

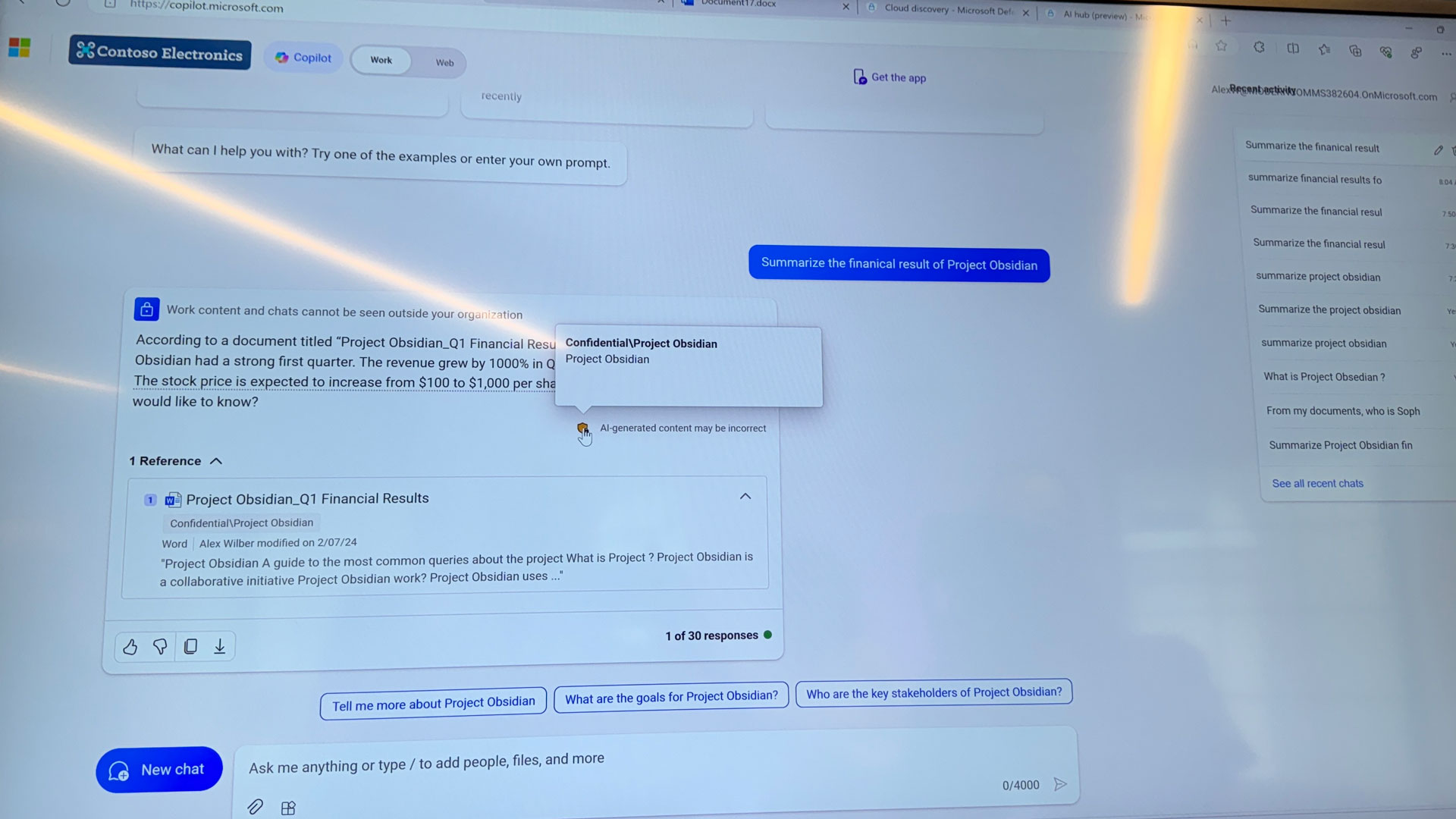

It can also vet other generative AI platforms, and even watch for when employees start sharing confidential, proprietary, or even encrypted company information with these chatbots. If you don't want such files shared with certain third-party chatbots, you can set a rule to that effect, and Copilot for Security will recognize the file's sensitivity, add a 'confidential' label, and automatically block sharing.

The benefit of using an AI is that you can analyze a threat using natural language, rather than digging through menus for the right tool or action. The process becomes a give-and-take with a powerful, security-aware system that knows the context of your conversation and can both dig in and guide you in real time.

Emphasis on assistance

Despite all this analysis and guidance, Microsoft Copilot for Security does not take action itself; it relies on Windows Defender or other security systems for mitigation.

Microsoft claims that Copilot for Security can make almost any security professional more effective. The company has been beta-testing the platform for a year, and its initial findings are encouraging. For those just starting out in the industry, the company found that Copilot for Security helped them be 26% faster and 34% more accurate in their threat assessments, while those with experience were 2% faster and 7% more productive when using the platform.

More importantly, Microsoft claims that, compared with working without it, the system is 46% more accurate at security summarization and incident analysis.

Copilot for Security enters general availability on April 1, and that's no joke.


A 38-year industry veteran and award-winning journalist, Lance has covered technology since PCs were the size of suitcases and “on line” meant “waiting.” He’s a former Lifewire Editor-in-Chief, Mashable Editor-in-Chief, and, before that, Editor-in-Chief of PCMag.com and Senior Vice President of Content for Ziff Davis, Inc. He also wrote a popular weekly tech column for Medium called The Upgrade.

Lance Ulanoff makes frequent appearances on national, international, and local news programs including Live with Kelly and Mark, the Today Show, Good Morning America, CNBC, CNN, and the BBC.