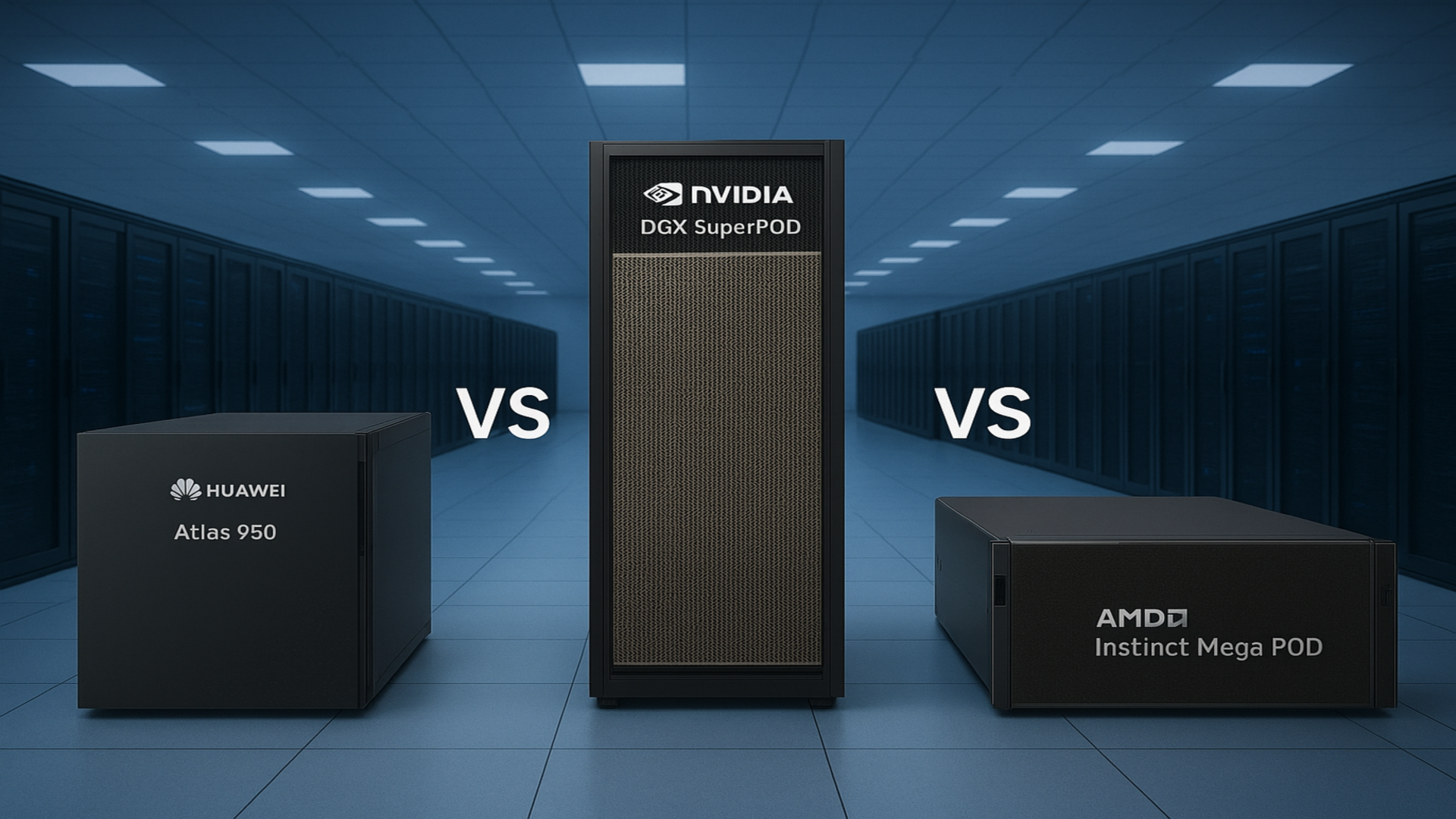

Huawei Atlas 950 SuperPoD vs Nvidia DGX SuperPOD vs AMD Instinct Mega POD: How do they compare?

Memory bandwidth defines the battle, fueling trillions of AI model parameters

- Huawei stacks thousands of NPUs to show brute-force supercomputing dominance

- Nvidia delivers polish, balance, and proven AI performance that enterprises trust

- AMD teases radical networking fabrics to push scalability into new territory

The race to build the most powerful AI supercomputing systems is intensifying, and each major vendor now wants a flagship cluster that proves it can handle the next generation of trillion-parameter models and data-heavy research.

Huawei’s recently announced Atlas 950 SuperPoD, Nvidia’s DGX SuperPOD, and AMD’s upcoming Instinct MegaPod each represent different approaches to solving the same problem.

They all aim to deliver massive compute, memory, and bandwidth in one scalable package, powering AI tools for generative models, drug discovery, autonomous systems, and data-driven science. But how do they compare?

| Category | Huawei Ascend 950DT | NVIDIA H200 | AMD Radeon Instinct MI300 |
|---|---|---|---|
| Chip Family / Name | Ascend 950 series | H200 (GH100, Hopper) | Radeon Instinct MI300 (Aqua Vanjaram) |
| Architecture | Proprietary Huawei AI accelerator | Hopper GPU architecture | CDNA 3.0 |
| Process / Foundry | Not yet publicly confirmed | 5 nm (TSMC) | 5 nm (TSMC) |
| Transistors | Not specified | 80 billion | 153 billion |
| Die Size | Not specified | 814 mm² | 1017 mm² |
| Optimization | Decode-stage inference & model training | General-purpose AI & HPC acceleration | AI/HPC compute acceleration |
| Supported Formats | FP8, MXFP8, MXFP4, HiF8 | FP16, FP32, FP64 (via Tensor/CUDA cores) | FP16, FP32, FP64 |
| Peak Performance | 1 PFLOPS (FP8 / MXFP8 / HiF8), 2 PFLOPS (MXFP4) | FP16: 241.3 TFLOPS, FP32: 60.3 TFLOPS, FP64: 30.2 TFLOPS | FP16: 383 TFLOPS, FP32/FP64: 47.87 TFLOPS |
| Vector Processing | SIMD + SIMT hybrid, 128-byte memory access granularity | SIMT with CUDA and Tensor cores | SIMT + Matrix/Tensor cores |
| Memory Type | HiZQ 2.0 proprietary HBM (for decode & training variant) | HBM3e | HBM3 |
| Memory Capacity | 144 GB | 141 GB | 128 GB |
| Memory Bandwidth | 4 TB/s | 4.89 TB/s | 6.55 TB/s |
| Memory Bus Width | Not specified | 6144-bit | 8192-bit |
| L2 Cache | Not specified | 50 MB | Not specified |
| Interconnect Bandwidth | 2 TB/s | Not specified | Not specified |
| Form Factors | Cards, SuperPoD servers | PCIe 5.0 x16 (server/HPC only) | PCIe 5.0 x16 (compute card) |
| Base / Boost Clock | Not specified | 1365 / 1785 MHz | 1000 / 1700 MHz |
| Cores / Shaders | Not specified | CUDA: 16,896, Tensor: 528 (4th Gen) | 14,080 shaders, 220 CUs, 880 Tensor cores |
| Power (TDP) | Not specified | 600 W | 600 W |
| Bus Interface | Not specified | PCIe 5.0 x16 | PCIe 5.0 x16 |
| Outputs | None (server use) | None (server/HPC only) | None (compute card) |
| Target Scenarios | Large-scale training & decode inference (LLMs, generative AI) | AI training, HPC, data centers | AI/HPC compute acceleration |
| Release / Availability | Q4 2026 | Nov 18, 2024 | Jan 4, 2023 |

The philosophy behind each system

What makes these systems fascinating is how they reflect the strategies of their makers.

Huawei is leaning heavily on its Ascend 950 chips and a custom interconnect called UnifiedBus 2.0 - the emphasis is on building out compute density at an extraordinary scale, then networking it together seamlessly.

Nvidia has spent years refining its DGX line and now offers the DGX SuperPOD as a turnkey solution, integrating GPUs, CPUs, networking, and storage into a balanced environment for enterprises and research labs.

AMD is preparing to join the conversation with the Instinct MegaPod, which aims to scale around its future MI500 accelerators and a brand-new networking fabric called UALink.

While Huawei talks about exaFLOP levels of performance today, Nvidia highlights a stable, battle-tested platform, and AMD pitches itself as the challenger offering superior scalability down the road.

At the heart of these clusters are heavy-duty processors built to deliver immense computational power and handle data-intensive AI and HPC workloads.

Huawei’s Atlas 950 SuperPoD is designed around 8,192 Ascend 950 NPUs, with reported peaks of 8 exaFLOPS in FP8 and 16 exaFLOPS in FP4 - so it is clearly aimed at handling both training and inference at an enormous scale.
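The headline figures can be cross-checked against the per-chip peaks in the comparison table. The sketch below is only a back-of-the-envelope calculation: it assumes every one of the 8,192 NPUs reaches its theoretical peak at once and ignores any scaling losses, and the function name is purely illustrative.

```python
# Per-chip peak throughput in PFLOPS, taken from the comparison table.
PER_CHIP_PFLOPS = {"FP8": 1.0, "MXFP4": 2.0}
NPU_COUNT = 8192  # NPUs in one Atlas 950 SuperPoD, per the article


def aggregate_exaflops(per_chip_pflops: float, chips: int) -> float:
    """Theoretical aggregate peak in EFLOPS (1 EFLOPS = 1000 PFLOPS).

    Assumes perfect linear scaling, which real clusters do not achieve.
    """
    return per_chip_pflops * chips / 1000


for fmt, pflops in PER_CHIP_PFLOPS.items():
    print(f"{fmt}: {aggregate_exaflops(pflops, NPU_COUNT):.2f} EFLOPS")
```

Under these assumptions the totals come to roughly 8.2 and 16.4 exaFLOPS, so the 8-exaFLOPS figure lines up with the table's FP8 peak and the 16-exaFLOPS figure with its MXFP4 peak.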

Nvidia’s DGX SuperPOD, built on DGX A100 nodes, delivers a different flavor of performance - with 20 nodes containing a total of 160 A100 GPUs, it looks smaller in terms of chip count.

However, each GPU is optimized for mixed precision AI tasks and paired with high-speed InfiniBand to keep latency low.

AMD’s MegaPod is still on the horizon, but early details suggest it will pack 256 Instinct MI500 GPUs alongside 64 Zen 7 “Verano” CPUs.

While its raw compute numbers are not yet published, AMD’s goal is to rival or exceed Nvidia’s efficiency and scale, especially as it uses next-generation PCIe Gen 6 and 3-nanometer networking ASICs.

Feeding thousands of accelerators requires staggering amounts of memory and interconnect speed.

Huawei claims the Atlas 950 SuperPoD carries more than a petabyte of memory, with a total system bandwidth of 16.3 petabytes per second.

This kind of throughput is designed to keep data moving without bottlenecks across its racks of NPUs.
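As a rough sanity check, the pod-level memory and bandwidth claims can be compared with the per-chip values in the table (144 GB of memory and 2 TB/s of interconnect bandwidth per Ascend 950DT). This sketch assumes decimal units and assumes the system bandwidth figure is simply the sum of per-chip interconnect bandwidth; Huawei has not confirmed that this is how the number was derived, and `pod_totals` is a hypothetical helper.

```python
NPU_COUNT = 8192               # NPUs per SuperPoD, per the article
MEMORY_PER_NPU_GB = 144        # from the comparison table
INTERCONNECT_PER_NPU_TB_S = 2  # TB/s, from the comparison table


def pod_totals(chips: int, mem_gb: float, link_tb_s: float) -> tuple[float, float]:
    """Return (total memory in PB, total interconnect bandwidth in PB/s).

    Uses decimal prefixes: 1 PB = 1,000,000 GB and 1 PB/s = 1,000 TB/s.
    """
    total_memory_pb = chips * mem_gb / 1_000_000
    total_bandwidth_pb_s = chips * link_tb_s / 1_000
    return total_memory_pb, total_bandwidth_pb_s


memory_pb, bandwidth_pb_s = pod_totals(
    NPU_COUNT, MEMORY_PER_NPU_GB, INTERCONNECT_PER_NPU_TB_S
)
print(f"Accelerator memory: {memory_pb:.2f} PB")
print(f"Aggregate interconnect: {bandwidth_pb_s:.2f} PB/s")
```

With those inputs the estimate is about 1.18 PB of memory and 16.4 PB/s of interconnect bandwidth, which is in the same range as the "more than a petabyte" and 16.3 PB/s claims.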

Nvidia’s DGX SuperPOD does not attempt to match such headline numbers, instead relying on 52.5 terabytes of system memory and 49 terabytes of high-bandwidth GPU memory, coupled with InfiniBand links of up to 200Gbps per node.

The focus here is on predictable performance for workloads that enterprises already run.

AMD, meanwhile, is targeting the bleeding edge with its Vulcano switch ASICs offering 102.4Tbps capacity and 800Gbps per tray external throughput.

Combined with UALink and Ultra Ethernet, this suggests a system that will surpass current networking limits once it launches in 2027.
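To put the switch capacity in perspective, a simple division shows how many 800Gbps links a 102.4Tbps ASIC could serve at full rate. This is only an illustration: treating 800Gbps as a per-link rate is an assumption (the article gives it as per-tray external throughput), and AMD has not published the actual port configuration. The function name is hypothetical.

```python
SWITCH_CAPACITY_GBPS = 102_400  # 102.4 Tbps, expressed in Gbps
LINK_SPEED_GBPS = 800           # assumed per-link rate (not confirmed by AMD)


def max_full_rate_links(switch_gbps: int, link_gbps: int) -> int:
    """Number of links a switch could serve at full rate.

    Ignores protocol overhead and any oversubscription.
    """
    return switch_gbps // link_gbps


print(max_full_rate_links(SWITCH_CAPACITY_GBPS, LINK_SPEED_GBPS))
```

Under that assumption a single switch ASIC could feed 128 such links.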

One of the biggest differences between the three contenders lies in how they are physically built.

Huawei’s design allows for expansion from a single SuperPoD to half a million Ascend chips in a SuperCluster.

There are also claims that an Atlas 950 configuration could involve more than a hundred cabinets spread over a thousand square meters.

Nvidia’s DGX SuperPOD takes a more compact approach, with its 20 nodes integrated in a cluster style that enterprises can deploy without needing a stadium-sized data hall.

AMD’s MegaPod splits the difference, with two racks of compute trays plus one dedicated networking rack, showing that its architecture is centered around a modular but powerful layout.

In terms of availability, Nvidia’s DGX SuperPOD is already on the market, Huawei’s Atlas 950 SuperPoD is expected in late 2026, and AMD’s MegaPod is planned for 2027.

That said, these systems are fighting very different battles under the same banner of AI supercomputing supremacy.

Huawei’s Atlas 950 SuperPoD is a show of brute force, stacking thousands of NPUs and jaw-dropping bandwidth to dominate at scale, but its size and proprietary design may make it harder for outsiders to adopt.

Nvidia’s DGX SuperPOD looks smaller on paper, yet it wins on polish and reliability, offering a proven platform that enterprises and research labs can plug in today without waiting for promises.

AMD’s MegaPod, still in development, has the makings of a disruptor, with its MI500 accelerators and radical new networking fabric that could tilt the balance once it arrives, but until then, it is a challenger talking big.

Via Huawei, Nvidia, TechPowerUp

Efosa has been writing about technology for over 7 years, initially driven by curiosity but now fueled by a strong passion for the field. He holds both a Master's and a PhD in sciences, which provided him with a solid foundation in analytical thinking. Efosa developed a keen interest in technology policy, specifically exploring the intersection of privacy, security, and politics. His research delves into how technological advancements influence regulatory frameworks and societal norms, particularly concerning data protection and cybersecurity. Upon joining TechRadar Pro, in addition to privacy and technology policy, he is also focused on B2B security products. Efosa can be contacted at this email: udinmwenefosa@gmail.com
