This open source ChatGPT alternative isn’t for everyone

Users new to AI tools are probably out of luck

A new text-generating language model combines Google's own PaLM model with a technique known as Reinforcement Learning with Human Feedback (RLHF) to create an open source tool that, in theory, can do anything OpenAI's ChatGPT can.

For most, however, this will remain a theory. Unlike ChatGPT, AI developer Philip Wang's PaLM + RLHF doesn't come pre-trained on any of the text data the model needs to learn from. Users must compile their own data corpora and use their own hardware both to train the model and to process requests.

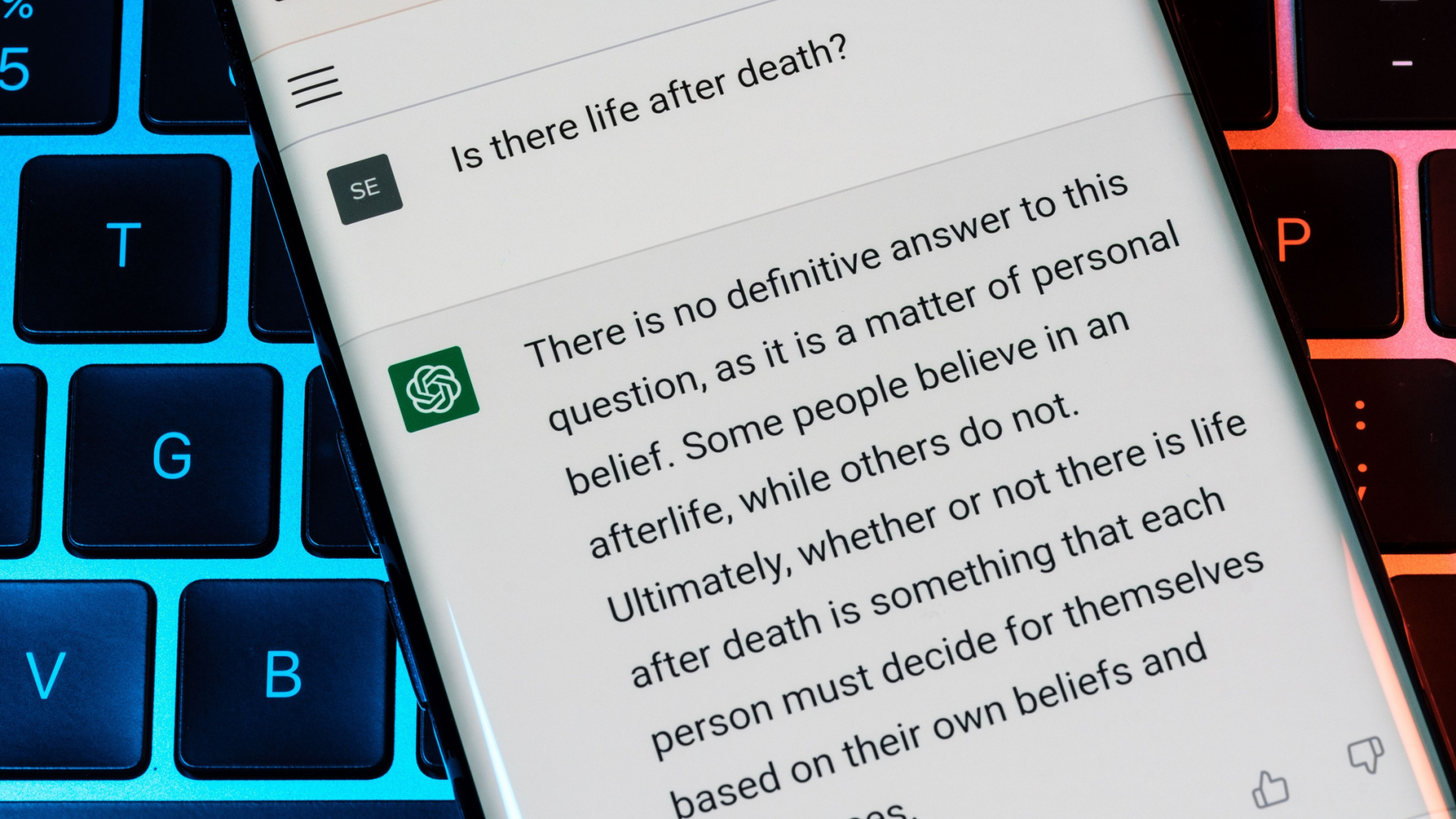

Text generation models that respond to human inputs, like ChatGPT and PaLM + RLHF, are the latest craze in artificial intelligence. Simply put, they predict the appropriate words after learning semantic patterns from an existing data set, which could consist of anything from ebooks to internet flame wars.

Creating accessible artificial intelligence

Although PaLM + RLHF doesn't arrive pre-trained, the Reinforcement Learning with Human Feedback technique it uses is designed to produce a more intuitive user experience.

As explained by TechCrunch, RLHF trains a language model by producing a wide range of responses to a human prompt, which are then ranked by human volunteers. Those rankings are then used to train a “reward model”, which sorts the responses by order of preference.
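
The ranking step can be sketched in a few lines. Below, a single human ranking of three responses is turned into every implied "better vs. worse" pair, and a linear reward model is fitted so that preferred responses score higher, using a pairwise logistic loss (a common choice for reward models). The feature vectors, response labels and learning settings are all hypothetical; real reward models are neural networks that score raw text. In full RLHF, the trained reward model is then used to fine-tune the language model itself with reinforcement learning, a step this sketch leaves out.

```python
import math
from itertools import combinations

# Hypothetical: each candidate response to one prompt, reduced to a
# two-number feature vector. A real reward model would read the text itself.
responses = {
    "A": [0.9, 0.2],
    "B": [0.5, 0.1],
    "C": [0.1, 0.8],
}
ranking = ["A", "B", "C"]  # a volunteer's ranking, best first


def reward(weights, features):
    """Linear reward model: weighted sum of a response's features."""
    return sum(w * x for w, x in zip(weights, features))


def train_reward_model(responses, ranking, lr=0.5, steps=200):
    """Fit weights so higher-ranked responses get higher rewards."""
    weights = [0.0] * len(next(iter(responses.values())))
    pairs = list(combinations(ranking, 2))  # every (better, worse) pair
    for _ in range(steps):
        grad = [0.0] * len(weights)
        for better, worse in pairs:
            fb, fw = responses[better], responses[worse]
            margin = reward(weights, fb) - reward(weights, fw)
            # Loss is -log(sigmoid(margin)); its derivative w.r.t. the
            # margin is -(1 - sigmoid(margin)).
            coeff = -(1.0 - 1.0 / (1.0 + math.exp(-margin)))
            for i in range(len(weights)):
                grad[i] += coeff * (fb[i] - fw[i])
        # Gradient descent step on the average loss over all pairs.
        weights = [w - lr * g / len(pairs) for w, g in zip(weights, grad)]
    return weights


weights = train_reward_model(responses, ranking)
scores = {name: reward(weights, f) for name, f in responses.items()}
print(sorted(scores, key=scores.get, reverse=True))  # → ['A', 'B', 'C']
```

Once trained, the reward model can score new responses it has never seen ranked, which is what lets it stand in for human volunteers during fine-tuning.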

This is not a cheap process, and its cost will put training the model out of reach for all but the wealthiest AI enthusiasts. PaLM has 540 billion parameters (the internal values that make up the language model), all of which must be trained on data, and a 2020 study found that training a model with just 1.6 billion parameters could cost anywhere from $80,000 to $1.6 million.
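
A rough way to see why a 540-billion-parameter model is so much costlier is the widely used rule of thumb that training compute is about 6 × parameters × training tokens floating-point operations. The sketch below applies it under a loudly stated assumption: both models see the same hypothetical 300 billion tokens. It estimates compute only, not dollars, since real costs also depend on hardware, pricing and efficiency.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute via the C ~ 6 * N * D rule of thumb."""
    return 6 * params * tokens


TOKENS = 300e9  # hypothetical training-set size, held equal for both models

small = training_flops(1.6e9, TOKENS)  # model size from the 2020 study
palm = training_flops(540e9, TOKENS)   # PaLM-sized model

ratio = palm / small
print(f"{small:.2e} vs {palm:.2e} FLOPs, ratio {ratio:.1f}x")
```

With the token count held fixed, the ratio is simply 540 / 1.6, or about 337.5, so a PaLM-sized model needs a few hundred times the compute of the model in the 2020 study before any other factor is considered.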

Right now, it seems as though we're relying on a wealthy benefactor to step in, train the model and release it to the public. Such reliance hasn't ended well in the past, but other groups are already working to replicate ChatGPT's capabilities and release them as free software.

Research groups CarperAI and EleutherAI are partnering with the startups Scale AI and Hugging Face to release the first language model trained with human feedback that’s ready to run out of the box.

And, although it's not quite ready yet, LAION, the nonprofit that supplied the training data set for the text-to-image model Stable Diffusion, has created a similar project on GitHub that aims to supersede OpenAI by letting its model use APIs, compile its own research and be personalized by users, all while being optimized for consumer hardware.

Luke Hughes holds the role of Staff Writer at TechRadar Pro, producing news, features and deals content across topics ranging from computing to cloud services, cybersecurity, data privacy and business software.