Think outside the computer

Looking to nature for inspiration about the computing systems of tomorrow


An ant weighing just 1 or 2 milligrams will navigate around obstacles and hunt for signals with a level of skill and speed that puts our most sophisticated robots to shame. And yet, for all that apparent intelligence, a few isolated ants will meander aimlessly – until the number of ants increases beyond a couple of dozen.

With that, a higher level of intelligence starts to emerge. As the population rises, the transformation becomes staggering. Millions of ants can build “cities” with complex ventilation systems, sewers and recycling facilities. Ants are the only creatures, other than humans, to practice intensive farming. They cultivate living crops, they breed and herd aphids and other insects, “milking” them for food. Ant groups communicate, teach, form teams and go to war.

In my keynote presentation at the NetEvents May 2019 EMEA IT Spotlight in Barcelona, I compared the behaviour of ants to the most advanced data centers, and to the higher-level intelligence needed for effective digital transformation. Such dynamically responsive intelligence does not reside merely in a CPU, a server, a storage box, the network or any individual application. Rather, today’s problems, which crunch massive data sets, need to be optimized across all these elements to create a computer at the data center level.


Just as the ant colony acts as a highly functional organism without centralized control, there is no single governing program in the latest machine-learning models. Instead, the data itself drives the processes in a data center made of compute and storage elements connected by an intelligent network. This “deep learning” is also comparable to the human brain: a huge network of relatively simple processing elements, neurons linked by synapses, that process data as it moves across the network.

A data deluge

To set the scene I used an illustration of the transformation in the very nature of data acquisition. In 2007 Nokia, a $150 billion enterprise decided to invest in the nascent automobile SatNav market. They acquired Navitech, a company with about five million traffic cameras across Europe, realising that a navigation system that kept up to date with real time traffic conditions would offer a major competitive advantage.

In the same year, an Israeli company called Waze was started, with a similar objective – except that Waze gathered the same data not via installing millions of traffic sensors but with an app in every users’ phone. This allowed Waze to quickly deploy tens of millions of traffic sensors at no cost, by leveraging the GPS location chips present in every smart phone, and harvest traffic movement data and upload it to the Waze system. The rest is history: in five or six years Nokia had shrunk to less than the buying price of Navitech, while Waze was snapped up by Google.
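A minimal Python sketch of the crowdsourcing idea: speed reports pushed up from many phones are folded into an average speed per road segment. The `GpsReport` record, the segment IDs and the 20 km/h congestion threshold are hypothetical choices made for this illustration, not Waze's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class GpsReport:
    """One hypothetical speed sample uploaded from a phone."""
    segment_id: str   # road segment the phone was matched to (assumed)
    speed_kmh: float  # speed derived from successive GPS fixes


def average_speeds(reports):
    """Fold a stream of reports into an average speed per segment."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for r in reports:
        totals[r.segment_id] += r.speed_kmh
        counts[r.segment_id] += 1
    return {seg: totals[seg] / counts[seg] for seg in totals}


def congested(speeds, threshold_kmh=20.0):
    """Return segments whose average speed is below an assumed threshold."""
    return sorted(seg for seg, v in speeds.items() if v < threshold_kmh)


if __name__ == "__main__":
    reports = [
        GpsReport("A1", 90.0), GpsReport("A1", 110.0),
        GpsReport("B7", 12.0), GpsReport("B7", 18.0), GpsReport("B7", 15.0),
    ]
    speeds = average_speeds(reports)
    print(speeds)             # {'A1': 100.0, 'B7': 15.0}
    print(congested(speeds))  # ['B7']
```

Each extra phone adds a sensor at no hardware cost; the only server-side work is aggregation, which is the economic asymmetry the story turns on.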

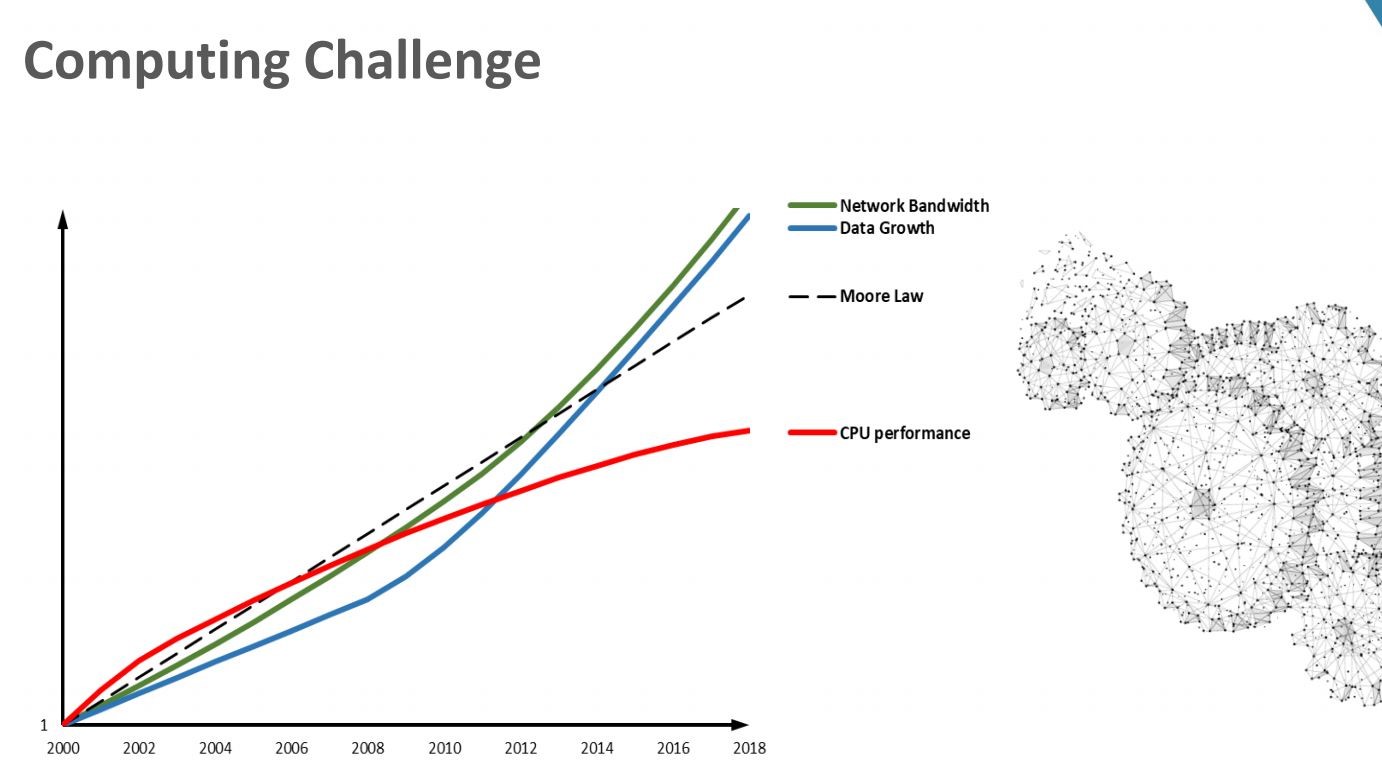

It’s a nice story, and it reflects a fundamental shift from going out to collect data to allowing data to pour in: the difference between drawing water from a well and harvesting rainfall across the land. We see some of the impact of this in my chart above, where the blue Data Growth line steepens soon after 2007 and then soars upward. Also shown is a straight line representing the exponential growth in processing power predicted by Moore’s Law, and a red line showing that actual CPU performance growth is slowing as we approach the physical limits of silicon. But notice how the green Network Bandwidth line is pretty well aligned with Data Growth. The old CPU-centric thinking is failing us: we need to think outside the box, and Think Outside the Computer, to tackle the big problems facing businesses today.


Today no single processing unit is enough to crunch the massive data sets needed to generate actionable business value. What is required instead is to optimize compute, storage, network and application elements across the entire stack, and make them work together as a single data-center-level computer that delivers services across the cluster. Instead of a human programming a machine, it is the data that programs the machine. Just as the massive input from the experience of millions of ants informs intelligent and constructive colony projects, machine learning will mine meaning and relevance from a mountain of IoT data and create new, productive applications.
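As a toy illustration of “the data programs the machine”, the sketch below is never told the rule it ends up following; it learns a line y ≈ w·x + b from example pairs by gradient descent on the mean squared error. The function name, learning rate and step count are arbitrary choices for this example. Real machine-learning systems are vastly larger, but the principle of fitting behaviour to data rather than hand-coding it is the same.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Learn w and b so that w * x + b approximates y, using gradient
    descent on the mean squared error over the examples."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every example under the current parameters.
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error.
        grad_w = 2.0 * sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = 2.0 * sum(errs) / n
        # Step downhill.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Examples generated by a rule the program is never told: y = 2x + 1.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]
    w, b = fit_line(xs, ys)
    print(f"learned w={w:.3f}, b={b:.3f}")           # learned w=2.000, b=1.000
    print(f"prediction for x=10: {w * 10 + b:.2f}")  # prediction for x=10: 21.00
```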

From crowd to cloud

This is related to the cloud, which also changes the delivery model of computing: cloud is moving from a server approach to a service approach. If you have “electricity in your home”, it means that you have sockets from which you can draw electricity, much as you draw water from a tap connected to the mains. If you have “a computer in your home”, it has traditionally meant that you own a box full of computing power, and you are responsible for keeping everything on that box running smoothly. Cloud computing brings us closer to the electrical utility model: the computer is no longer on your desk but becomes more like a socket through which you access services. We need to expand our thinking outside the computer to understand the implications of this change.

If your idea of communication were limited to sending messages by carrier pigeon, your creative imagination might be confined to a single dimension: the search for faster pigeons. But today’s video conferencing offers much more than speed of communication: it offers simultaneous sight and sound from several locations, and even machine translation between languages. To make a truly quantum leap in performance, you have to think outside the box.

A good example is the work we’ve done with Oracle to transform its clustered database platforms. Initially Oracle used conventional networking technology, but no matter how much the system was tuned, the communications software overhead remained the bottleneck that limited performance improvements. Once Oracle embraced a more intelligent network with RDMA (Remote Direct Memory Access), it was able to make a breakthrough. RDMA lets one machine read and write another machine’s memory directly through the network adapter, bypassing the operating system’s networking stack; this removes most of the networking software overhead and brings the cost of accessing a remote resource close to that of accessing a local one.

Oracle took advantage of this technology to use all system resources much more efficiently. In the redesigned Oracle system, network bandwidth increased from 10 to 40 Gb/s, a substantial 4X improvement. But by adopting RDMA and eliminating the traditional network software overhead, Oracle achieved not just a 4-fold increase in speed but a 50-fold performance boost, thanks to more efficient use of intelligent networking.
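Why can removing software overhead matter more than a faster link? A simple first-order model helps: each message costs a fixed per-message software overhead plus its serialization time on the wire. The 8 KiB message size and the two overhead values (20 µs for a conventional software stack, 1 µs with RDMA) are illustrative assumptions, not measurements from the Oracle system, and the model is not meant to reproduce the 50-fold figure, which depends on the whole workload.

```python
def message_time_us(size_bytes, bandwidth_gbps, overhead_us):
    """First-order cost of one message, in microseconds:
    fixed software overhead plus serialization time on the wire."""
    bits_per_us = bandwidth_gbps * 1e3  # 1 Gb/s = 1,000 bits per microsecond
    return overhead_us + size_bytes * 8 / bits_per_us


if __name__ == "__main__":
    SIZE = 8192             # assumed 8 KiB message
    SW_OVERHEAD_US = 20.0   # assumed cost of a conventional network stack
    RDMA_OVERHEAD_US = 1.0  # assumed residual cost with RDMA

    base = message_time_us(SIZE, 10, SW_OVERHEAD_US)
    faster_link = message_time_us(SIZE, 40, SW_OVERHEAD_US)
    rdma = message_time_us(SIZE, 40, RDMA_OVERHEAD_US)

    print(f"40 Gb/s link only: {base / faster_link:.1f}x faster")  # 1.2x
    print(f"40 Gb/s + RDMA:    {base / rdma:.1f}x faster")         # 10.1x
```

With these assumed numbers, quadrupling bandwidth alone buys only about 1.2x, because the fixed overhead dominates each message; it is cutting the overhead that lets the extra bandwidth show through.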

The intelligent network

So it’s not just about how fast you move the data through the cables. Networks are getting more intelligent everywhere, and the secret is to process the data while it moves. Each ant in the colony receives data from its own senses, plus data transmitted via scent signals from other ants. It processes that data itself and emits its own scent signals, which continue to cascade through the network, accumulating significance and applicability for the colony. Similarly, our most advanced network products have computational units within every switch, so we can aggregate data on the fly.

This technology is being used today in HPC and machine learning. When a neural network is trained on a data set using multiple instances of the model, the individual training results must then be consolidated, a step that usually takes as much time as the training itself. Distributing this consolidation speeds up the “parameter server” process tenfold, which can cut training time from days to hours, or from weeks to days.
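The consolidation step can be sketched for synchronous data-parallel training, assuming the common scheme in which each worker computes a gradient on its own shard of the data and a central parameter server averages those gradients before updating the shared model. The vectors, learning rate and function names below are illustrative.

```python
def average_gradients(worker_grads):
    """Element-wise mean of the gradient vectors reported by all workers.

    A central parameter server must receive every one of these full-size
    vectors before it can compute the result.
    """
    n = len(worker_grads)
    dim = len(worker_grads[0])
    return [sum(g[i] for g in worker_grads) / n for i in range(dim)]


def sgd_step(params, grad, lr=0.1):
    """Apply one plain stochastic-gradient-descent update."""
    return [p - lr * g for p, g in zip(params, grad)]


if __name__ == "__main__":
    # Gradients from three hypothetical workers for a two-parameter model.
    grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    avg = average_gradients(grads)
    print(avg)                        # [3.0, 4.0]
    print(sgd_step([0.0, 0.0], avg))  # approximately [-0.3, -0.4]
```

Because the server has to ingest one full vector per worker on every step, its links and processor become the bottleneck as the number of workers grows, which is why this step can rival the training itself in cost.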

We call it SHARP – Scalable Hierarchical Aggregation and Reduction Protocol. While other networks simply move data between compute elements, SHARP processes and computes data as it traverses the network, effectively turning the network itself into a powerful co-processor that delivers a significant increase in application performance.
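Conceptually, hierarchical in-network reduction replaces a single central aggregation point with a tree: each switch sums the vectors arriving from its children and forwards one combined vector upward, so every link carries exactly one vector per reduction. The sketch below models this under simplifying assumptions of its own (a fixed two-level tree, summation as the reduction operator, hypothetical names such as `Node` and `reduce_tree`); it illustrates the general technique and is not the SHARP protocol or any Mellanox API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """A host (leaf holding a data vector) or a switch (inner node)."""
    name: str
    vector: Optional[List[float]] = None
    children: List["Node"] = field(default_factory=list)


def reduce_tree(node, link_log):
    """Return the element-wise sum of all host vectors below `node`.

    Every child sends exactly one vector to its parent; each such send is
    recorded in link_log as (child_name, parent_name).
    """
    if not node.children:  # a host contributes its own data
        return list(node.vector)
    total = None
    for child in node.children:
        partial = reduce_tree(child, link_log)
        link_log.append((child.name, node.name))
        # The switch combines partial results as they arrive.
        total = partial if total is None else [a + b for a, b in zip(total, partial)]
    return total


if __name__ == "__main__":
    hosts = [Node(f"h{i}", vector=[float(i), 1.0]) for i in range(4)]
    leaf0 = Node("leaf0", children=hosts[:2])
    leaf1 = Node("leaf1", children=hosts[2:])
    root = Node("root", children=[leaf0, leaf1])

    sends = []
    print(reduce_tree(root, sends))  # [6.0, 4.0]
    print(len(sends))                # 6: one vector per link
    print(sum(1 for _, parent in sends if parent == "root"))  # 2
```

The root switch receives two partial sums instead of four raw vectors; with thousands of hosts and a deeper tree, that difference is what relieves the central bottleneck.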

For storage networking we offer SNAP – Software Defined Networking for Accelerated Processing. Our BlueField SmartNIC virtualization presents resources in your cloud as a true local device, not a networked device requiring API changes on the host. SNAP also works on machines with legacy operating systems: the host sees a local device that can transparently reach resources across the network. So with our SmartNIC technologies we can allocate resources from different machines on the network and make them available to the local machine as local storage devices or local storage services. We have a pilot project with major cloud providers right now, and plan to move to production within a year.
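The host-side benefit described here is that application code keeps talking to one unchanged local interface while the backing storage may live elsewhere. The sketch below models that idea with a hypothetical `BlockDevice` abstraction and two interchangeable backends. The class names and the 512-byte block size are assumptions for illustration; they do not correspond to the SNAP or BlueField software interfaces, and the “remote” servers are simulated with in-memory dictionaries.

```python
from abc import ABC, abstractmethod

BLOCK_SIZE = 512  # assumed block size in bytes


class BlockDevice(ABC):
    """What the host sees: read and write fixed-size blocks by address."""

    @abstractmethod
    def read_block(self, lba: int) -> bytes: ...

    @abstractmethod
    def write_block(self, lba: int, data: bytes) -> None: ...


def _check(data: bytes) -> bytes:
    """Reject writes that are not exactly one block long."""
    if len(data) != BLOCK_SIZE:
        raise ValueError("data must be exactly one block")
    return bytes(data)


class LocalDisk(BlockDevice):
    """Stand-in for a physical drive attached to the host."""

    def __init__(self):
        self._blocks = {}

    def read_block(self, lba):
        return self._blocks.get(lba, bytes(BLOCK_SIZE))

    def write_block(self, lba, data):
        self._blocks[lba] = _check(data)


class RemoteBackedDisk(BlockDevice):
    """Hypothetical adapter-side device: same interface, but blocks are
    striped across several remote servers (simulated as dictionaries)."""

    def __init__(self, servers):
        self._servers = servers

    def _server_for(self, lba):
        return self._servers[lba % len(self._servers)]

    def read_block(self, lba):
        return self._server_for(lba).get(lba, bytes(BLOCK_SIZE))

    def write_block(self, lba, data):
        self._server_for(lba)[lba] = _check(data)


def save_record(dev: BlockDevice, lba: int, text: str) -> None:
    """Unchanged application code: works with any BlockDevice."""
    dev.write_block(lba, text.encode().ljust(BLOCK_SIZE, b"\0"))


if __name__ == "__main__":
    for dev in (LocalDisk(), RemoteBackedDisk([{}, {}, {}])):
        save_record(dev, 7, "hello")
        print(type(dev).__name__, dev.read_block(7)[:5])
```

The point of the design is that `save_record` never learns which backend it is using; swapping the device object is the only change.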

Network function virtualization is another example of more efficient use of resources: it is a powerful technique to reduce box clutter by consolidating network functions onto bare metal servers. But it shifts the load onto those servers, consuming computing power that applications could otherwise use and making the data center less efficient from the application perspective. You can, however, offload a significant part of the network virtualisation work to smart network adapters – SmartNICs.

Can it be secure?

But can this be done without compromising security? The traditional data center relied on an M&M security model, hard on the outside and soft on the inside: protection at the periphery of the data center, but none inside. In the cloud, we invite applications we do not control to run on the same machine that runs my security policy. Once malware runs on my compute server, it can take over the security policy, and so take over my data center. The entire data center is taken over by one guy that I invited to run on my machine.

In order to protect our infrastructure, we must ensure that the attacker and the victim are not running on the same computer. We need to change the security model from soft inside to hard inside: every single machine in the data center must be protected.

The same BlueField technology that implements SNAP allows the security policy to be hosted on the BlueField card, which runs its own operating system, separate from the application server. With BlueField we can completely segregate the infrastructure computing and application computing tiers. We can also upgrade the compute server and the infrastructure server completely independently of each other. It is both more secure and much more efficient.

Thinking outside the computer

That is how things are, and how they will increasingly be: a flood of unstructured data demanding machine-learning capabilities, resulting in new applications beyond our dreams.

An estimated $4 trillion revenue opportunity lies out there. It is challenging us to think outside the computer.

Michael Kagan, Chief Technology Officer at Mellanox
