Meta showcases the hardware that will power recommendations for Facebook and Instagram: low-cost RISC-V cores and mainstream LPDDR5 memory are at the heart of its MTIA recommendation inference accelerator

Meta also details the problems it has faced using GPUs

Back in 2023, Meta unveiled its first-generation in-house AI inference accelerator, designed to power the ranking and recommendation models that are key components of Facebook and Instagram.

The Meta Training and Inference Accelerator (MTIA) chip, which can handle inference but not training, was updated in April with double the compute and memory bandwidth of the first-generation part.

At last month's Hot Chips symposium, Meta gave a presentation on its next-generation MTIA and admitted that using GPUs for recommendation engines is not without challenges. The social media giant noted that peak performance doesn't always translate to effective performance, large deployments can be resource-intensive, and capacity constraints are exacerbated by the growing demand for generative AI.

Mysterious memory expansion

Taking this into account, Meta's development goals for the next generation of MTIA include improving performance per TCO and per watt compared to the previous generation, efficiently handling models across multiple Meta services, and enhancing developer efficiency to quickly achieve high-volume deployments.

Meta's latest MTIA gains a significant generation-over-generation boost in performance: GEMM throughput rises 3.5x to 177 TFLOPS at BF16, hardware-based tensor quantization delivers accuracy comparable to FP32, and optimized support for PyTorch Eager Mode enables job launch times under 1 microsecond and job replacement in less than 0.5 microseconds. Additionally, table batched embedding (TBE) optimization speeds up the download and prefetch of embedding indices, achieving 2-3x faster run times compared to the previous generation.

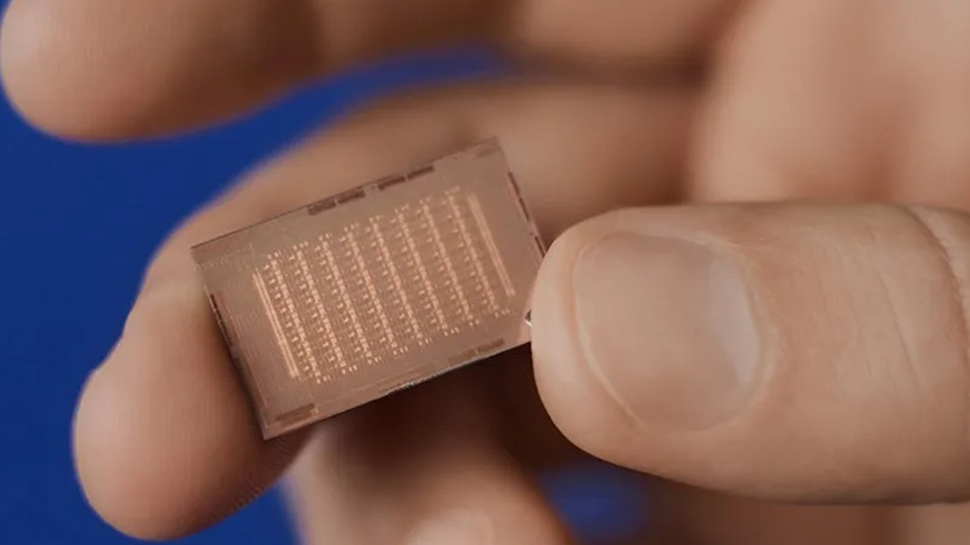

The MTIA chip, built on TSMC's 5nm process, runs at 1.35 GHz with a gate count of 2.35 billion. It offers GEMM performance of 354 TOPS (INT8) and 177 TFLOPS (FP16), and uses 128GB of LPDDR5 memory with a bandwidth of 204.8GB/s, all within a 90-watt TDP.
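To put those headline figures in context, the short Python sketch below derives two back-of-the-envelope ratios from them: peak throughput per watt at TDP, and how many operations the chip would need to perform per byte fetched from LPDDR5 to keep its GEMM units busy. The function names are illustrative, the inputs are the peak numbers quoted above rather than measured results, and any on-chip memory is ignored because no figures for it are given here.

```python
# Peak figures as quoted for the next-generation MTIA (not measured results).
INT8_TOPS = 354.0      # peak GEMM throughput at INT8, in tera-ops per second
FP16_TFLOPS = 177.0    # peak GEMM throughput at FP16, in tera-flops per second
LPDDR5_GBPS = 204.8    # LPDDR5 memory bandwidth, in GB/s
TDP_WATTS = 90.0       # thermal design power, in watts


def peak_per_watt(tera_ops: float, watts: float) -> float:
    """Peak tera-ops per watt at TDP: an upper bound, not real-world efficiency."""
    return tera_ops / watts


def ops_per_byte(tera_ops: float, gb_per_s: float) -> float:
    """Operations needed per byte of LPDDR5 traffic to reach peak compute."""
    return (tera_ops * 1e12) / (gb_per_s * 1e9)


if __name__ == "__main__":
    for label, peak in (("INT8", INT8_TOPS), ("FP16", FP16_TFLOPS)):
        print(
            f"{label}: {peak_per_watt(peak, TDP_WATTS):.2f} tera-ops/W, "
            f"{ops_per_byte(peak, LPDDR5_GBPS):.0f} ops per LPDDR5 byte"
        )
```

On these numbers the chip peaks at just under 4 INT8 TOPS per watt, and a workload would need well over a thousand operations per byte of LPDDR5 traffic to be limited by compute rather than memory. That is one way to read Meta's point that peak performance doesn't always translate to effective performance.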

The Processing Elements are built on RISC-V cores, featuring both scalar and vector extensions, and Meta's accelerator module includes dual CPUs. At Hot Chips 2024, ServeTheHome noticed a Memory Expansion linked to the PCIe switch and the CPUs. When asked if this was CXL, Meta rather coyly said, “it is an option to add memory in the chassis, but it is not being deployed currently.”

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.