ChatGPT threatens education at all levels - here's how it can be stopped

If students can cheat using ChatGPT, then what can deter them from using it?


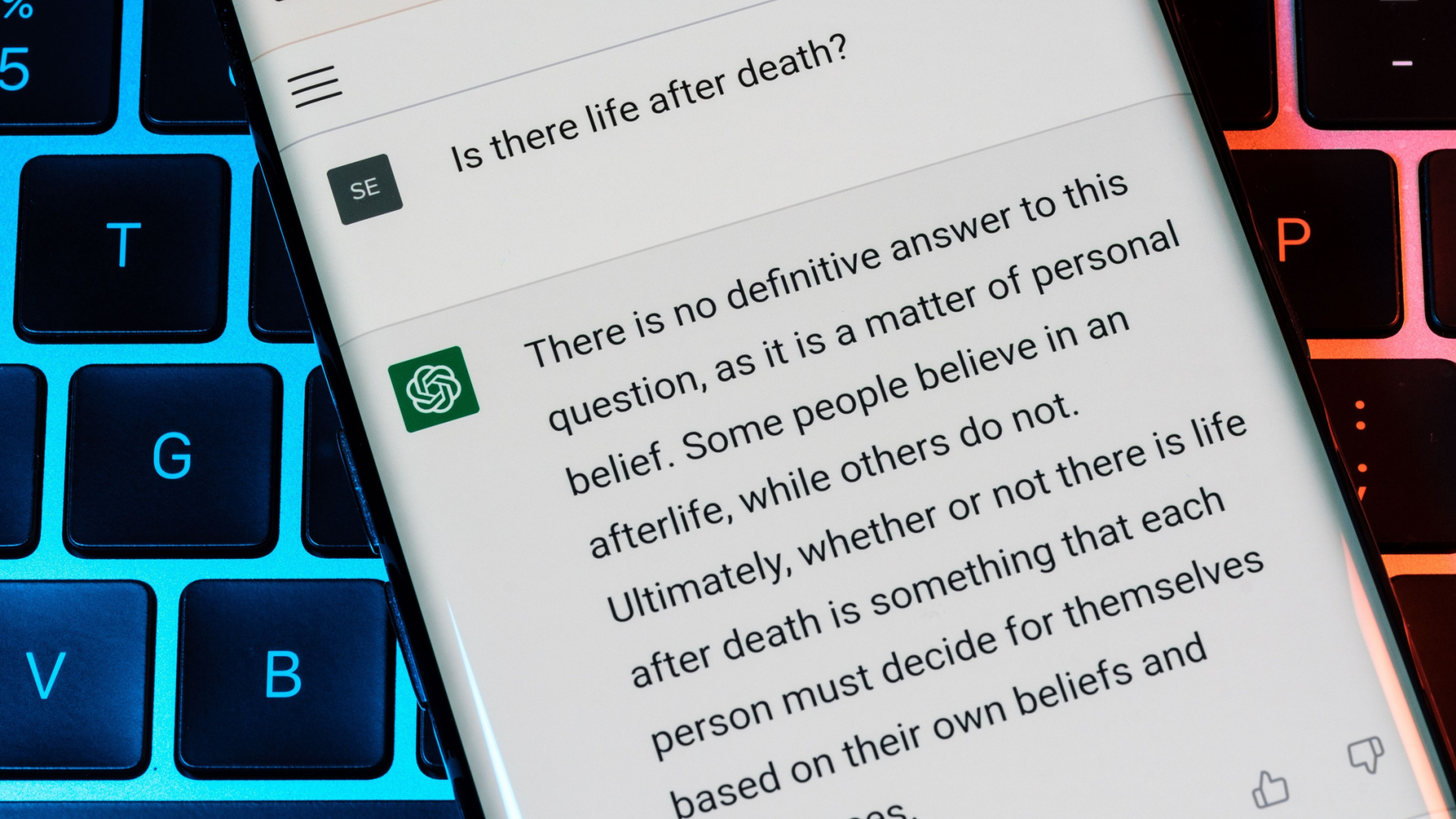

As ChatGPT continues to pique the curiosity of people at all stages of life, it was perhaps only a matter of time before its considerable powers of eloquence were put to mischievous use, especially in the education system.

At the time of going to press, the prominent chatbot and AI writer has been banned in educational institutions around the world, from high schools across America and Australia to universities in France and India, with some university professors having caught their students using ChatGPT to write their entire assignments.

Cheating at school is, of course, nothing new. Plagiarism is likely as old as education itself, with students copying the work of other students, or lifting it directly from textbooks or the internet. What is new, however, is the ease and speed with which content can now be generated thanks to AI writers.

Moreover, the content is in a sense original, since the AI is creating its own phrasing, not merely pasting wholesale from its training data. This makes it harder to tell when a student has passed off generated work as their own, and puts further strain on the already limited resources of most educational institutions worldwide.

ChatGPT will output a stream of seemingly well-written content on just about any topic you care to ask it about. A simple prompt is all that’s required, just like the questions students are given to write about in their assignments.

Numerous detectors, also known as classifiers, are available to assess whether work is genuine, but they do not always provide certainty, and there may still be cases where AI-generated content goes through entirely undetected. But maybe the issue of ChatGPT’s use in academic dishonesty actually shines a light on a broader problem within the education system itself, namely: why are students willing to cheat and use ChatGPT to do their work for them in the first place?

Critical thinking

One of the aforementioned professors who caught their students, Professor Darren Hick of Furman University, noticed that while one of his students’ essays was well written, it contained some glaring errors that the student wouldn’t realistically have made themselves.

This grabbed Professor Hick’s attention, so he decided to run the essay through OpenAI’s own classifier, which gives a percentage score for the likelihood that a piece of content is the work of an AI.

In this case, a score of 99% came back, and after Hick confronted the student, they confessed to cheating.

Perhaps this situation says as much about the curriculum as it does about the student’s moral character. You could argue that there will always be those looking to avoid applying themselves and cheat the system where they can. But if the curriculum fails to engage students in a way that makes them willing to do their own work, then perhaps they aren’t entirely to blame.

It is perhaps more understandable at high school level, where students have no choice but to learn the subjects they are given, rather than what they may want to learn about. Learning by rote is the main method of teaching worldwide, regardless of whether or not the teacher in question wants to teach this way, so it's hardly surprising that students are disenchanted to the point of delegating their entire workloads to a machine.

The kind of education that would get students actually interested in learning was perhaps crystalized best during the 18th century “Age of Enlightenment”. As new discoveries and understandings were dawning in the sciences and other fields of inquiry, thinkers started to emphasize the importance of education, and how best to endow future generations with the skills and knowledge required to live a fulfilled life and contribute meaningfully to society, both culturally and economically.

The educational values espoused included the encouragement of critical and creative thinking: stimulating students’ minds by setting tasks that would let them think independently, yet still be guided by the helping hand of the teacher. This stands in contrast to treating the student’s mind as an empty vessel waiting to be stuffed with facts, with no explanation given as to why they are being made to retain what they are told in the classroom.

If education today followed such Enlightenment values, then maybe we wouldn’t be facing issues with AI writers in education, as it wouldn't even cross the minds of students to use them - who would, if assignments allowed for the pursuit of individual interests and the internal rewards that come from contributing independent insights?

One-trick buffalo

As it stands, when students are given assignments that ask for their own input, some students don’t see the value in doing their own work, and instead resort to using AI like ChatGPT. But how well can it actually do this kind of work for them?

If you ask ChatGPT point blank for an opinion, it tells you very clearly that it has no opinions of its own, and instead gives commonly known interpretations of subjective matters. But are there other ways to try to coax out some semblance of an original opinion?

Since ChatGPT is a large language model, its understanding of the English language and all its rules is pretty thorough, so let's give it a real semantic workout and feed it a well-known sentence in the field of linguistics, designed to illustrate the complexity and ambiguities inherent in language. That sentence is ‘Buffalo buffalo Buffalo buffalo buffalo buffalo Buffalo buffalo.’

For those who have not come across this example before, it is a grammatically correct sentence, employing three distinct meanings of the word ‘buffalo’: the animal, the city in New York, and the verb meaning to bully. The sentence can be parsed in various ways, but one common reading is: ‘Bison from Buffalo, that other bison from Buffalo bully, also bully other bison from Buffalo.’

But rather than simply submitting this sentence to ChatGPT, let's apply it in the form of a question: ‘Is it true that Buffalo buffalo Buffalo buffalo buffalo buffalo Buffalo buffalo?’

Its response: “Yes, it is true. The sentence is a well-known example of how homonyms and homophones can be used to create complex and difficult-to-understand sentences in the English language. The sentence is grammatically correct.”

So at least it did work out that it is an actual sentence, rather than assuming that the animal’s name was just being said eight times. However, by responding confidently with “Yes, it is true”, it is saying that the actual content of that sentence is true, which is misleading, since there is no objective way to verify the claim - maybe some bison in New York state do bully those who themselves are also bullied, but we could never confirm it.

Because it is a well-known sentence in linguistics, and clearly one ChatGPT knows about, it simply regurgitates the facts about the sentence, which you could ascertain from a simple internet search. It isn’t applying knowledge in an intelligent way; it only gives the illusion that it is. It doesn't quite understand more nuanced questions in the way a student easily would.

Fruit of the forbidden tree

But if the assignment is to write a poem for a creative writing assessment, then can ChatGPT help out the devious student here? Yes, it can - but again, only to a certain extent. It has already impressed with examples of its creative expression, such as explaining Einstein's theory of relativity in the form of a poem.

It of course knows all the main forms of poetry, and can construct verse competently in popular styles, such as haikus and iambic pentameter. But what about something more tricky - what about the style of John Milton's Paradise Lost?

With the prompt ‘Write a short poem in the style of Paradise Lost’, this is the first verse that ChatGPT came up with:

In Eden's bowers, where all was bright,

Man walked with God in perfect light.

But Satan, with his envy and pride,

Led man astray, from grace aside.

It looks impressive, even inserting an example of the famous Miltonic syntax in the last line, by inverting the typical ordering of words you would expect in the normal course of the English language. But there is one big problem: Paradise Lost - famously - doesn't rhyme. If a student's knowledge of Milton’s style in his epic poem were being assessed, then they would surely get a big markdown for this error.

However, what is equally troublesome about ChatGPT is its inconsistency. When asked again to ape the style of Milton’s classic, it rightly chose blank verse. So if you're using the system in your poetry assignment, you might want to think twice.

Classifiers

Errors such as these may lead educators, as Professor Hick did, to use a detector to confirm their suspicions. And there are quite a few to choose from at the moment, and almost certainly more will be on their way.

Popular essay submission portal Turnitin is developing its own detector, and Hive claims that its service is more accurate than others on the market, including OpenAI’s very own, a claim some independent testers have supported. It also claims to throw up fewer false positives, which Hive seems to be particularly concerned about.

And for good reason. Because no matter how accurate a detector is, what if a false positive does occur? How would you verify otherwise? With plagiarism, you can find the original source. With ChatGPT you can’t, since the prompt generates a novel response every time, and can change quite significantly with just a few minor adjustments to the phrasing of a prompt. And if students write generically enough - which is entirely possible given the generic nature of most assignments - then it can make the detector uncertain.

Professor Hick had the same worry, arguing that even with a 99% likelihood of work being written by AI, the evidence only ever remains circumstantial, not material; he still felt the need to get an actual confession from the student in question before he could fail their work.

The flipside of fewer false positives is that more AI content slips through the net. Reducing false positives in this way does not necessarily improve a classifier's overall accuracy - it just shifts its errors to the less egregious end of the spectrum.
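To make that trade-off concrete, here is a minimal sketch in Python. The scores and labels are invented purely for illustration, and `count_errors` is a hypothetical helper, not part of any real detector. It assumes a classifier that flags work as AI-written when its score reaches a chosen threshold.

```python
# Hypothetical detector scores (0 to 1) paired with the true author.
# These numbers are made up for illustration only.
samples = [
    (0.05, "human"), (0.20, "human"), (0.40, "human"), (0.70, "human"),
    (0.30, "ai"), (0.60, "ai"), (0.85, "ai"), (0.99, "ai"),
]

def count_errors(threshold):
    """Return (false_positives, false_negatives) when any score at or
    above the threshold is flagged as AI-written."""
    false_positives = sum(
        1 for score, label in samples
        if score >= threshold and label == "human"
    )
    false_negatives = sum(
        1 for score, label in samples
        if score < threshold and label == "ai"
    )
    return false_positives, false_negatives

# Raising the threshold makes the detector more cautious about accusing.
for threshold in (0.5, 0.8):
    fp, fn = count_errors(threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

In this toy data, raising the threshold from 0.5 to 0.8 removes the one false accusation but lets a second AI-written piece through, so the total number of mistakes stays the same. Real detectors will behave differently, but the shape of the trade-off is the same.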

Also, what’s to stop a student from getting ChatGPT to write their work, then tweaking it slightly until it no longer gets flagged by a classifier? This does take some effort, but a student may still find this preferable to writing an entire assignment themselves. And all they’ve really learnt in the process is how a detector is able to distinguish between AI and human writers.

The differences in accuracy between various classifiers might be something of a moot point. No matter what score came back from the classifier, the teacher would still need to verify. If one classifier is more accurate than another, is the difference really going to be that one says 0% and the other says 99%? They all seem to be able to act as a rough guide, and as we’ve already seen from the likes of the aforementioned professors, educators seem to be pretty good at detecting AI work by themselves - a classifier, at best, just confirms what they already know.

Another way to solve the problem

As classifiers develop, though, AI content may get easier to detect. The problem is that AI systems like ChatGPT will continue to advance too, perhaps resulting in a war of escalation between the two. Students may still be able to make use of both to their own ends.

So maybe the answer lies in looking at why students actually use it in the first place, and why they feel so disaffected from their work that they get a chatbot to do it for them. If the curriculum engaged them in a meaningful way, then they wouldn’t want to use AI to cheat. Detectors wouldn’t be needed if the work were interesting and encouraged students to contribute their own insights. What’s more, this kind of work seems to be beyond the capability of AI anyway.

According to Dr Leah Henrickson, the University of Leeds, where she teaches, has started to realize this, and is adjusting its courses to elicit more critical thinking from its students, which will hopefully discourage the misuse of AI for academic dishonesty.

Dr Henrickson does point out, though, that ChatGPT may be able to help legitimately with more basic functions, such as assisting with writing in English for those who do not speak it natively. But to prevent it from overstepping acceptable boundaries in education, maybe a change to the way we educate is a more effective and worthwhile solution.

Perhaps what’s needed instead are assignments that ask students to pursue their own interests, while still ensuring that these are worthwhile endeavors that lead to a better educational outcome for all involved. This will hopefully reduce the temptation to cheat using AI.

Moreover, AI can’t effectively mimic the work such assignments call for, as we have seen, because of its strange errors and absence of original thought. When students try to use it anyway, they are exposed by educators who can spot its use quickly. Once a teacher knows the styles and minds of their individual students, with all their idiosyncrasies, it becomes obvious when AI takes their place. But this can only be determined if assignments are set that align with the values of a good education: stimulating students to learn and bring their own insights to bear on the topics that inspire them.

Lewis Maddison is a Reviews Writer for TechRadar. He previously worked as a Staff Writer for our business section, TechRadar Pro, where he gained experience with productivity-enhancing hardware, ranging from keyboards to standing desks. His area of expertise lies in computer peripherals and audio hardware, having spent over a decade exploring the murky depths of both PC building and music production. He also revels in picking up on the finest details and niggles that ultimately make a big difference to the user experience.