All you need to know about Automata, Micron's revolutionary processor

A CPU in the form of a memory module

Late last year, Micron, a US-based company better known for its memory and storage products, introduced a new processor called Automata that promised a new paradigm for computing.

TechRadar Pro interviewed Paul Dlugosch, Director of Automata Processing Technology at Micron, to find out more about what Automata means for the rest of us and how it compares with conventional processing architectures. This is the first part of a two-part interview that looks more closely at Automata.

TR Pro: To start, what is Automata and how does it differ from existing CPU architectures?

The Automata Processor (AP) is a programmable silicon device capable of performing very high-speed, comprehensive search and analysis of complex, unstructured data streams. The AP enhances traditional computing architectures by providing an alternate processing engine that has the ability to efficiently address unstructured problems and engage vast datasets. The AP is the industry's first non-von Neumann computing architecture and leverages the parallelism found in DRAM technology.

TR Pro: Does its production require a lot of retooling?

No. The AP chip is being fabricated at our existing 300mm DRAM facility in Manassas, Virginia.

TR Pro: What investment or retraining does it require from a developer's perspective?

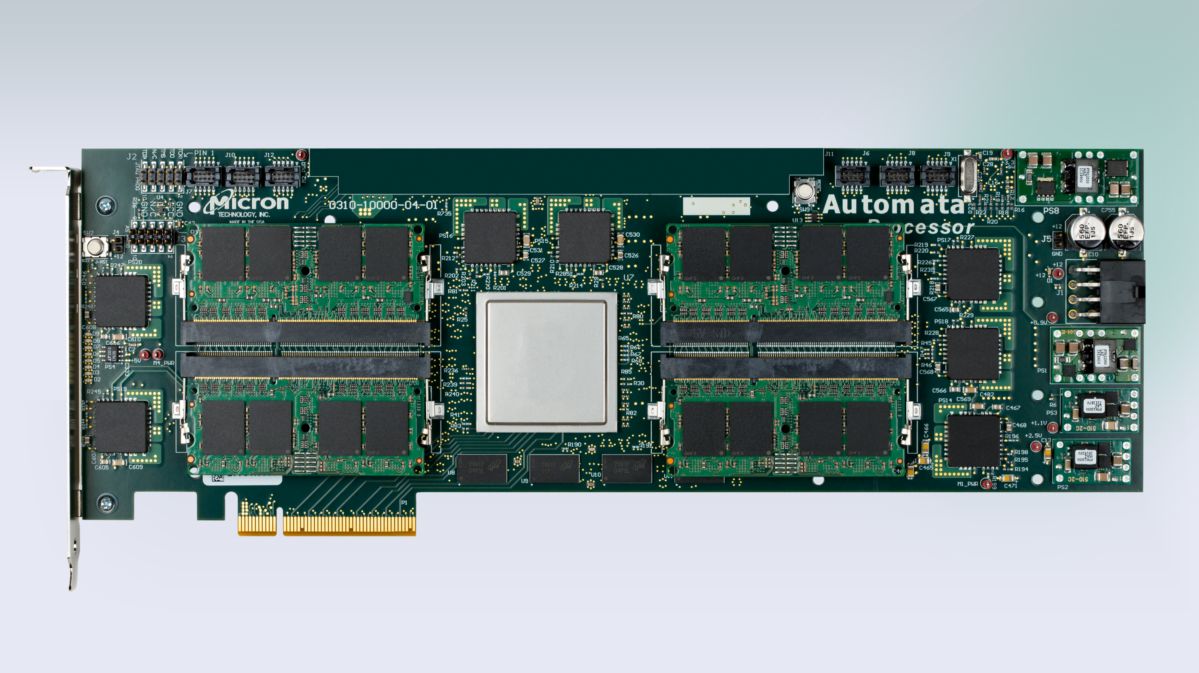

The AP uses a DDR3-like memory interface chosen to simplify the physical/design-in process for system integrators. The AP will be made available as single components or as DIMM modules, enhancing the integration process.

Additionally, Micron has developed a graphical design tool (the AP Workbench), an SDK, and a PCIe board populated with Automata Processor DIMMs that will allow end users to develop and test applications on the AP.


TR Pro: When will the first engineering samples be available? When will mass availability be reached?

We expect to ship our first hardware samples and make the development toolset available in summer 2014.

TR Pro: What would prevent other memory manufacturers from emulating what Micron did?

It is not impossible, but it is unlikely. The AP has been several years in development, and Micron owns many patents on the technology.

TR Pro: What's the next step: a 3D version of Automata? Hybrid solutions (e.g. an on-chip version)?

The next steps for the AP are being developed right now and will be based largely on the feedback we get from first-generation devices. 3D integration is one possibility. Expanding on-chip routing capacity is another possible area of development.

The overall capability of the base processing fabric can be improved and extended in a number of ways and Micron will be considering these opportunities in conjunction with the definition of subsequent generations of the Automata Processor architecture.

TR Pro: The press release mentions millions of tiny processors. How can you cram so many processors into such a tiny space?

Unlike a conventional CPU, the AP is a scalable, two-dimensional fabric composed of thousands to millions of interconnected processing elements, each programmed to perform a targeted task or operation.
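The idea of a fabric of simple, interconnected elements can be illustrated with a small software model. The Python sketch below rests on a simplifying assumption and is not Micron's design or SDK: each hypothetical `Element` responds to a set of input symbols, fires when it is enabled and the current symbol is in its set, and enables its successors for the next symbol. Every input symbol is offered to all candidate elements at once; the software has to loop over them, whereas the hardware fabric is described as doing this work in parallel.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Hypothetical processing element in a simplified automata fabric."""
    symbols: frozenset[str]                 # input symbols this element responds to
    start: bool = False                     # always enabled, so it can begin a match anywhere
    report: bool = False                    # emits a report when it fires
    successors: list[str] = field(default_factory=list)  # elements enabled for the next symbol


def run_fabric(elements: dict[str, Element], stream: str) -> list[tuple[int, str]]:
    """Feed each symbol to every candidate element; return (offset, element) reports."""
    enabled: set[str] = set()               # elements enabled by the previous symbol
    reports: list[tuple[int, str]] = []
    for offset, symbol in enumerate(stream):
        candidates = enabled | {n for n, e in elements.items() if e.start}
        fired = {n for n in candidates if symbol in elements[n].symbols}
        for n in sorted(fired):
            if elements[n].report:
                reports.append((offset, n))
        # Firing elements enable their successors for the next symbol only.
        enabled = {s for n in fired for s in elements[n].successors}
    return reports


# Three elements recognizing "a", then "b", then "c" or "d" (like the regex ab[cd]).
fabric = {
    "a": Element(frozenset("a"), start=True, successors=["b"]),
    "b": Element(frozenset("b"), successors=["cd"]),
    "cd": Element(frozenset("cd"), report=True),
}
print(run_fabric(fabric, "xabcabdab"))   # → [(3, 'cd'), (6, 'cd')]
```

Each element does very little on its own; the useful behaviour comes from how the elements are wired together, which is why the number of elements, rather than the power of each one, sets the capacity of such a fabric.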

Whereas conventional parallelism consists of a single instruction applied to many chunks of data, the AP focuses a vast number of instructions at a targeted problem, thereby delivering unprecedented performance.
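The contrast between the two kinds of parallelism can be sketched in a few lines of Python. `simd_style` applies one operation to many data items, while `match_all` runs one small automaton per pattern over a single shared stream, so each symbol is read once no matter how many patterns are being tracked. Both functions are hypothetical illustrations, and restricting patterns to literal strings is a simplifying assumption.

```python
def simd_style(values: list[int], scale: int) -> list[int]:
    """Conventional data parallelism: one operation applied to many data items."""
    return [v * scale for v in values]


def match_all(patterns: dict[str, str], stream: str) -> list[tuple[int, str]]:
    """Many automata, one stream: each symbol is read once and offered to every automaton.

    Each (non-empty) pattern is a literal string; a state k means "the first k
    characters have matched". Hardware would update all automata in parallel;
    this loop does it sequentially.
    """
    active: dict[str, set[int]] = {name: set() for name in patterns}
    hits: list[tuple[int, str]] = []
    for offset, symbol in enumerate(stream):
        for name, pat in patterns.items():
            # State 0 is always available, so a match may begin at any offset.
            advanced = {k + 1 for k in active[name] | {0} if pat[k] == symbol}
            if len(pat) in advanced:
                hits.append((offset, name))      # pattern completed at this offset
                advanced.discard(len(pat))
            active[name] = advanced
    return hits


print(match_all({"cat": "cat", "at": "at", "tac": "tac"}, "tacat"))
# → [(2, 'tac'), (4, 'cat'), (4, 'at')]
```

Adding another pattern adds another automaton, not another pass over the data; that is the sense in which many "instructions" are focused on one problem.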

TR Pro: How would Automata work as part of a heterogeneous system?

To be clear, the Automata Processor is not a standalone processor. The first-generation Automata Processor requires some type of CPU in the system. This CPU is required to manage data streams flowing into and out of the Automata Processor array. Keep in mind that the first-generation Automata Processor uses a physical interface based on the DDR3 protocol specification.

This means that the way an Automata Processor interfaces to a 'controller' is very similar to how a DDR3 memory component (or DIMM module) would interface to a CPU. The Automata Processor array must have information streamed to it (DDR3 writes) and, periodically, the Automata Processor must provide analytic results (DDR3 reads). In a complete system, some type of CPU, NPU or perhaps an FPGA must perform these read and write operations.
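The controller's role described above (streaming input in with writes and periodically collecting results with reads) can be modeled as a simple host loop. In this Python sketch, `MockAutomataDevice`, `write`, `read_results` and `host_loop` are hypothetical names that do not come from Micron's SDK, and the mock device merely searches for one literal byte pattern so that the data flow can be shown end to end.

```python
from collections import deque


class MockAutomataDevice:
    """Hypothetical stand-in for an AP attached over a DDR3-style interface.

    write() models the host streaming input to the device (DDR3 writes);
    read_results() models the host collecting results (DDR3 reads).
    For illustration only, the "analysis" is a search for one literal byte pattern.
    """

    def __init__(self, pattern: bytes):
        if not pattern:
            raise ValueError("pattern must be non-empty")
        self.pattern = pattern
        self.offset = 0          # number of bytes streamed so far
        self.tail = b""          # last len(pattern) - 1 bytes, to catch matches that span writes
        self.pending = deque()   # end offsets of matches not yet read by the host

    def write(self, chunk: bytes) -> None:
        buf = self.tail + chunk
        base = self.offset - len(self.tail)   # stream offset of buf[0]
        i = buf.find(self.pattern)
        while i != -1:                        # record every (possibly overlapping) match
            self.pending.append(base + i + len(self.pattern) - 1)
            i = buf.find(self.pattern, i + 1)
        self.offset += len(chunk)
        keep = len(self.pattern) - 1
        self.tail = buf[-keep:] if keep else b""

    def read_results(self) -> list[int]:
        results = list(self.pending)
        self.pending.clear()
        return results


def host_loop(device: MockAutomataDevice, data: bytes, chunk_size: int, poll_every: int) -> list[int]:
    """Controller side: stream data in chunks and poll for results periodically."""
    results: list[int] = []
    for n, start in enumerate(range(0, len(data), chunk_size), start=1):
        device.write(data[start:start + chunk_size])      # stream input (write)
        if n % poll_every == 0:
            results.extend(device.read_results())         # periodic read-back
    results.extend(device.read_results())                 # collect anything still pending
    return results


print(host_loop(MockAutomataDevice(b"abab"), b"xxababab", chunk_size=3, poll_every=2))
# → [5, 7]
```

Polling less often means fewer reads but more buffered results on the device side; where to strike that balance would depend on the real hardware, which this mock does not model.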

This, however, isn't really the true essence of heterogeneous computing. The most meaningful definition of heterogeneous computing involves different types of processors being used in the actual computational process (i.e. more than just data movement). The classic example is a heterogeneous environment composed of traditional (x86) CPUs and GPGPUs.

In many instances of this type of heterogeneous computing, both the CPU and GPGPU are tasked with jobs that are uniquely aligned with their intrinsic processing capability. The same situation exists when considering the Automata Processor. The AP provides a unique value to system architects in its ability to implement massive thread parallelism (individual automatons) in the processing fabric.

This parallelism can be used to solve difficult problems in a variety of applications, but these applications often require other types of processing that the Automata Processor may not be well suited for. For example, consider video analytics, where pattern recognition is often a critical part of the processing pipeline. The Automata Processor may provide an ideal solution for this part of the pipeline.

Video analytics, however, often involves floating-point operations on the video (pixel) stream. In this case, a CPU or GPU might be tasked with the floating-point operations while the Automata Processor is used for massively parallel pattern recognition. You can think of this as a CPU/GPGPU performing pre- and post-processing of the information fed to and from the Automata Processor.
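The division of work in the video-analytics example can be sketched as a three-stage pipeline. This is a hypothetical illustration: the per-frame brightness values, the thresholds and the `LH+L` "brief flash" pattern are all assumptions, and Python's `re` module stands in for the Automata Processor in the pattern-recognition stage.

```python
import re


def preprocess(frame_brightness: list[float], low: float = 0.3, high: float = 0.7) -> str:
    """CPU/GPU stage: floating-point work that reduces each frame to one symbol."""
    symbols = []
    for b in frame_brightness:
        if b < low:
            symbols.append("L")      # dark frame
        elif b > high:
            symbols.append("H")      # bright frame
        else:
            symbols.append("M")      # mid-brightness frame
    return "".join(symbols)


def recognize(symbols: str, pattern: str = r"LH+L") -> list[tuple[int, int]]:
    """Pattern-recognition stage (the AP's role); a regular expression stands in here.

    Returns (first_frame, last_frame) for each non-overlapping match.
    """
    return [(m.start(), m.end() - 1) for m in re.finditer(pattern, symbols)]


def postprocess(spans: list[tuple[int, int]], fps: float) -> list[tuple[float, float]]:
    """CPU stage: convert frame indices back into timestamps in seconds."""
    return [(first / fps, last / fps) for first, last in spans]


brightness = [0.1, 0.9, 0.95, 0.2, 0.5, 0.1, 0.8, 0.1]   # hypothetical per-frame values
spans = recognize(preprocess(brightness))
print(spans)                       # → [(0, 3), (5, 7)]
print(postprocess(spans, fps=10.0))
```

Only the middle stage would move to the AP; the floating-point thresholding before it and the conversion back to timestamps after it stay on the CPU or GPU.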

Désiré has been musing and writing about technology during a career spanning four decades. He dabbled in website builders and web hosting when DHTML and frames were in vogue and started writing about the impact of technology on society just before the start of the Y2K hysteria at the turn of the last millennium.