A new hybrid approach to photorealistic neural avatars

Generative AI live photorealistic avatars

Neural avatars have emerged as a new technology for interactive remote presence. The technology is set to influence applications across video conferencing, mixed-reality frameworks, gaming, and the metaverse.

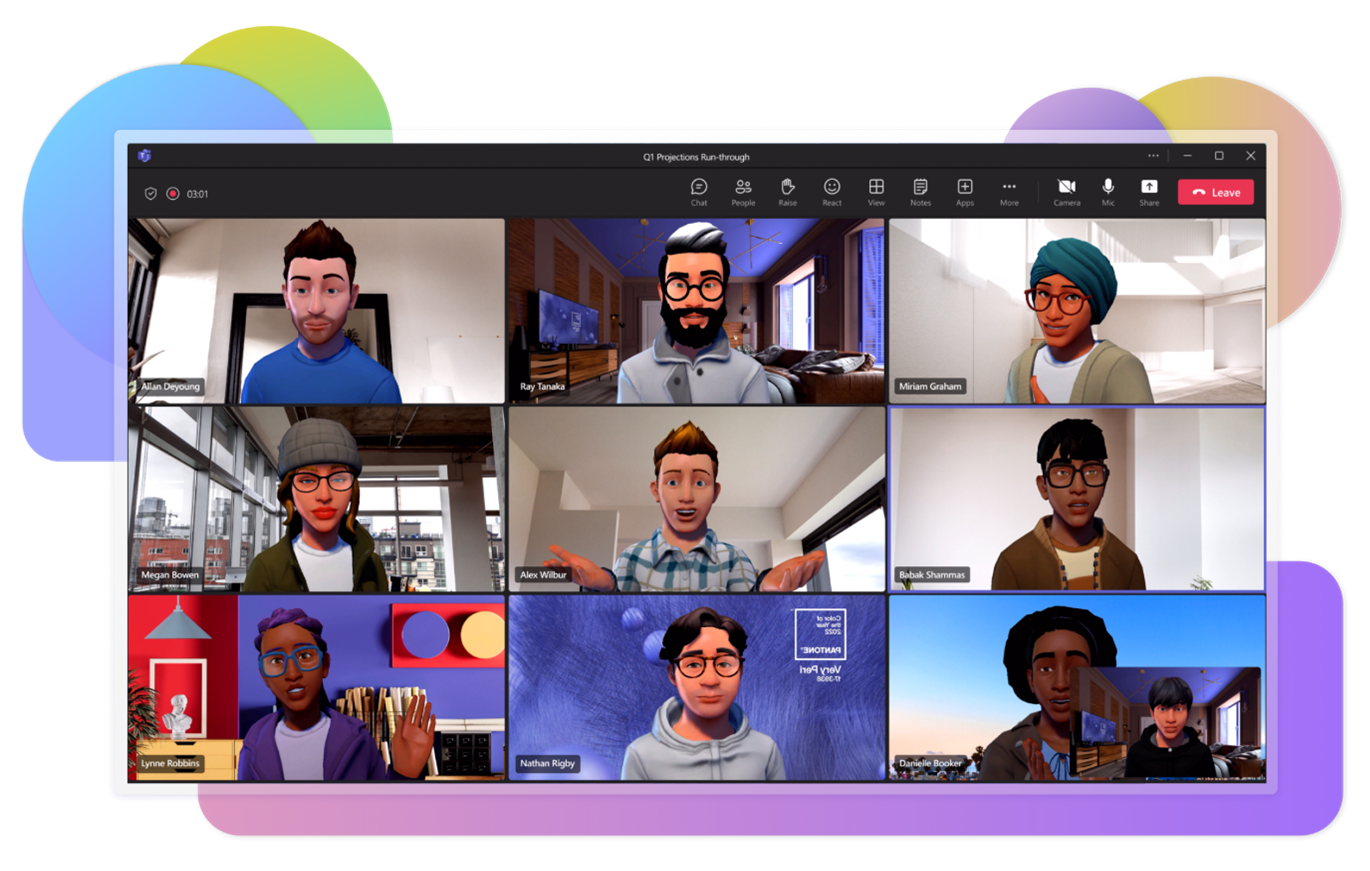

However, at present the technology can feel limited. Current offerings fall into two camps: cartoon representations of virtual speakers, such as Mesh avatars for Microsoft Teams, and experimental prototypes of photorealistic neural rendering, such as NVIDIA Maxine video compression and Meta's Pixel Codec Avatars.

In both categories, limitations in rendering a speaker's intricate expressions, gestures and body movements severely reduce the value of remote presence and visual communication. It's widely accepted that the majority of human communication is non-verbal. We evolved to be very astute observers of human faces and body movements. We use subtle expressions, hand gestures, and body language to convey trust and meaning, and to interpret other people's emotions and experiences. All these details matter. So, when poor rendering fails to reflect reality, many users may simply prefer not to use video at all.

The challenge, then, is to find a new way to apply advanced AI tools to genuine, engaging remote presence applications.

After almost two years of research, deep-tech video delivery company iSize observed that none of the 2D and 3D photorealistic neural rendering frameworks for human speakers that offer substantial compression (in other words, 2x or more compared with conventional video codecs) can guarantee faithful representation of features such as the eyes and mouth, or of certain expressions and gestures.

Due to the enormous diversity in facial features and expressions, the firm estimated it would take 1000x more complexity in neural rendering to get such intricate details right. And even then, there is no guarantee the result would be free of uncanny valley artifacts when deployed at scale for millions of users.

Generative AI and photorealistic avatars: How does it work?

At NVIDIA's GTC 2023 conference, the company presented its latest generative AI live photorealistic avatar, which delivers a photorealistic experience at a lower bitrate than standard video codecs. The substantial bitrate reduction leads to 5x lower video latency and transceiver power, uninterrupted remote presence under poor wireless signal conditions, and significantly better Quality of Experience. It could also mean increased user engagement time for gaming and metaverse applications.

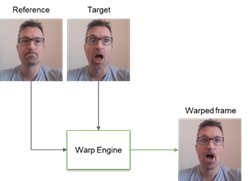

The approach still uses a custom pre-trained neural warp engine, but one made even simpler and faster than comparable approaches. On the encoder side, this neural engine generates a general representation of the speaker's target video frame (starting from a reference frame), using warp information that can be very compact: around 200 bits per frame. Naturally, uncanny valley issues can creep in around the mouth and eye regions, as seen in Fig. 1.
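To put the warp payload in perspective, the arithmetic below converts the quoted figure of around 200 bits per frame into a bitrate. The frame rates are assumptions chosen for illustration, and the result covers only the warp information, not any frames or regions that are sent through a conventional encoder.

```python
# Back-of-the-envelope bitrate of the warp side information alone.
# 200 bits/frame is the approximate figure quoted in the article; the frame
# rates below are illustrative assumptions, not reported BitGen2D settings.
WARP_BITS_PER_FRAME = 200


def warp_bitrate_kbps(bits_per_frame: int, fps: float) -> float:
    """Return the bitrate in kilobits per second (1 kbps = 1000 bit/s)."""
    return bits_per_frame * fps / 1000.0


for fps in (25, 30, 60):
    print(f"{fps} fps -> {warp_bitrate_kbps(WARP_BITS_PER_FRAME, fps):.1f} kbps")
```

At 30 frames per second, for example, 200 bits per frame works out to 6 kbps for the warp information.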

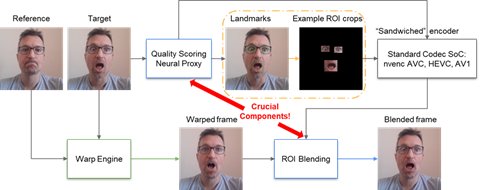

Next, however, a neural quality-scoring proxy assesses the expected warp quality before the warp actually happens. It then decides between two options:

Either it predicts that the warp will fail in a major way, in which case the entire frame is sent to a ‘sandwiched’ encoding engine such as an HEVC or AV1 encoder.

Or it extracts only selected region-of-interest components, such as the eyes and mouth, and sends just these components to the sandwiched encoder.
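The decision logic above can be sketched as follows. This is a minimal illustration under stated assumptions, not iSize's implementation: the proxy's output is modelled as a single hypothetical `predicted_quality` score in [0, 1], and `FAIL_THRESHOLD`, `DEFAULT_ROIS` and `route_frame` are hypothetical names and values.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    FULL_FRAME = "full_frame"  # whole frame goes to the sandwiched codec (e.g. HEVC/AV1)
    ROI_ONLY = "roi_only"      # frame is warped; only the ROIs go to the codec


@dataclass(frozen=True)
class Decision:
    route: Route
    rois: tuple[str, ...] = ()


# Hypothetical values: the article does not publish the proxy's score scale,
# its thresholds, or the exact set of regions it extracts.
FAIL_THRESHOLD = 0.5
DEFAULT_ROIS = ("left_eye", "right_eye", "mouth")


def route_frame(predicted_quality: float, rois: tuple[str, ...] = DEFAULT_ROIS) -> Decision:
    """Choose a coding path BEFORE the warp is run.

    predicted_quality is a hypothetical proxy output in [0, 1];
    higher means the warp is expected to look better.
    """
    if predicted_quality < FAIL_THRESHOLD:
        # Major warp failure expected: fall back to conventional coding.
        return Decision(Route.FULL_FRAME)
    # Warp the bulk of the frame and send only the hard regions.
    return Decision(Route.ROI_ONLY, tuple(rois))
```

For example, `route_frame(0.2)` returns a `FULL_FRAME` decision with no ROIs, while `route_frame(0.9)` returns `ROI_ONLY` with the three default regions. Because the routing runs before any warping, no work has to be discarded when the full-frame path is chosen.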

Then, a region-of-interest (ROI) blending engine seamlessly blends the ROI components with the warp output, and the stitched result is displayed.
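One common way to achieve seamless ROI blending is alpha compositing with a feathered mask, so the weights fade from the decoded region into the warped frame instead of switching abruptly at the ROI border. The sketch below assumes single-channel frames stored as lists of rows, a rectangular ROI, and a decoded ROI already placed on a frame-sized canvas with a margin covering the feather band. The article does not say which blending method BitGen2D uses, and `feathered_alpha` and `blend_roi` are hypothetical helpers.

```python
from __future__ import annotations


def feathered_alpha(h: int, w: int, box: tuple[int, int, int, int], feather: int) -> list[list[float]]:
    """Blend weight per pixel: 1.0 inside the ROI box, fading linearly to 0.0
    over `feather` pixels outside it (Chebyshev distance to the box).

    box = (top, left, bottom, right), with bottom and right exclusive.
    """
    top, left, bottom, right = box
    alpha = [[0.0] * w for _ in range(h)]
    for y in range(h):
        dy = max(top - y, 0, y - (bottom - 1))  # rows outside the box
        for x in range(w):
            dx = max(left - x, 0, x - (right - 1))  # columns outside the box
            d = max(dx, dy)
            alpha[y][x] = max(0.0, 1.0 - d / (feather + 1))
    return alpha


def blend_roi(warped, roi_canvas, box, feather=2):
    """Composite the decoded ROI over the warped frame with a feathered mask.

    Assumes both inputs are frame-sized single-channel images (lists of rows)
    and that roi_canvas holds valid decoded pixels inside the box plus the
    feather margin; pixels further out get weight 0 and are ignored.
    """
    h, w = len(warped), len(warped[0])
    alpha = feathered_alpha(h, w, box, feather)
    return [
        [alpha[y][x] * roi_canvas[y][x] + (1.0 - alpha[y][x]) * warped[y][x] for x in range(w)]
        for y in range(h)
    ]
```

Blending an all-zero 5x5 warped frame with an ROI canvas of 100s around a single-pixel box `(2, 2, 3, 3)` with `feather=1` gives 100 at the centre, 50 on the eight surrounding pixels, and 0 further out. A production system would more likely use a smoother mask and operate on colour frames, but the compositing principle is the same.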

The output avoids the uncanny valley problems described above. This framework is called BitGen2D, as it bridges the gap between a generative avatar engine and a conventional video encoder. It's a hybrid approach that offloads all the difficult parts to the external encoder, while leaving the bulk of the frame to be warped by the neural engine.

The crucial components of this framework are the quality-scoring proxy and the ROI blending. BitGen2D deliberately does not score quality after blending, because doing so a posteriori would increase the delay.

That is, whenever a failure was detected, the system would have to roll back the result and send the frame to the video encoder instead. Getting the quality scoring to work well in conjunction with the warp and blending is therefore key. Furthermore, deepfakes cannot be created, as the framework operates directly on elements of the speaker's own face.
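The latency argument for scoring a priori can be made concrete with a simple sequential cost model. All stage timings below are hypothetical placeholders, and the model ignores pipelining; it only illustrates that scoring after blending costs extra exactly when the warp fails, because the warp, ROI coding and blending work is thrown away before the full frame is encoded.

```python
# Hypothetical per-stage costs in milliseconds, purely illustrative:
# the article reports no stage timings for BitGen2D.
COST_MS = {"score": 2.0, "warp": 6.0, "roi_encode": 3.0, "blend": 1.0, "full_encode": 10.0}


def a_priori_latency(warp_fails: bool, c: dict = COST_MS) -> float:
    """Score the expected warp quality first, then run exactly one path."""
    if warp_fails:
        return c["score"] + c["full_encode"]
    return c["score"] + c["warp"] + c["roi_encode"] + c["blend"]


def a_posteriori_latency(warp_fails: bool, c: dict = COST_MS) -> float:
    """Warp, code the ROIs and blend first, then score the blended result;
    on failure, discard it and encode the full frame instead."""
    t = c["warp"] + c["roi_encode"] + c["blend"] + c["score"]
    if warp_fails:
        t += c["full_encode"]  # the discarded work has already been paid for
    return t


for fails in (False, True):
    print(f"warp fails={fails}: a priori {a_priori_latency(fails)} ms, "
          f"a posteriori {a_posteriori_latency(fails)} ms")
```

With these placeholder costs, both orderings take 12 ms when the warp succeeds, but on a failed warp the a priori path takes 12 ms against 22 ms for the a posteriori path.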

BitGen2D provides high reliability, accurately rendering the various events that can happen during a video call, such as hand movements and foreign objects entering the frame. It's also user-agnostic, since it doesn't require any fine-tuning on a specific identity, which makes BitGen2D plug-and-play for any user. It can also withstand changes in appearance, such as a change of clothes, accessories, or haircut, or a switch of speaker during a live call. The method is actively being extended to 3D data using neural radiance fields (NeRFs) and octrees.

During the GTC Developer conference keynote address, NVIDIA CEO Jensen Huang said, “Generative AI’s incredible potential is inspiring virtually every industry to reimagine its business strategies and the technology required to achieve them.”

AI solutions, then, are here to stay and are already transforming how we live, work and play in both physical and virtual spaces.

Yiannis Andreopoulos, CTO, iSize.