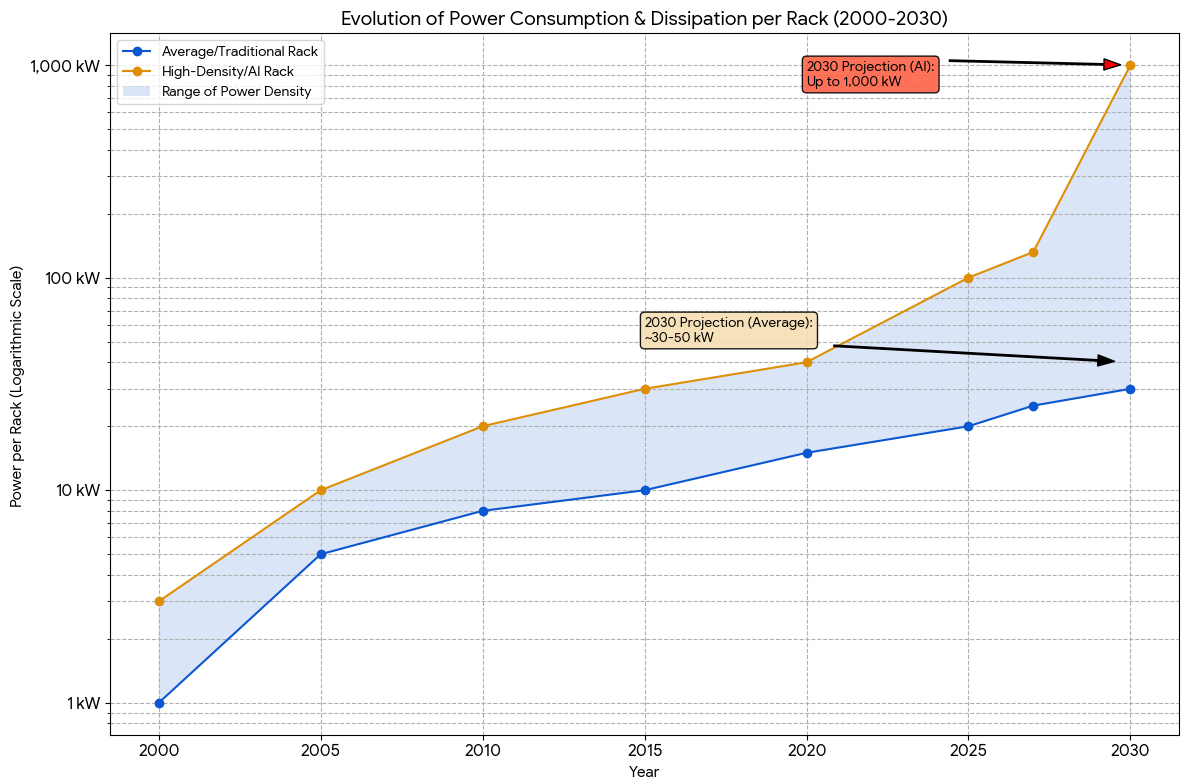

This graph alone shows how global AI power consumption is getting out of hand very quickly - and it's not just about hyperscalers or OpenAI

AI racks could consume 20 to 30 times the energy of traditional racks by 2030

- AI-focused racks are projected to consume up to 1MW each by 2030

- Average racks expected to rise steadily to 30-50kW within the same period

- Cooling and power distribution becoming strategic priorities for future data centers

Long considered the basic unit of a data center, the rack is being reshaped by the rise of AI, and a new graph (above) from Lennox Data Centre Solutions shows how quickly this change is unfolding.

Racks once consumed only a few kilowatts each, but projections from the firm suggest that by 2030 an AI-focused rack could draw 1MW, a scale once reserved for entire facilities.

Average data center racks are expected to reach 30-50kW in the same period, reflecting a steady climb in compute density, and the contrast with AI workloads is striking.

New demands for power delivery and cooling

According to projections, a single AI rack can use 20 to 30 times the energy of its general-purpose counterpart, creating new demands for power delivery and cooling infrastructure.
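As a rough check on that multiple, the projected figures can simply be divided. The Python sketch below assumes the 1MW AI rack and the 30-50kW average rack quoted for 2030; the constant and function names are illustrative and do not come from Lennox.

```python
# Projected 2030 figures quoted in the article, in kilowatts.
AI_RACK_KW = 1000.0                    # 1MW AI-focused rack
AVERAGE_RACK_KW_RANGE = (30.0, 50.0)   # average rack, low and high end


def rack_power_ratio(ai_kw: float, average_kw: float) -> float:
    """Return how many times more power the AI rack draws."""
    return ai_kw / average_kw


# The smallest multiple comes from the largest average rack, and vice versa.
lowest_multiple = rack_power_ratio(AI_RACK_KW, AVERAGE_RACK_KW_RANGE[1])
highest_multiple = rack_power_ratio(AI_RACK_KW, AVERAGE_RACK_KW_RANGE[0])

print(f"AI rack draws {lowest_multiple:.0f}x to {highest_multiple:.0f}x an average rack")
```

On these numbers the multiple lands between about 20 and 33, which is broadly in line with the "20 to 30 times" projection.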

Ted Pulfer, director at Lennox Data Centre Solutions, said cooling has become central to the industry.

“Cooling, once ‘part of’ the supporting infrastructure, has now moved to the forefront of the conversation, driven by increasing compute densities, AI workloads and growing interest in approaches such as liquid cooling,” he said.

Pulfer described the level of industry collaboration now taking place. “Manufacturers, engineers and end users are all working more closely than ever, sharing insights and experimenting together both in the lab and in real-world deployments. This hands-on cooperation is helping to tackle some of the most complex cooling challenges we’ve faced,” he said.

The aim of delivering 1MW of power to a rack is also reshaping how systems are built.

“Instead of traditional lower-voltage AC, the industry is moving towards high-voltage DC, such as +/-400V. This reduces power loss and cable size,” Pulfer explained.
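The reasoning behind that claim is basic circuit arithmetic: for a fixed power, current falls as voltage rises, and resistive loss in a cable scales with the square of the current. The sketch below is a simplified DC-only model, not a description of any real deployment. It assumes that +/-400V means 800V between the two poles, compares it with a hypothetical 400V bus, and uses a made-up cable resistance of 1 milliohm; it ignores AC effects such as power factor and three-phase wiring.

```python
def bus_current_amps(power_watts: float, bus_volts: float) -> float:
    """Current needed to deliver a given power at a given voltage (I = P / V)."""
    return power_watts / bus_volts


def resistive_loss_watts(power_watts: float, bus_volts: float, cable_ohms: float) -> float:
    """Power dissipated in the cable itself (P_loss = I^2 * R)."""
    current = bus_current_amps(power_watts, bus_volts)
    return current ** 2 * cable_ohms


RACK_POWER_W = 1_000_000.0  # the 1MW rack from the article
CABLE_OHMS = 0.001          # hypothetical cable resistance, for illustration only
LOW_BUS_V = 400.0           # hypothetical lower-voltage comparison point
HIGH_BUS_V = 800.0          # assumption: +/-400V read as 800V pole to pole

for volts in (LOW_BUS_V, HIGH_BUS_V):
    amps = bus_current_amps(RACK_POWER_W, volts)
    loss = resistive_loss_watts(RACK_POWER_W, volts, CABLE_OHMS)
    print(f"{volts:.0f}V bus: {amps:.0f}A, about {loss:.0f}W lost in the cable")
```

Under these assumptions, doubling the voltage halves the current and cuts the cable loss to a quarter. Lower current is also what allows thinner conductors.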

“Cooling is handled by facility ‘central’ CDUs which manage the liquid flow to rack manifolds. From there, the fluid is delivered to individual cold plates mounted directly on the servers’ hottest components.”
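To give a sense of the liquid flow such a loop might need, the standard heat-transfer relation Q = m × c × ΔT can be rearranged for mass flow. The sketch below is a back-of-envelope estimate, not a figure from Lennox: it assumes plain water as the coolant, a 10 K temperature rise across the rack, and that all 1MW of heat leaves through the liquid. Real systems typically use treated water or glycol mixes and different temperature targets.

```python
# Specific heat of liquid water, in joules per kilogram per kelvin.
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0


def coolant_mass_flow_kg_per_s(
    heat_watts: float,
    delta_t_kelvin: float,
    specific_heat: float = WATER_SPECIFIC_HEAT_J_PER_KG_K,
) -> float:
    """Mass flow needed to carry away heat_watts with a given coolant temperature rise."""
    if delta_t_kelvin <= 0:
        raise ValueError("delta_t_kelvin must be positive")
    return heat_watts / (specific_heat * delta_t_kelvin)


RACK_HEAT_W = 1_000_000.0  # assumption: the full 1MW ends up as heat in the liquid
COOLANT_RISE_K = 10.0      # hypothetical inlet-to-outlet temperature rise

flow = coolant_mass_flow_kg_per_s(RACK_HEAT_W, COOLANT_RISE_K)
print(f"Roughly {flow:.1f} kg of water per second for one rack")
```

With these inputs the answer is a little under 24 kg of water per second for a single rack, which helps explain why flow management sits with facility-level CDUs.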

Most data centers today rely on cold plates, but the approach has limits. Microsoft has been testing microfluidics, where tiny grooves are etched into the back of the chip itself, allowing coolant to flow directly across the silicon.

In early trials, this removed heat up to three times more effectively than cold plates, depending on workload, and reduced GPU temperature rise by 65%.

By combining this design with AI that maps hotspots across the chip, Microsoft was able to direct coolant with greater precision.

Although hyperscalers could dominate this space, Pulfer believes that smaller operators still have room to compete.

“At times, the volume of orders moving through factories can create delivery bottlenecks, which opens the door for others to step in and add value. In this fast-paced market, agility and innovation continue to be key strengths across the industry,” he said.

What is clear is that power and heat rejection are now central issues, no longer secondary to compute performance.

As Pulfer puts it, “Heat rejection is essential to keeping the world’s digital foundations running smoothly, reliably and sustainably.”

By the end of the decade, the shape and scale of the rack itself may determine the future of digital infrastructure.

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he has written for most of the UK’s PC magazines, and launched, edited and published a number of them too.
