Nvidia GTC 2022 keynote: all the latest news, releases and more

Nvidia GTC 2022 keynote has wrapped, so here's all the news

Nvidia GTC 2022 is underway, with the computing giant announcing a raft of software and hardware news, upgrades and releases.

CEO Jensen Huang had a whole host of new additions to run through during his keynote, including new data center chips, AI advancements and a deep dive into the Omniverse.

So if you missed anything, read on for all the latest updates from the Nvidia GTC 2022 keynote.

As is tradition, Nvidia kicked off the keynote with a demonstration of how advanced its AI capability is.

The keynote started with a sweeping dive through a digital replica of the company's HQ, with music also composed by its AI.

CEO Jensen Huang then appeared, clad in trademark leather jacket, introducing a video of AI use cases, from healthcare to construction, for the company's traditional "I am AI" presentation.

Huang went on to discuss how Nvidia's AI platform is a common sight around the world, with three million developers and 10,000 start-ups using the technology.

Huang noted that in the past decade, Nvidia's hardware and software have delivered a million-x speed increase in AI technology.
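To put that million-x figure in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the gain compounded at a steady rate over exactly ten years, which is our simplification and not something Huang claimed, and the function names are ours.

```python
import math


def annual_factor(total_gain: float, years: int) -> float:
    """Yearly multiplier implied by a total gain, assuming steady compounding."""
    return total_gain ** (1.0 / years)


def doubling_time_years(total_gain: float, years: int) -> float:
    """Time for performance to double at that steady yearly multiplier."""
    return math.log(2) / math.log(annual_factor(total_gain, years))


if __name__ == "__main__":
    factor = annual_factor(1_000_000, 10)
    doubling = doubling_time_years(1_000_000, 10)
    print(f"Implied yearly speed-up: about {factor:.2f}x")
    print(f"Implied doubling time: about {doubling * 12:.1f} months")
```

Under that assumption, a million-x decade works out to roughly a 4x improvement every year, or a doubling about every six months.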

Next was a fresh look at Nvidia's "Earth 2" digital twin, which among other things uses in-depth weather forecasting data to help simulate climate and atmosphere fields.

The models this data creates could be a vital step towards dealing with climate change, Nvidia notes, including predicting storms, flooding and other natural disasters.

Now it's on to hardware - this is where Nvidia really leads the way.

First up is a look at transformers, where Huang notes that the technology is ideal for machine learning tasks such as natural language processing, making live translation a reality for users everywhere.

But when it comes to the next step, "the conditions are primed for the next breakthroughs in science," Huang says, with virtual models, quantum computing and even media set for transformation.

"AI is racing forward in every direction," he says.

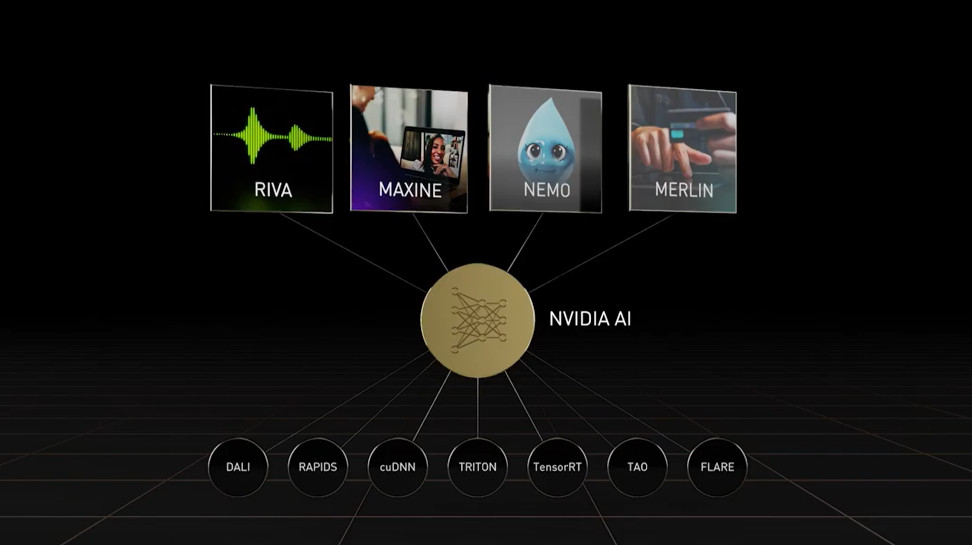

Nvidia's AI libraries sweep through the whole technology landscape, Huang notes, highlighting Riva, Maxine, Nemo and Merlin among the headline acts.

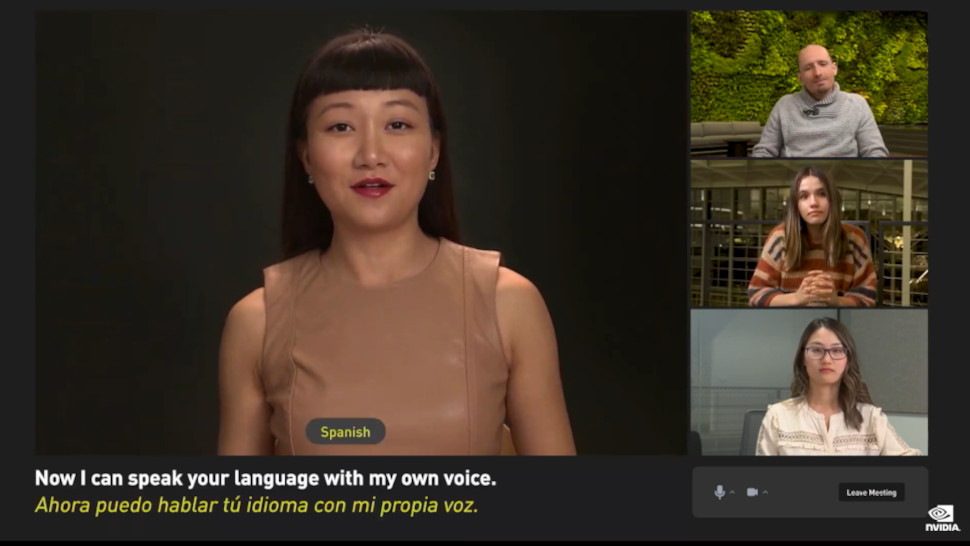

"AI will reinvent video conferencing", Huang says, introducing a demo of Maxine, the company's library for AI video calls.

It's certainly pretty incredible to see, with the library able to re-focus a speaker's eyes so that they are central, and then give them the superpower of suddenly speaking another language.

However, there is a little uncanny-valley vibe to some of the speaking in a foreign language, with the mouth seeming *just* a little off to us...

We also get a look at Merlin 1.0, an AI framework for hyperscale recommendation systems, and Nemo Megatron, which is used for training large language models.

Now it's time for some new hardware!

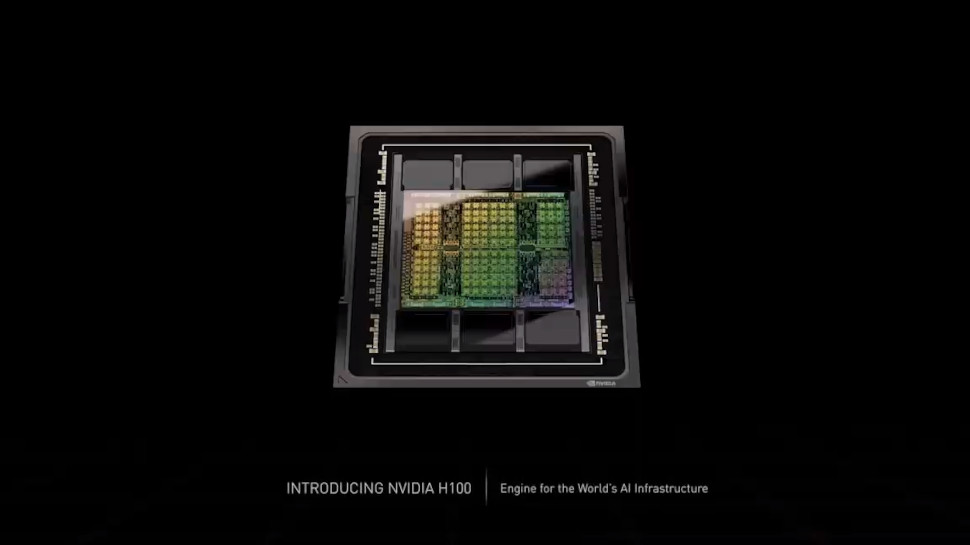

The first major hardware announcement is the Nvidia H100, a brand new Tensor Core GPU for accelerating AI workloads.

Based on TSMC's 4N process, it boasts a whopping 80 billion transistors and 4.9TB/s bandwidth, and delivers 60 TFLOPS of FP64 performance.

The H100 is built on a brand new GPU architecture from Nvidia called Hopper, the successor to Ampere.

The Hopper architecture will underpin the next generation of data center GPUs from Nvidia, delivering an “order of magnitude performance leap over its predecessor”.

Huang also announced a new iteration of the NVIDIA DGX system, each of which packs eight H100 GPUs.

“AI has fundamentally changed what software can do and how it is produced. Companies revolutionizing their industries with AI realize the importance of their AI infrastructure,” he said.

“Our new DGX H100 systems will power enterprise AI factories to refine data into our most valuable resource — intelligence.”

Nvidia says it can also connect up to 32 DGXs (containing 256 H100 GPUs in total) with its NVLink technology, creating a “DGX Pod” that hits up to one exaflop of AI performance.

And it gets better. Multiple DGX Pods can be connected together to create DGX SuperPODs, which Huang described as “modern AI factories”.

A brand new supercomputer developed by Nvidia, named Eos, will feature 18 DGX Pods. Apparently, it will achieve 4x the AI processing of the world’s current most powerful supercomputer, Fugaku.
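For a sense of scale, the figures quoted above can be multiplied out in a few lines of Python. This is only a sketch based on the numbers in this article (eight GPUs per DGX, 32 DGXs per Pod, 18 Pods in Eos, "up to" one exaflop per Pod). The constant and function names are ours, and the exaflop total is a ceiling derived from that "up to" figure, not a number Nvidia quoted.

```python
# Figures as quoted in the keynote coverage above.
GPUS_PER_DGX = 8        # H100 GPUs in each DGX H100 system
DGX_PER_POD = 32        # DGX systems linked over NVLink in one DGX Pod
PODS_IN_EOS = 18        # DGX Pods in the Eos supercomputer
POD_AI_EXAFLOPS = 1.0   # "up to" one exaflop of AI performance per Pod


def pod_gpu_count() -> int:
    """H100 GPUs in a single fully populated DGX Pod."""
    return GPUS_PER_DGX * DGX_PER_POD


def eos_totals() -> dict:
    """Totals for Eos, derived purely from the per-Pod figures."""
    dgx_systems = PODS_IN_EOS * DGX_PER_POD
    return {
        "dgx_systems": dgx_systems,
        "gpus": dgx_systems * GPUS_PER_DGX,
        # Ceiling only: assumes every Pod reaches its "up to" figure.
        "ai_exaflops_ceiling": PODS_IN_EOS * POD_AI_EXAFLOPS,
    }


if __name__ == "__main__":
    print("GPUs per DGX Pod:", pod_gpu_count())
    for name, value in eos_totals().items():
        print(f"Eos {name}: {value}")
```

The per-Pod result matches the 256 GPUs Nvidia cites, and scaling that to 18 Pods gives Eos 576 DGX systems and 4,608 H100 GPUs.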

The company expects Eos to be online within the next few months, and for it to be the fastest AI computer in the world, as well as the blueprint for advanced AI infrastructure for NVIDIA's hardware partners.

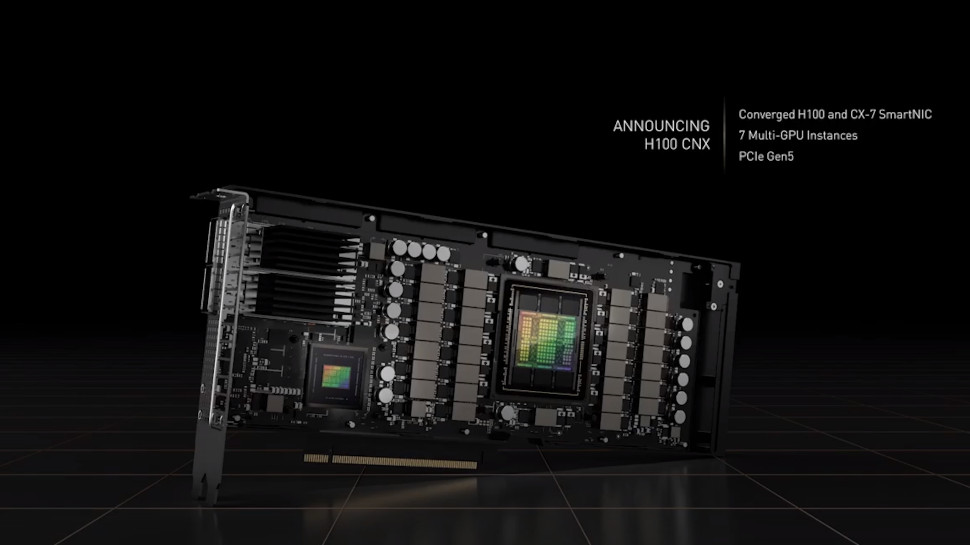

To help avoid traditional networking bottlenecks, Nvidia has also combined an H100 GPU with its CX-7 SmartNIC, which it bills as the most advanced networking processor.

The Nvidia H100 CNX “avoids bandwidth bottlenecks, while freeing the CPU and system memory to process other parts of the application”, Huang explained.

Huang then provided an update on Grace, Nvidia’s first ever data center CPU, purpose-built for AI.

He says the new Arm-based processor is “progressing fantastically” and should be ready to hit the shelves next year.

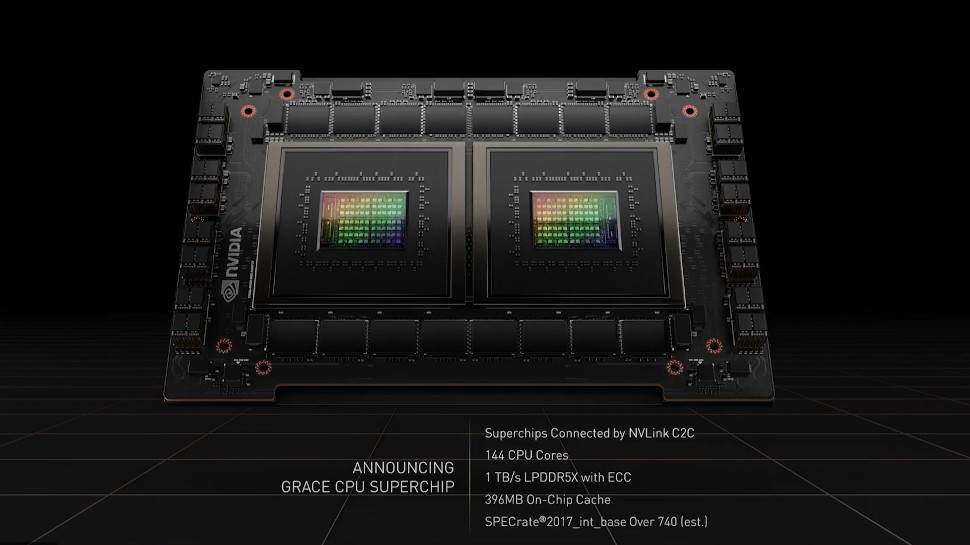

Grace will feature in two new mega multi-chip modules (MCMs) from Nvidia: Grace Hopper and the Grace CPU Superchip.

The former combines a Grace CPU and Hopper-based GPU, while the latter connects two Grace CPUs together.

After a comprehensive sweep through a host of other AI models and libraries, plus a quick stop to mention the famous Apollo 13 mission (often cited as the origin of the "digital twin" concept), it's on to something a little different - the Omniverse.

Nvidia has big goals with the Omniverse, its platform for 3D design and simulation, and is certainly throwing a lot of weight behind it.

The company is using GTC as a launchpad for a bunch of new tools and resources for Omniverse, including a framework for building fully-animated digital avatars.

There’s a lot here to unpack, but companies could use Omniverse to create digital twins of their facilities for the purposes of safety optimization, or to launch simulations to assist robotics development.

All these new programs require a lot of power, Nvidia says, and luckily it has just the thing.

The new NVIDIA OVX Server is powered by eight Nvidia A40 GPUs and dual Intel Ice Lake CPUs, and is designed to support the huge computing needs created by the Omniverse.

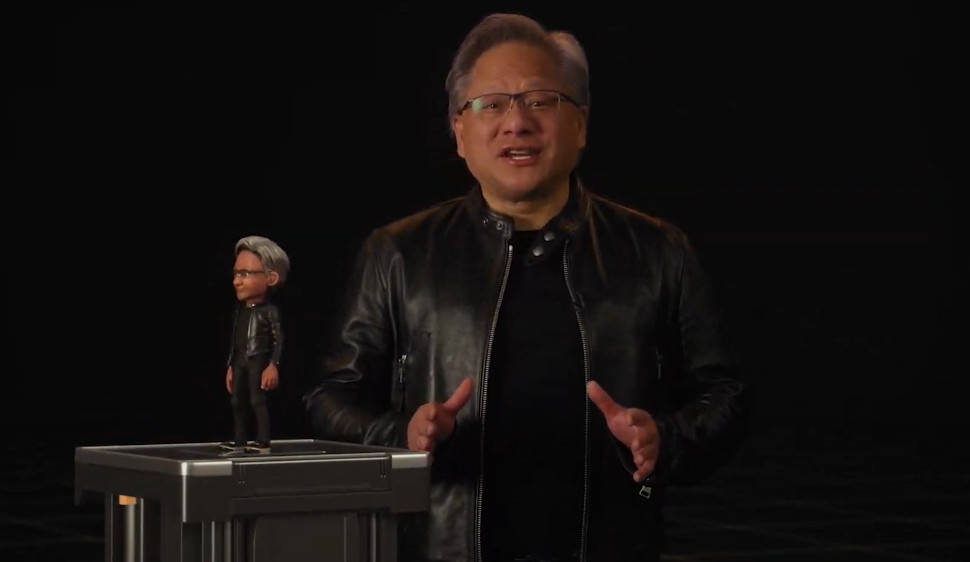

After showing off a slightly nightmarish "Toy Jensen" avatar, which uses AI to run almost independently, it's on to driving and autonomous vehicles.

Nvidia has been investing a lot into autonomous driving in recent years, and wants to keep that trend going.

The company showed a video of its updated Drive platform steering a car on live roads, noting that it now shows the intent of where it will be going next, and will also keep an eye on the driver.

Nvidia also announced Hyperion 9, the next generation of its self-driving car platform, which will launch in cars shipping in 2026.

In order to help guide these vehicles, Nvidia says that it expects to map all major highways in North America, Europe, and Asia by the end of 2024 - with all of this data also able to be uploaded into the Omniverse for building simulations and training.

Then it's on to a few more use cases for the Omniverse, including in healthcare, factory design, and even training your next generation of robots.

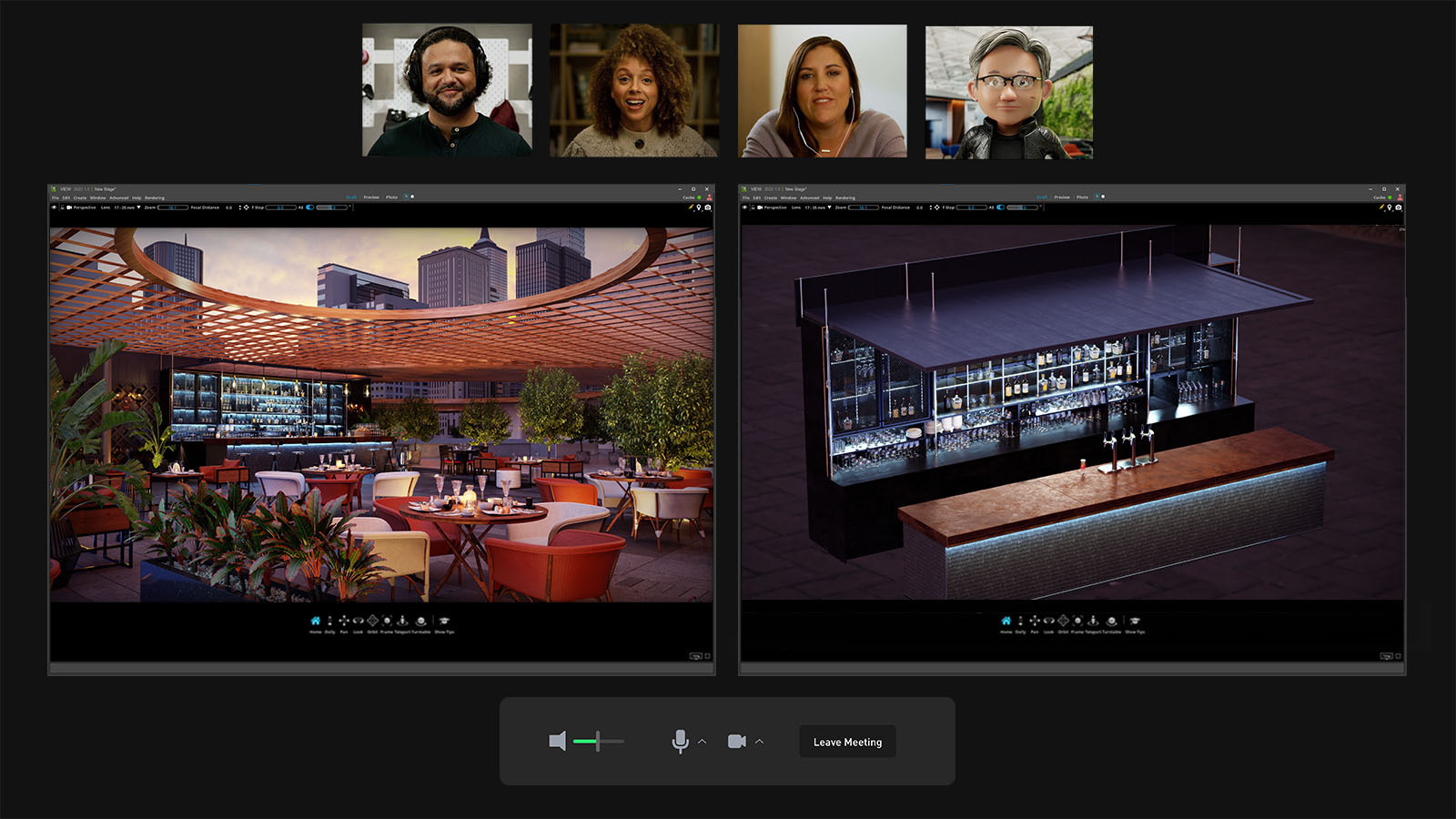

Then one last major announcement. Huang rounded out the show by announcing Omniverse Cloud, a new service designed to facilitate real-time 3D design collaboration between creatives and engineers.

Omniverse Cloud supposedly cuts through the complexity created by the need for multiple designers to work together across a variety of different tools and from a variety of locations.

“We want Omniverse to reach every one of the tens of millions of designers, creators, roboticists and AI researchers,” said Huang.

And that's a wrap from Nvidia GTC 2022!

Huang rounds out the keynote with a whole load of thanks for the company's developers and workers around the world, and ends on a promise for even more cool things to come in the future.

"We will strive for yet another million-x increase within the next decade," he says.

With that, it's back to another AI symphony to play us out.

Thanks for following the live blog, and stay tuned for more Nvidia news here on TechRadar Pro!