The three most important metrics in cloud: latency, latency, latency

Latency is the most important data point

When comparing public cloud services such as AWS, Google Cloud Platform, Microsoft Azure, or Linode, you’re likely to notice their specs on IOPS and throughput. These can be impressive numbers, but they’re just a small part of the overall performance factors that need to be considered when choosing the right cloud for your workloads. And they’re not nearly as important as latency.

Boyan Ivanov, CEO, StorPool.

Sadly, you won’t find latency numbers on vendors’ battle cards, so we measured latency ourselves to compare the performance of some popular services.

But first, let’s discuss latency. It’s a cloud’s most important metric because it’s the most user-visible: latency directly impacts the time it takes to load a web page or save changes to a selfie.

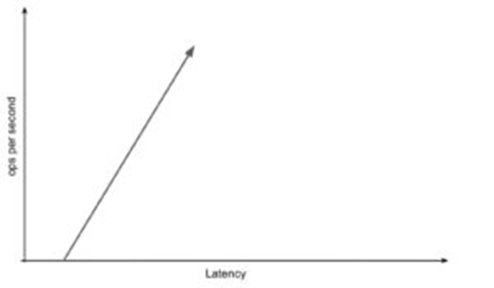

Each workload, system, or application (web server, database, enterprise software, etc.) is a little different and has distinct characteristics and requirements. However, the basic premise is the same: at a low number of operations per second, latency is low, and as operations per second increase, so does latency. Here’s a handy graph showing the relationship:

In a perfect world, increasing operations per second wouldn’t increase latency. Alas, ours is not a perfect world.

Note that this increase is not perfectly linear. With four tasks on four CPU cores, each task has a core to itself and you get the best service: processing time may be one second. At six tasks, however, the tasks must share the four cores, and processing takes 1.5 seconds.
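The one-second and 1.5-second figures fit a simple idealised model. It is assumed here for illustration rather than taken from our tests. Each task needs one second of one core, and the scheduler time-slices all runnable tasks evenly across the cores. In this Python sketch, `ps_latency` is a hypothetical helper name.

```python
def ps_latency(tasks: int, cores: int, service_time: float = 1.0) -> float:
    """Per-task completion time under idealised processor sharing.

    Hypothetical model: every task needs `service_time` seconds on one core,
    and the scheduler time-slices all tasks evenly across `cores`.
    """
    if tasks <= 0 or cores <= 0:
        raise ValueError("tasks and cores must be positive")
    # Each task gets min(1, cores / tasks) of a core, so it finishes
    # max(1, tasks / cores) times later than on a dedicated core.
    return service_time * max(1.0, tasks / cores)


for n in (2, 4, 6, 8):
    print(f"{n} tasks on 4 cores: {ps_latency(n, cores=4):.1f} s")
```

In this model, latency stays flat until tasks outnumber cores, then grows with every extra task. Real systems typically add scheduling and contention overheads on top of this idealised curve.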

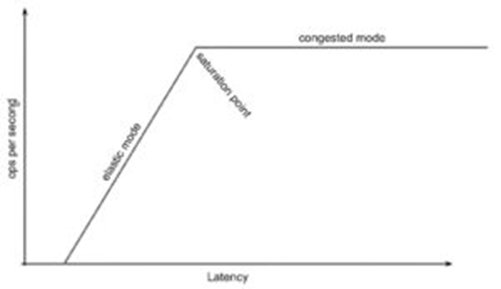

Elastic vs. Congested Mode

Along this spectrum, a system operates in one of two modes. Let’s call these “elastic mode” and “congested mode.” In elastic mode, you can request a lot of resources from the system, and it delivers them efficiently. But at some point the system becomes saturated with requests, gets choked, and shifts to congested mode. Past that saturation point, latency keeps climbing and loading times can grow many times over.
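One textbook way to see the two modes is the M/M/1 queue: random arrivals, a single server, and exponentially distributed service times. It is swapped in here as an illustration, not as the model behind our graphs. Its mean response time is W = 1 / (μ - λ), where λ is the arrival rate and μ is the service rate. The 1,000 ops/s service rate below is a hypothetical figure.

```python
def mm1_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean time in system (seconds) for an M/M/1 queue: W = 1 / (mu - lambda)."""
    if arrival_rate < 0 or service_rate <= 0:
        raise ValueError("rates must be non-negative and service_rate positive")
    if arrival_rate >= service_rate:
        # At or past saturation the queue grows without bound.
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


SERVICE_RATE = 1000.0  # hypothetical: 1,000 ops/s, i.e. 1 ms per op when idle
for load in (100, 500, 900, 990):
    w_ms = mm1_response_time(load, SERVICE_RATE) * 1000
    print(f"{load:4d} ops/s -> {w_ms:6.1f} ms")
```

With these numbers, latency goes from about 1.1 ms to 2 ms between 100 and 500 ops/s. Between 900 and 990 ops/s it goes from 10 ms to 100 ms. That is the same elastic-then-congested shape described above.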

This graph illustrates the saturation point at which elastic mode changes to congested mode:

We want our applications to operate in elastic mode, where latency is minimal and operations complete quickly enough to keep the application responsive for end users. You might think the sweet spot is low on the graph: four tasks, four CPU cores, one-second processing time, remember?

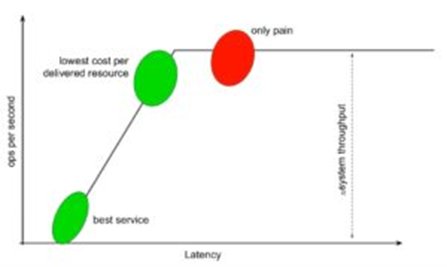

However, the upper end of elastic mode is where you’ll achieve the lowest cost per fulfilled task: you get high operations per second while latency and user experience remain entirely acceptable. In fact, the ideal zone for overall economy and efficiency is about 90 percent of the way to the saturation point, as the graph below shows.
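The cost side of this argument is simple arithmetic. An instance costs the same per hour whether it is busy or idle, so its cost per operation falls in inverse proportion to the operations per second it handles. The price and saturation point below are hypothetical, as is the helper name `cost_per_million_ops`.

```python
def cost_per_million_ops(hourly_cost: float, ops_per_sec: float) -> float:
    """Cost of one million operations on an instance billed at a flat hourly rate."""
    if ops_per_sec <= 0:
        raise ValueError("ops_per_sec must be positive")
    ops_per_hour = ops_per_sec * 3600
    return hourly_cost / ops_per_hour * 1_000_000


HOURLY_COST = 3.60       # hypothetical instance price, $ per hour
SATURATION_OPS = 1000.0  # hypothetical saturation point, ops/s
for fraction in (0.25, 0.5, 0.9):
    ops = fraction * SATURATION_OPS
    cost = cost_per_million_ops(HOURLY_COST, ops)
    print(f"{fraction:.0%} of saturation: ${cost:.2f} per million ops")
```

Running at 90 percent of saturation rather than 25 percent cuts the cost per operation to under a third. That is why the upper end of elastic mode is attractive, as long as latency stays acceptable.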

Of course, throughput still matters. In the graph above, it shows up as the operations per second the system sustains relative to its saturation level: better in elastic mode, worse in congested mode. The questions to ask are how high the saturation point sits and how many operations per second the system processes while in elastic mode. Throughput is generally stated in megabytes per second or in an application-level measure such as database transactions per second (TPS) or pages opened per second.
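Throughput and latency are tied together by Little's law, a standard queueing result rather than something derived from our benchmarks. It states that the average number of requests in flight equals throughput multiplied by average latency. Rearranged, throughput = requests in flight / latency. The queue depth, latencies, and block size below are hypothetical.

```python
def throughput_ops(in_flight: float, latency_s: float) -> float:
    """Little's law rearranged: throughput = requests in flight / latency."""
    if latency_s <= 0:
        raise ValueError("latency_s must be positive")
    return in_flight / latency_s


def bandwidth_mib_s(ops_per_sec: float, block_size_bytes: int) -> float:
    """Convert an operation rate into MiB/s for a fixed block size."""
    return ops_per_sec * block_size_bytes / (1024 * 1024)


# Hypothetical storage test: 32 requests in flight, 4 KiB blocks.
for latency_ms in (4.0, 2.0):
    iops = throughput_ops(32, latency_ms / 1000)
    print(f"{latency_ms} ms -> {iops:,.0f} IOPS, "
          f"{bandwidth_mib_s(iops, 4096):.2f} MiB/s")
```

At a fixed queue depth, halving latency doubles throughput. This is one reason a headline IOPS figure says little on its own.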

Measuring Latency

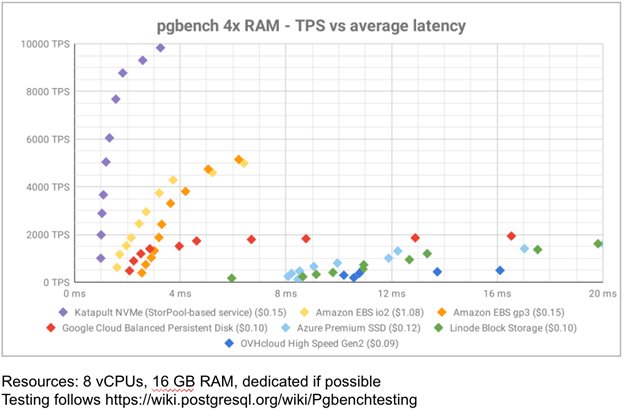

We tested throughput and latency on AWS, GCP, Azure, Linode, OVHcloud, and Katapult. To compare apples to apples, we allocated the same amount of memory and the same number of vCPUs, and used the same methodology and the same database, in each test.

When building a cloud, buyers usually focus on IOPS and cost, which often leads to disappointing results: once an application is moved to the new cloud, it’s too slow, costs more than expected, or both. More troubling, in many cases the IOPS numbers look satisfactory while only a few workloads are running. But as the number of workloads grows to hundreds or thousands, congestion sets in, and the cloud operations team scrambles to resolve application issues and customer complaints.
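The arithmetic behind that trap can be sketched with a shared storage pool. The sketch assumes identical workloads and a hypothetical pool rated at 50,000 IOPS. The helper below, also a hypothetical name, counts how many workloads fit before the pool passes a target utilization.

```python
import math


def max_workloads(pool_iops: float, iops_per_workload: float,
                  target_utilization: float = 0.9) -> int:
    """Number of identical workloads a shared pool can host below a target utilization."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    if iops_per_workload <= 0:
        raise ValueError("iops_per_workload must be positive")
    return math.floor(target_utilization * pool_iops / iops_per_workload)


POOL_IOPS = 50_000   # hypothetical rated IOPS of the shared storage pool
AVG_IOPS = 200       # hypothetical average IOPS per workload
print("steady state:", max_workloads(POOL_IOPS, AVG_IOPS))
print("3x synchronized burst:", max_workloads(POOL_IOPS, 3 * AVG_IOPS))
```

A handful of workloads fits with room to spare. If every workload bursts to three times its average at the same moment, the same pool supports only a third as many before crossing the target.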

The popular cloud service providers mentioned above sell disks rated for a certain number of IOPS, but IOPS isn’t everything.

In our benchmarks, OVHcloud could not even deliver 1000 TPS. Linode and Azure hovered close but only exceeded 1000 TPS when in-database latency was over 10 ms, as you can see in the graph below (blue, green, and teal, respectively). The reason lies under the hood: two of these services, OVHcloud and Linode, are based on Ceph. Ceph is versatile storage software, but its architecture is known to impose performance constraints. It’s not known which storage solution Azure uses, but it clearly suffers from similar performance issues. Meanwhile, GCP (in red) topped out at 2000 TPS, reaching as high as 1500 TPS in the sub-4 ms latency range.

AWS, on the other hand, delivered up to about 5000 TPS at latencies under 8 ms, appreciably more than the providers discussed so far. Finally, Katapult stands out as the clear leader, delivering database performance of up to 10000 TPS with latencies under 4 ms. All of these results can be obtained at reasonable prices from the public cloud providers tested.
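The comparison above reads each provider's throughput at a latency ceiling, for example the TPS achieved in the sub-4 ms range. Given (TPS, latency) pairs from a load test, that reading is a filter and a maximum. The helper name and the sample points in this sketch are invented for illustration; they are not our benchmark data.

```python
from typing import Iterable, Tuple


def tps_at_latency_ceiling(curve: Iterable[Tuple[float, float]],
                           max_latency_ms: float) -> float:
    """Highest measured TPS whose latency is at or below `max_latency_ms`.

    `curve` holds (tps, latency_ms) pairs from a load test. Returns 0.0 if
    no measured point meets the ceiling.
    """
    eligible = [tps for tps, latency_ms in curve if latency_ms <= max_latency_ms]
    return max(eligible, default=0.0)


# Invented sample points for illustration only, not benchmark data.
sample_curve = [(400, 1.2), (800, 2.0), (1200, 3.5), (1600, 6.0)]
print(tps_at_latency_ceiling(sample_curve, max_latency_ms=4.0))
print(tps_at_latency_ceiling(sample_curve, max_latency_ms=1.0))
```

Comparing services at the same ceiling, rather than at peak TPS, separates a service that stays in elastic mode from one that reaches high throughput only after latency has already climbed.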

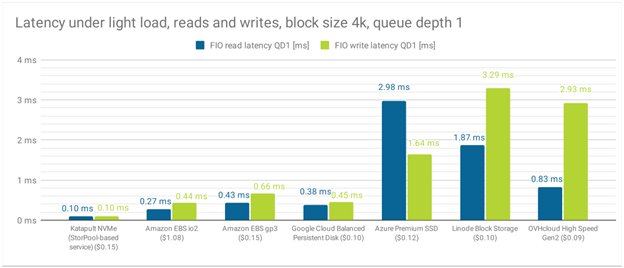

In addition to the typical throughput benchmarking, let’s compare the latency of each service under a light load:

Here you’ll see a vast difference between the services, with the Ceph-based clouds showing the worst latency. Just as with throughput, Katapult, the StorPool-powered cloud, had the lowest latency, edging out AWS. The graph above also shows the cost of each service we tested.

The next time you’re shopping for a cloud or building one, look beyond the advertised upfront IOPS figures. Aim for the lowest possible latency, understand how latency relates to throughput, and achieve the lowest cost per operation by staying within elastic mode.