How machine learning and image recognition could revolutionise search

Unlocking information from images

Text in documents is easy to search, but there's a lot of information in other formats. Voice recognition turns audio – and video soundtracks – into text you can index and search. But what about the video itself, or other images?

Searching for images on the web would be a lot more accurate if, instead of just looking for text on the page or in the caption that suggests a picture is relevant, the search engine could actually recognise what was in the picture. Thanks to machine learning techniques using neural networks and deep learning, that's becoming more achievable.

Caption competition

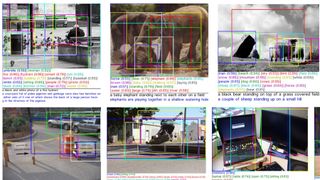

When a team of Microsoft and Facebook researchers released Common Objects in Context, a massive data set of over 300,000 images with 2.5 million objects labelled by people, they said all those objects are things a four-year-old child could recognise. So a team of Microsoft researchers working on machine learning decided to see how well their systems could do with the same images – not just recognising them, but breaking them up into different objects, putting a name to each object and writing a caption to describe the whole image.

To measure the results, they asked one set of people to write their own captions and another set to compare the human-written and machine-generated captions and say which they preferred.

"That's what the true measure of quality is," explains distinguished scientist John Platt from Microsoft Research. "How good do people think these captions are? 23% of the time they thought ours were at least as good as what people wrote for the caption. That means a quarter of the time that machine has reached as good a level as the human."
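As a rough illustration of how a figure like that is tallied, here is a minimal Python sketch. It assumes each comparison is recorded as "human", "machine" or "tie"; the function name and the split between machine wins and ties are invented for the example, and only the 23% total is taken from Platt's quote.

```python
from collections import Counter


def at_least_as_good_rate(judgements):
    """Share of comparisons where the machine caption was preferred or tied."""
    counts = Counter(judgements)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgements to score")
    return (counts["machine"] + counts["tie"]) / total


# Hypothetical tallies: the 15/8 split is made up, chosen only so that
# machine wins plus ties add up to the 23% quoted in the article.
judgements = ["human"] * 77 + ["machine"] * 15 + ["tie"] * 8
rate = at_least_as_good_rate(judgements)
print(f"Machine caption at least as good: {rate:.0%}")
```

Counting ties alongside outright wins is what "at least as good" implies; a stricter measure would count only the cases where the machine caption was preferred.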

Part of the problem was the visual recogniser. Sometimes it would mistake a cat for a dog, or think that long hair was a cat, or decide that there was a football in a photograph of people gesticulating at a sculpture. This is just what a small team was able to build in four months over the summer, and it's the first time they had a labelled set of images this large to train and test against.

"We can do a better job," Platt says confidently.

Machine strengths

Machine learning already does much better on simple images that only have one thing in the frame. "The systems are getting to be as good as an untrained human," Platt claims. That's testing against a set of pictures called ImageNet, which are labelled to show how they fit into 22,000 different categories.

"That includes some very fine distinctions an untrained human wouldn't know," he explains. "Like Pembroke Welsh corgis and Cardigan Welsh corgis – one of which has a longer tail. A person can look at a series of corgis and learn to tell the difference, but a priori they wouldn't know. If there are objects you're familiar with you can recognise them very easily but if I show you 22,000 strange objects you might get them all mixed up." Humans are wrong about 5% of the time with the ImageNet tests and machine learning systems are down to about 6%.

That means machine learning systems could do better than ordinary people at recognising things like dog breeds or poisonous plants. Another recognition system, Project Adam, which MSR head Peter Lee showed off earlier this year, tries to do that from your phone.

Project Adam

Project Adam was looking at whether you can make image recognition faster by distributing the system across multiple computers rather than running it on a single fast computer (so it can run in the cloud and work with your phone). However, it was trained on images with just one thing in them.

"They ask 'what object is in this image?'" explains Platt. "We broke the image into boxes and we were evaluating different sub-pieces of the image, detecting common words. What are the objects in the scene? Those are the nouns. What are they doing? Those are verbs like flying or looking.

"Then there are the relationships like next to and on top of, and the attributes of the objects, adjectives like red or purple or beautiful. The natural next step after whole image recognition is to put together multiple objects in a scene and try to come up with a coherent explanation. It's very interesting that you can look in the image and detect verbs and adjectives."
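The idea of breaking an image into boxes and detecting words in each one can be sketched in a few lines of Python. This is not the researchers' system: the box size, stride, threshold and the `toy_scorer` function are all assumptions made for illustration, with `toy_scorer` standing in for a trained recogniser that would return a confidence for each word it sees in a region.

```python
def boxes(width, height, size, stride):
    """Yield (left, top, right, bottom) boxes that slide across the image."""
    for top in range(0, height - size + 1, stride):
        for left in range(0, width - size + 1, stride):
            yield (left, top, left + size, top + size)


def detect_words(image_size, score_box, threshold=0.5):
    """Return the best score per word over all boxes, ignoring weak detections."""
    width, height = image_size
    best = {}
    for box in boxes(width, height, size=100, stride=50):
        for word, score in score_box(box).items():
            if score >= threshold and score > best.get(word, 0.0):
                best[word] = score
    return best


def toy_scorer(box):
    """Hypothetical stand-in for a trained model: fixed scores by position."""
    left, top, _right, _bottom = box
    if left < 100 and top < 100:
        return {"dog": 0.9, "brown": 0.6}   # a noun and an adjective
    return {"grass": 0.7, "dog": 0.2}       # weak "dog" score is discarded


words = detect_words((200, 200), toy_scorer)
print(sorted(words))
```

The detected words would then be handed to a separate step that assembles them into a sentence, which is the part Platt describes as coming up with a coherent explanation of the scene.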

Mary (Twitter, Google+, website) started her career at Future Publishing, saw the AOL meltdown first hand the first time around when she ran the AOL UK computing channel, and she's been a freelance tech writer for over a decade. She's used every version of Windows and Office released, and every smartphone too, but she's still looking for the perfect tablet. Yes, she really does have USB earrings.
