Turn your Linux PC into a gaming machine

Linux-based PCs aren't second-tier machines any longer

There was a time when CPU performance came down to one thing: clock speed. A faster CPU could perform more operations in a given amount of time, and could therefore complete a given task before a slower CPU.

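Clock speed is measured in hertz: the number of clock cycles per second. Roughly speaking, that's how many instructions the CPU can complete each second (OK, we're simplifying a bit here: some instructions take more than one clock cycle, and modern chips can complete several simple ones per cycle).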
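The relationship between clock speed, cycle length and instruction throughput is simple arithmetic. This Python sketch assumes an idealised chip that completes a fixed number of instructions per cycle (IPC). The function names and IPC values are illustrative assumptions, not measurements of any real processor.

```python
# Minimal sketch: clock speed versus cycle time and throughput.
# Assumption: an idealised CPU that completes a fixed `ipc`
# (instructions per cycle); real chips vary this by instruction and workload.


def cycle_time_ns(clock_ghz: float) -> float:
    """Length of one clock cycle in nanoseconds (1GHz = 1e9 cycles per second)."""
    return 1.0 / clock_ghz


def instructions_per_second(clock_ghz: float, ipc: float) -> float:
    """Idealised throughput: cycles per second multiplied by instructions per cycle."""
    return clock_ghz * 1e9 * ipc


if __name__ == "__main__":
    print(f"One cycle at 3.0GHz lasts {cycle_time_ns(3.0):.3f} ns")
    # Hypothetical IPC values, chosen only to show the multiplication.
    for ipc in (1.0, 2.0):
        print(f"IPC {ipc}: {instructions_per_second(3.0, ipc):.1e} instructions per second")
```

At 3GHz each cycle lasts about a third of a nanosecond. Doubling the assumed IPC doubles throughput without touching the clock, which is one reason clock speed alone can't tell you which of two CPUs is faster.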

Most modern processors run at a few gigahertz (1GHz = 1,000,000,000Hz). What counts as an instruction depends on the type of processor. We'll be looking at the x86 processor family, which is used in most desktops and laptops.

This instruction set started in 1978 with the 16-bit Intel 8086 chip. The core instructions have stayed the same, and new ones have been added as new features have become necessary. The ARM family of processors (used in most mobile devices) uses a different instruction set, so it will perform differently at the same clock speed.

As well as performing different numbers of operations, different processors perform those operations on different amounts of data. Most modern CPUs are either 32- or 64-bit: this word length is the number of bits of data the CPU can handle in a single instruction.

So, 64-bit should be twice as fast as 32-bit? Well, no. It depends on how big your numbers are. If you're performing an operation on a 20-bit number, it will run at the same speed on 64- and 32-bit machines, because the number fits comfortably into a single 32-bit word.
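A rough way to see why word length only matters for large values is to count how many machine words a number occupies. In this Python sketch, the helper name `words_needed` is our own; it treats values as unsigned and ignores how a real compiler schedules multi-word arithmetic. A 20-bit value fits in one word at either width, whereas a 48-bit value needs two 32-bit words but only one 64-bit word.

```python
# Sketch: how many machine words an unsigned value occupies at a given word length.
# Assumption: one arithmetic step per word, which real CPUs only approximate.


def words_needed(value: int, word_bits: int) -> int:
    """Number of word_bits-wide words needed to hold a non-negative integer."""
    if value < 0:
        raise ValueError("this sketch only handles unsigned values")
    bits = max(value.bit_length(), 1)  # zero still occupies one word
    return -(-bits // word_bits)  # ceiling division


largest_20_bit = 2**20 - 1  # 1,048,575
largest_48_bit = 2**48 - 1

for word_bits in (32, 64):
    print(
        f"{word_bits}-bit:",
        words_needed(largest_20_bit, word_bits), "word(s) for a 20-bit value,",
        words_needed(largest_48_bit, word_bits), "word(s) for a 48-bit value",
    )
```

Adding two 48-bit numbers on a 32-bit CPU therefore takes roughly two word-sized steps (low halves first, then high halves plus the carry), while a 64-bit CPU can do it in one.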

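Word length also limits how much memory the CPU can address directly: 32-bit addresses can point to at most 4GB (2^32 bytes) of RAM, while 64-bit addressing removes that ceiling for any realistic amount of memory.

One of the biggest aspects of CPU performance is the number of cores. In effect, each core is a processor in its own right that can run software with minimal interference from the other cores.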

Threadbare

As with the word length, the number of cores can't simply be multiplied by the clock speed to determine the power of the CPU.

A task can take advantage of multiple CPU cores only if it is multi-threaded. This means that the developer has divided the program into separate threads, each of which can run on a different core at the same time.
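Here is a minimal Python sketch of that kind of split. One job (summing squares) is cut into independent chunks, each chunk is handed to its own worker thread, and the partial results are combined at the end. The function names and the four-worker default are illustrative choices. Note that in CPython the global interpreter lock prevents threads from executing Python code on several cores at once, so for CPU-bound work you would swap `ThreadPoolExecutor` for `ProcessPoolExecutor`; the structure of the split stays the same.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(numbers):
    """The work one thread does: process only its own slice of the data."""
    return sum(n * n for n in numbers)


def parallel_sum_of_squares(numbers, workers=4):
    """Split `numbers` into roughly equal chunks, run each on its own thread, then combine."""
    chunk_size = -(-len(numbers) // workers) or 1  # ceiling division; at least 1
    chunks = [numbers[i:i + chunk_size] for i in range(0, len(numbers), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    # Combining the partial results is a serial step that cannot be spread across cores.
    return sum(partial_sums)


if __name__ == "__main__":
    data = list(range(1_000_000))
    print(parallel_sum_of_squares(data))
```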

Not all tasks can be split up in this way, though. Running a single-threaded program on a multi-core CPU will not be any faster than running it on a single core. However, you will be able to run two single-threaded programs on a multi-core CPU faster than the two would run on a single core.
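A standard way to quantify this is Amdahl's law (a general model, not something the benchmarks here measure). If a fraction p of a program's work can run in parallel, n cores can speed it up by at most 1 / ((1 - p) + p / n). The Python sketch below evaluates it for four and six cores, matching the chips tested later; the parallel fractions are illustrative assumptions.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speed-up when only `parallel_fraction` of the work can be split across `cores`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative workloads: purely single-threaded, half parallel, almost fully parallel.
    for p in (0.0, 0.5, 0.95):
        print(f"p={p}: 4 cores -> {amdahl_speedup(p, 4):.2f}x, 6 cores -> {amdahl_speedup(p, 6):.2f}x")
```

A purely single-threaded task (p = 0) gets no speed-up however many cores it has, which matches the point above. A task that is only half parallel can never exceed 2x, however many cores you add.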

We tend to think of memory as something a computer has a single lump of, and divides up among the running programs. But it's more nuanced than this.

Rather than being a single thing, it's a hierarchy of different levels. Typically, the faster the memory, the more expensive it is per byte, so most computers have a small amount of very fast memory, called cache, a much larger amount of RAM, and some swap space on the hard drive that works as a sort of memory overflow.

When it comes to CPUs, it's the cache that's most important, since this is on the chip. While you can add more RAM and adjust the amount of swap, the cache is fixed. Cache is itself split into levels, with the lower-numbered levels (starting at level 1) being smaller and faster than the higher ones.
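Why a hierarchy of small, fast caches in front of large, slow RAM pays off can be shown with the textbook average-access-time calculation. Every access pays the level 1 latency, and only the fraction that misses goes on to pay the next level's latency as well. The latencies and hit rates in this Python sketch are hypothetical round numbers, not measurements of the chips tested here.

```python
# Hypothetical round-number figures, in CPU cycles; not measurements of any real chip.
LEVELS = [
    # (name, access latency in cycles, hit rate at this level)
    ("L1", 4, 0.90),
    ("L2", 12, 0.80),
    ("L3", 40, 0.75),
    ("RAM", 200, 1.0),  # treat main memory as always satisfying the request
]


def average_access_cycles(levels):
    """Average access time: each level's latency is paid only by accesses that reach it."""
    total = 0.0
    reach = 1.0  # fraction of accesses that get as far as the current level
    for _name, latency, hit_rate in levels:
        total += reach * latency
        reach *= 1.0 - hit_rate  # misses carry on to the next level
    return total


if __name__ == "__main__":
    print(f"Average access: {average_access_cycles(LEVELS):.1f} cycles, versus 200 for RAM alone")
```

With these assumed figures, the vast majority of accesses never leave the small level 1 cache, so the average cost stays close to its latency rather than that of RAM.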

Configuration

So, in light of all this, it can be difficult to know how different configurations will perform in different situations, and when you throw in an operating system that doesn't always have the most reliable driver sets, things can get even more confusing.

We've taken a triumvirate of different processors to see how they cope with the vagaries of Linux:

- AMD APU A8-3850 3.0GHz (cores: 4, cache: 4 x 1MB level 2) £79

- AMD Phenom II X6 1100T 3.3GHz (cores: 6, cache: 6 x 512KB level 2, 6MB level 3) £100

- Intel Core i5-2500K 3.6GHz (cores: 4, cache: 2 x 32KB level 1 and 256KB level 2 per core, 6MB level 3) £162

We're running all of them at their native clock speeds. You can always overclock later, but for now we need the setup to be as stable as possible. Once you're full of Linux love but craving a little more speed, you can dive into the BIOS and experiment with clock speeds and multipliers as much as you like.
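On current desktop platforms, the core clock you set in the BIOS is the product of a base (reference) clock and a CPU multiplier, so overclocking usually means raising one or both. The Python helper and the example values below are hypothetical, for illustration only; check what your own board and chip actually expose.

```python
def core_clock_mhz(base_clock_mhz: float, multiplier: float) -> float:
    """Core clock speed: the reference (base) clock multiplied by the CPU multiplier."""
    if base_clock_mhz <= 0 or multiplier <= 0:
        raise ValueError("base clock and multiplier must be positive")
    return base_clock_mhz * multiplier


if __name__ == "__main__":
    # Hypothetical settings: a 100MHz base clock with the multiplier raised from 36 to 40.
    for multiplier in (36, 40):
        print(f"100MHz x {multiplier} = {core_clock_mhz(100, multiplier) / 1000:.1f}GHz")
```

Because the base clock often feeds other parts of the system as well, many people prefer to raise only the multiplier on chips that leave it unlocked, such as Intel's "K" models.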

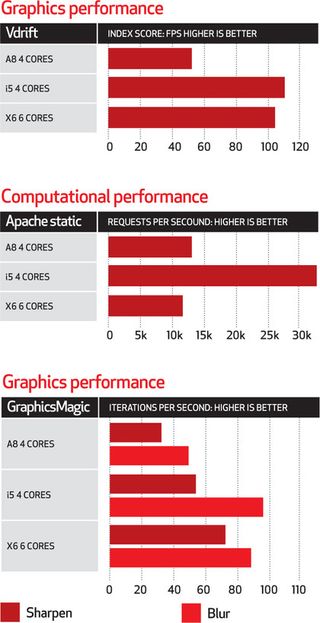

The Intel processor outperformed the AMD ones in almost every area. This isn't surprising, as it costs twice as much as the cheapest of them, but it also shows that Intel's Linux drivers are strong enough for its chips to keep the same large lead they enjoy under Windows.

In a few areas, such as the Apache static page test, it performed twice as well. The Sandy Bridge-based i5 almost always outperformed the Phenom II X6, despite having two fewer cores and only a slightly faster clock speed.

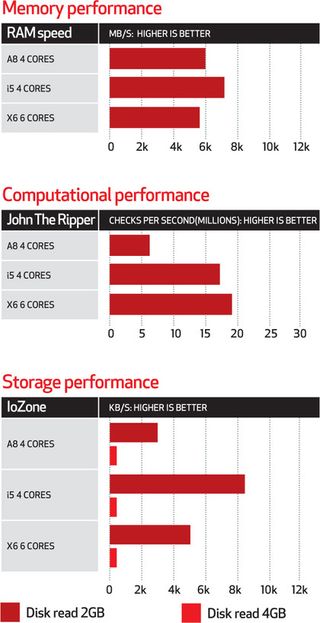

The only significant exceptions were the John the Ripper password-cracking test and some of the GraphicsMagick tests. These are highly parallel benchmarks, which take full advantage of the Phenom II X6's higher thread count.

Not all of the speed differences here are down to the CPU, though. The motherboards carry different hardware, even where they use similar technology, and the storage drives in particular behaved quite differently.

This resulted in dramatically faster read speeds for files under 2GB, though there was no difference for files above that size. Write speeds were roughly even across the different setups, too.

The choice of CPUs available today is probably more complex than it has ever been. There has been growth in simpler, low-power CPUs, complex processors, highly parallelised graphics chips and clusters.

More than ever, the question isn't "Which is the best processor?" but "What is the right solution for the task?" Answering it requires knowing what chips are on the market, what they cost and how they perform at different tasks.

The high-end Intel cores are the most powerful for everyday tasks, but that speed comes at a price. The extra cores in the X6 proved enough to match, and sometimes outperform, the i5 in the GraphicsMagick benchmarks, which test image manipulation, while leaving a significant chunk of cash in your wallet.

Unless you use KDE with every widget and effect enabled, though, the X4 is more than capable of handling most day-to-day computing tasks.
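To put the prices listed earlier in perspective, here is a rough cost-per-core calculation in Python using only the figures from our test list. It deliberately ignores per-core performance, which is exactly where the i5 earns its premium, so treat it as a crude value measure rather than a verdict.

```python
# Prices (GBP) and core counts as listed in our test configurations.
CPUS = {
    "AMD APU A8-3850": (79, 4),
    "AMD Phenom II X6 1100T": (100, 6),
    "Intel i5-2500K": (162, 4),
}


def price_per_core(price_gbp: float, cores: int) -> float:
    """Crude value measure: price divided by core count (ignores per-core speed)."""
    return price_gbp / cores


if __name__ == "__main__":
    for name, (price, cores) in CPUS.items():
        print(f"{name}: £{price_per_core(price, cores):.2f} per core")
    cheapest = min(CPUS, key=lambda name: price_per_core(*CPUS[name]))
    print("Lowest cost per core:", cheapest)
```

On this crude measure the Phenom II X6 comes out cheapest per core, at about £16.67 against £40.50 for the i5, which fits its strong showing in the heavily threaded tests.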

