Why the death of Moore's Law wouldn't be such a bad thing

Chip features can't keep getting smaller, but that doesn't mean an end to progress

Moore's Law is dead. Long live Moore's Law.

Rinse and repeat using the latest techno-detergents, including extreme UV lithography, and the cycle of impending doom followed by rebirth looks like going on forever.

Except it can't go on forever. So what happens when it stops? Actually, it doesn't mean an end to the progress of computing. Not immediately, anyway. In fact, it could help focus attention on areas of computing performance that currently tend to be ignored.

Sorry, what's Moore's Law again?

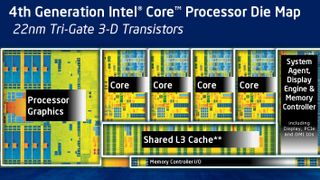

In the extremely unlikely event that you're unaware of what Moore's Law is, well, it's simply the observation that transistor densities in integrated circuits double every two years.

Put another way, Moore's Law essentially says computer chips either double in complexity or halve in cost – or some mix of the two, depending on what you're after – every couple of years.
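To put rough numbers on that, here is a minimal sketch of the doubling arithmetic. The function name `projected_density` and the starting value of 1.0 are purely illustrative, and the two-year doubling period is the rule of thumb quoted above, not a measured figure.

```python
def projected_density(initial_density: float, years: float,
                      doubling_period_years: float = 2.0) -> float:
    """Return the density after `years`, assuming it doubles once
    every `doubling_period_years` (an idealised reading of Moore's Law)."""
    doublings = years / doubling_period_years
    return initial_density * 2 ** doublings


# A decade at one doubling every two years is five doublings.
print(projected_density(1.0, 10))  # 32.0
```

Read the other way round, the same arithmetic says a chip of fixed complexity would cost roughly 1/32 as much after a decade, if the whole gain went into cost rather than complexity.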

Anyway, I've been at this technology journalism lark for almost exactly a decade. And if there's a single theme that sums it all up, it's Moore's Law.

It seemingly underpins the progress of computing of all kinds and constantly looks under threat of coming to an end. It's the opposite of nuclear fusion or a cure for cancer. Both of those seem to remain 30-odd years away but never arrive. Moore's Law looks constantly doomed to end but never actually dies.


When the music stops

Of course, one day Moore's Law will surely grind to a halt. There are lots of reasons why it might happen and some why it must happen. And not all of them are technological.

Air travel is a handy proxy here. Forty years ago it seemed inevitable that supersonic flight would become commonplace. Today, jetliners are generally little faster than most of those in the 70s and much slower than one from that era, Concorde.

For air travel, then, costs and practicalities have prevented progress, not the laws of physics or the limits of scientific endeavour. We can do supersonic transport, but we choose not to.

The same thing may happen with computer chips. As transistors shrink, the new technologies required to keep the show going are getting prohibitively expensive. So, we may choose not to pursue them.

You cannae break the laws of physics

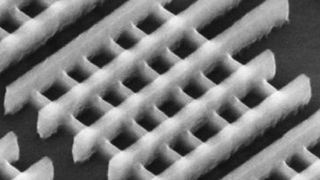

Then there are the aforementioned laws of physics. In very simple terms, you can't make devices that are smaller than their component parts, those component parts being atoms. So, that's a physical limit.

There are plenty of other physical limits before you even get down to the singular atomic scale, but you get the point.

Yes, you can shake things up with new paradigms like quantum computing. But even if that turns out to be practical, it's more of a one-time deal or a big bang event than a process of refinement that will keep things going for decades as per Moore's Law.

The question is, then, what happens when Moore's music stops? Well, in the medium term, an end to Moore's Law might actually invigorate the computing industry.

Fast chips make for lazy coders

That's because ever faster chips allow programmers to be lazy. Perhaps 'lazy' is unfair. But the point is that with ever more performance on offer, there's often little to no pressure on coding efficiency.

Take that hardware-provided performance progress away and suddenly there'd be every reason to make the code more efficient. Indeed, competitions to run advanced code like graphics on seriously old chips happen for fun. What can be achieved is sometimes staggering.

So, there's almost definitely the equivalent of quite a few Moore's Law cycles on offer courtesy of more efficient code.
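As a back-of-the-envelope illustration of what "a few Moore's Law cycles" means, a software speedup can be converted into equivalent doublings with a base-2 logarithm. This is only a sketch: the function names are made up for the example, the 8x speedup is a hypothetical figure rather than a measurement, and it assumes (simplistically) that one doubling of transistor density is worth one doubling of performance.

```python
import math


def equivalent_moore_cycles(speedup: float) -> float:
    """Number of performance doublings that a given speedup is worth."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return math.log2(speedup)


def equivalent_years(speedup: float, doubling_period_years: float = 2.0) -> float:
    """Years of hardware progress the speedup stands in for, assuming
    one doubling every `doubling_period_years`."""
    return equivalent_moore_cycles(speedup) * doubling_period_years


# Hypothetical example: code optimised to run 8x faster.
print(equivalent_moore_cycles(8))  # 3.0
print(equivalent_years(8))         # 6.0
```

On those assumptions, an eightfold gain from better code would stand in for three cycles, or about six years of process shrinks.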

That should apply to power efficiency as much as it does to performance. And power efficiency is beginning to take over from pure performance as the most critical metric for consumer computing devices.

Better use of what we've already got

Similarly, existing transistor budgets could be used more intelligently and more efficiently to produce more performance or use less power courtesy of improved chip design. Again, the promise of ever more transistors doesn't exactly encourage a thrifty attitude to their usage.

All of which means that for the foreseeable future, an end to Moore's Law probably wouldn't make much difference to the end-user's experience of ever-improving computing.

That said, Moore's Law does actually look good for at least the next decade of process shrinks – and that's before you even take into account possible major shake-ups like optical or quantum computing, or chips based on graphene rather than traditional semiconductors.

As it stands, then, there are problems if you look beyond ten years. But it was ever thus. And like I said, if we hit the wall in terms of the physical size of chip components, there are plenty of other opportunities.


Technology and cars. Increasingly the twain shall meet. Which is handy, because Jeremy (Twitter) is addicted to both. Long-time tech journalist, former editor of iCar magazine and incumbent car guru for T3 magazine, Jeremy reckons in-car technology is about to go thermonuclear. No, not exploding cars. That would be silly. And dangerous. But rather an explosive period of unprecedented innovation. Enjoy the ride.