Google made its AI smarter by building its own custom chips

Google has been getting smarter, faster

Google believes artificial intelligence (AI) is the future, so much so that it started making its own custom processors to allow its algorithms to learn faster.

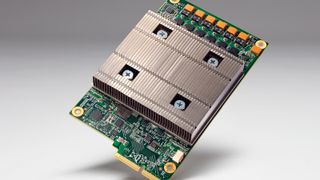

Google calls these chips Tensor Processing Units (TPUs). They are microprocessors tailored specifically for machine learning, and each one is small enough to fit in a hard drive slot in Google's data center racks.

Because machine learning tolerates lower computational precision, Google can squeeze more operations per second out of each chip. Typical processors work with 32- or 64-bit precision, whereas Google's TPU operates at 8-bit precision.
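To see why lower precision is often good enough, here is a minimal sketch of generic 8-bit quantization. It is an illustration under stated assumptions, not a description of how Google's TPU actually works: it maps floating-point numbers onto integers from -127 to 127 using a single shared scale (a simple "symmetric, per-tensor" scheme chosen for this example), multiplies and sums them as integers, and rescales the result at the end. The function names `quantize_int8` and `int8_dot` are hypothetical. An 8-bit value takes a quarter of the storage of a 32-bit one, and the answer comes out close to the full-precision result.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers using one shared scale (illustrative scheme)."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale for all-zero input
    scale = max_abs / 127.0
    # Round each value to the nearest step and clamp it to the int8 range used here.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a, b):
    """Dot product done with integer multiply-accumulate, rescaled to a float at the end."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # pure integer arithmetic
    return acc * sa * sb


# Hypothetical example data: a few neural-network weights and inputs.
weights = [0.12, -0.53, 0.91, 0.07]
inputs = [1.5, 0.25, -0.8, 2.0]

exact = sum(w * x for w, x in zip(weights, inputs))  # full floating-point result
approx = int8_dot(weights, inputs)                   # 8-bit approximation
print(f"full precision: {exact:.4f}")
print(f"8-bit:          {approx:.4f}")
```

The two printed values differ only in the third decimal place, which is the kind of small error a trained model can usually absorb.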

Google estimates its TPU chips allowed it to "fast-forward" technology by about seven years compared with using off-the-shelf processors.

So what does this all mean for you? Google apps and services like Street View and Google Now have been running on the TPU chips for a year, though the TPUs were in development for several years prior. The chips even allowed Google's AI to beat a Go world champion.

Google launched its TPU initiative in secret several years ago and only announced it today at Google I/O 2016. The company hopes developers will use machine learning to create better apps for its platforms, such as Android.
