The world’s largest chip is creating AI networks larger than the human brain

‘Extreme-scale AI’ is here

Cerebras Systems, maker of the world’s largest chip, has lifted the lid on new architecture capable of supporting AI models that outscale the human brain.

The current largest AI models (such as Switch Transformer from Google) are built on circa 1 trillion parameters, which Cerebras suggests can be compared loosely to synapses in the brain, of which there are 100 trillion.

By combining several new technologies with Wafer-Scale Engine 2 (WSE-2), the world’s largest chip, Cerebras has now created a single system capable of supporting AI models with more than 120 trillion parameters.

Brain-scale artificial intelligence

Currently, the largest AI models are powered by clusters of GPUs, which, although suited well enough to AI workloads, are designed predominantly for the demands of video games. Cerebras has opted for a different approach, developing massive individual chips designed specifically for AI.

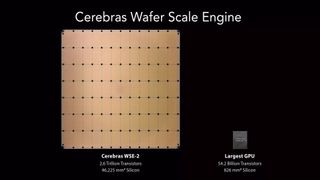

A single WSE-2 chip is the size of a wafer, 21cm wide, and is packed with 2.6 trillion transistors and 850,000 AI-optimized cores. For comparison, the largest GPU is under 3cm across and has only 54 billion transistors and 123x fewer cores.
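As a rough sanity check on the comparison above, the quoted figures can be turned into ratios. The sketch below uses only the numbers in this article; the function names are illustrative, and the GPU core count it derives is implied by the "123x fewer cores" claim rather than stated by Cerebras.

```python
# Figures quoted in the article.
WSE2_TRANSISTORS = 2.6e12   # 2.6 trillion
WSE2_CORES = 850_000
GPU_TRANSISTORS = 54e9      # 54 billion
CORE_RATIO = 123            # "123x fewer cores"


def transistor_ratio() -> float:
    """How many times more transistors WSE-2 has than the largest GPU."""
    return WSE2_TRANSISTORS / GPU_TRANSISTORS


def implied_gpu_cores() -> float:
    """GPU core count implied by the 123x claim (an inference, not a quoted spec)."""
    return WSE2_CORES / CORE_RATIO


if __name__ == "__main__":
    print(f"Transistor ratio: about {transistor_ratio():.0f}x")
    print(f"Implied GPU cores: about {implied_gpu_cores():.0f}")
```

On these numbers, the gap in core count is considerably wider than the gap in transistor count.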

By chaining together many CS-2 systems (machines powered by WSE-2) using the company’s latest interconnect technology, Cerebras can support even larger AI networks, many times the scale of the brain.

“Today, Cerebras moved the industry forward by increasing the size of the largest networks possible by 100 times,” said Andrew Feldman, Cerebras CEO. “The industry is moving past 1 trillion parameter models, and we are extending that boundary by two orders of magnitude, enabling brain-scale neural networks with 120 trillion parameters.”

Rick Stevens, Associate Director at Argonne National Laboratory, expanded on the relationship between performance and parameter volume, and the opportunities the new Cerebras system may unlock.

“The last several years have shown us that, for natural language processing (NLP) models, insights scale directly with parameters - the more parameters, the better the results,” he said.

“Cerebras’ inventions, which will provide a 100x increase in parameter capacity, may have the potential to transform the industry. For the first time, we will be able to explore brain-sized models, opening up vast new avenues of research and insight.”

New technologies

Although WSE-2 plays a fundamental role, the new CS-2 cluster system would not have been possible without a handful of new supplementary technologies: Weight Streaming, MemoryX, SwarmX and Selectable Sparsity.

Weight Streaming is described as a software execution architecture and effectively increases the potential processing power of WSE-2 chips by disaggregating memory and compute. It offers the ability to store parameters off-chip, freeing up WSE-2 capacity without incurring the kinds of latency and bandwidth issues that usually afflict cluster systems.

These parameters are stored in an external MemoryX cabinet, which supplements the 40GB of on-chip SRAM with up to 2.4PB of additional memory that - thanks to the new SwarmX interconnect fabric - behaves as if it were on-chip.
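To put the MemoryX figures in perspective, a back-of-the-envelope calculation helps. The sketch below assumes decimal units (1PB = 10^15 bytes) and divides the maximum MemoryX capacity by the 120 trillion parameter target; the resulting bytes-per-parameter budget is an inference from the quoted numbers, not a Cerebras specification.

```python
# Figures quoted in the article; decimal units are an assumption.
ON_CHIP_SRAM_BYTES = 40 * 10**9        # 40GB
MEMORYX_MAX_BYTES = 2_400 * 10**12     # 2.4PB
TARGET_PARAMETERS = 120 * 10**12       # 120 trillion


def capacity_multiple() -> float:
    """How many times larger the maximum MemoryX capacity is than on-chip SRAM."""
    return MEMORYX_MAX_BYTES / ON_CHIP_SRAM_BYTES


def bytes_per_parameter() -> float:
    """Storage budget per parameter if 120 trillion parameters fill 2.4PB."""
    return MEMORYX_MAX_BYTES / TARGET_PARAMETERS


if __name__ == "__main__":
    print(f"MemoryX is up to {capacity_multiple():,.0f}x the on-chip SRAM")
    print(f"Budget per parameter: {bytes_per_parameter():.0f} bytes")
```

Under these assumptions, each parameter has a budget of about 20 bytes, which would leave room for more than the weight value alone. What else is stored per parameter is not stated in the article.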

According to Cerebras, SwarmX allows clusters of CS-2s to achieve almost linear performance scaling (i.e. a group of 10 machines will deliver close to 10x the performance of a single CS-2) and can be used to connect up to 192 systems, making possible clusters of as many as 163 million AI-optimized cores.
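The cluster figures follow from simple multiplication, sketched below. The `efficiency` parameter is a hypothetical stand-in for "almost linear" scaling, since the article gives no measured value; 1.0 means perfectly linear.

```python
CORES_PER_CS2 = 850_000   # cores per WSE-2, as quoted in the article
MAX_SYSTEMS = 192         # maximum CS-2 systems SwarmX can connect


def total_cores(systems: int) -> int:
    """Total AI-optimized cores in a cluster of `systems` CS-2 machines."""
    if not 1 <= systems <= MAX_SYSTEMS:
        raise ValueError(f"systems must be between 1 and {MAX_SYSTEMS}")
    return systems * CORES_PER_CS2


def estimated_speedup(systems: int, efficiency: float = 1.0) -> float:
    """Speedup over one CS-2 under a simple linear model.

    `efficiency` is an assumed scaling factor, not a figure from Cerebras.
    """
    return systems * efficiency


if __name__ == "__main__":
    print(f"{total_cores(MAX_SYSTEMS):,} cores at {MAX_SYSTEMS} systems")
    print(f"{estimated_speedup(10):.1f}x with 10 systems (ideal scaling)")
```

A full 192-system cluster works out to 163.2 million cores, which matches the "163 million" figure quoted by Cerebras.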

The final piece of the puzzle is Selectable Sparsity, a new type of algorithm said to help CS-2 generate answers more quickly by cutting the amount of computational work required to reach solutions.

“This combination of technologies will allow users to unlock brain-scale neural networks and distribute work over enormous clusters of AI-optimized cores with push-button ease. With this, Cerebras sets a new benchmark in model size, compute cluster horsepower and programming simplicity,” said Cerebras.

While the pool of organizations currently capable of utilizing brain-scale AI systems is limited, Cerebras has laid the groundwork for a monumental leap in the scope and potential of AI models that could unlock opportunities beyond the realms of current imagination.

Joel Khalili is the News and Features Editor at TechRadar Pro, covering cybersecurity, data privacy, cloud, AI, blockchain, internet infrastructure, 5G, data storage and computing. He's responsible for curating our news content, as well as commissioning and producing features on the technologies that are transforming the way the world does business.
