More than chess players

"The artificial intelligence community was so impressed with the really cool algorithms they were able to come up with and these toy prototypes in the early days," explains Ferrucci.

"They were very inspiring, innovative and extremely suggestive. However, the reality of the engineering requirements and what it really takes to make this work was much harder than anybody expected."

The word 'toy' is the key one here. Ferrucci refers to a paper from 1970 called 'Reviewing the State of the Art in Automatic Questioning and Answering', which concluded that "all the systems at the time were toy systems. The algorithms were novel and interesting, but from a practical perspective they were ultimately unusable."

For example, by the 1970s computers could play chess reasonably well, which rapidly led to false expectations about AI in general. "We think of a great chess player as being really smart," says Ferrucci. "So, we then say that we have an artificially intelligent program if it can play chess."

However, Ferrucci also points out that a human characteristic that marks us out as intelligent beings is our ability to communicate using language. "Humans are so incredibly good at using context and cancelling out noise that's irrelevant and being able to really understand speech," says Ferrucci, "but just because you can speak effectively and communicate doesn't make you a super-genius."

IBM's Deep Blue computer might have beaten chess champion Garry Kasparov back in 1997, but even now computers struggle to communicate with a human through natural language.

Thinking robots

Language isn't everything when it comes to AI, though. Earlier this year, Ross King's department at Aberystwyth University demonstrated an incredible robotic machine called Adam that could make scientific discoveries by itself.

"Adam can represent science in logic," explains King, "and it can infer new hypotheses about what can possibly be true in this area of science. It uses a technique called abduction, which is like deduction in reverse. It's the type of inference that Sherlock Holmes uses when he solves problems – he thinks [about] what could possibly be true to explain the murder, and once he's inferred that then he can deduce certain things from what he's observed."

ALMOST AUTONOMOUS: Ross King's Adam machine can make scientific discoveries on its own

"Adam can then abduce hypotheses, and infer what would be efficient experiments to discriminate between different hypotheses, and whether there's evidence for them," King expands. "Then it can actually do the experiments using laboratory automation, and that's where the robots come in. It can not only work out what experiment to do; it can actually do the experiment, and it can look at the results and decide whether the evidence is consistent with the hypotheses or not."
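King's description comes down to a loop: abduce the hypotheses that would explain an observation, choose an experiment whose outcome would tell them apart, run it and discard whatever the result contradicts. The Python sketch below illustrates that loop under heavy simplifying assumptions. It is not Adam's software, which represents its science in formal logic; the rules, gene names, knockout experiments and the selection rule (pick the experiment that leaves the fewest hypotheses in the worst case) are all hypothetical.

```python
# Toy model of an abduce -> design -> experiment -> update loop.
# All rules, gene names and experiments below are hypothetical illustrations.

# Background knowledge as cause -> effect rules.
RULES = {
    "gene_A_encodes_enzyme": "grows_without_supplement",
    "gene_B_encodes_enzyme": "grows_without_supplement",
    "gene_C_encodes_enzyme": "grows_without_supplement",
    "gene_D_encodes_enzyme": "pigment_produced",
}

# Each knockout experiment maps to the hypotheses under which the
# knocked-out strain is predicted NOT to grow.
EXPERIMENTS = {
    "knock_out_A": {"gene_A_encodes_enzyme"},
    "knock_out_B": {"gene_B_encodes_enzyme"},
    "knock_out_A_and_B": {"gene_A_encodes_enzyme", "gene_B_encodes_enzyme"},
}


def abduce(observation, rules):
    """Abduction: every cause that, if true, would explain the observation."""
    return {cause for cause, effect in rules.items() if effect == observation}


def worst_case_survivors(hypotheses, predicts_no_growth):
    """How many hypotheses could survive an experiment, whichever way it turns out."""
    return max(len(hypotheses & predicts_no_growth),
               len(hypotheses - predicts_no_growth))


def discover(observation, rules, experiments, run_experiment):
    """Narrow the abduced hypotheses down by running discriminating experiments."""
    hypotheses = abduce(observation, rules)
    performed = []
    while len(hypotheses) > 1:
        # Pick the experiment that best splits the remaining hypotheses.
        name = min(experiments,
                   key=lambda e: worst_case_survivors(hypotheses, experiments[e]))
        if worst_case_survivors(hypotheses, experiments[name]) == len(hypotheses):
            break  # no experiment can tell the remaining hypotheses apart
        performed.append(name)
        if run_experiment(name):   # the knockout strain failed to grow
            hypotheses &= experiments[name]
        else:                      # the knockout strain grew
            hypotheses -= experiments[name]
    return hypotheses, performed


if __name__ == "__main__":
    truth = "gene_B_encodes_enzyme"  # hidden from the loop; only simulates the lab
    result, steps = discover("grows_without_supplement", RULES, EXPERIMENTS,
                             lambda e: truth in EXPERIMENTS[e])
    print(steps, result)
```

With gene B as the hidden truth, the loop rules out gene A with the first knockout and confirms gene B with the second. A real system would also have to cope with noisy results and the cost of each experiment, which this toy ignores.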

Adam has already successfully performed experiments on yeast, in which it discovered the purpose of 12 different genes. The full details can be found in a paper called 'The Automation of Science' in the journal Science.

King's team are now working on a new robot called Eve that can do similar tasks in the field of drug research.

Understanding language

Adam is an incredible achievement, but as King says, "the really hard problems you see are to do with humans interacting. One of the advantages with science as a domain is that you don't have to worry about that. If you do an experiment, it doesn't try to trick you on purpose."

Getting a computer to communicate with a human is a definite struggle, but it's a field that's progressing. As a case in point, the chatbot Jabberwacky gets better at communicating every day. I log into it, and it asks if I like Star Wars. I tell it that I do, and ask the same question back. Jabberwacky tells me that it does like Star Wars. "Why?" I ask.

"It's a beautiful exploration, especially for the mainstream, of dominance and submission," it says. I think I smell a rat, and I ask Jabberwacky's creator Rollo Carpenter what's going on. "None of the answers are programmed," claims Carpenter. "They're all learned."

Jabberwacky thrives on constant input from users, which it analyses and stores in its database. "The first thing the AI said was what I had just said to it," explains Carpenter. Twelve years later, that database holds over 19 million entries.

With more input, Jabberwacky can use machine learning to discover more places where certain sentences are appropriate. Its opinion on Star Wars was a response from a previous user that it quoted verbatim at the appropriate time.
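Carpenter's description suggests a learning loop in which every reply is something a previous user once typed. A minimal Python sketch, assuming the simplest possible scheme (exact-match lookup keyed on the bot's previous line, echoing the user when nothing has been learned yet), might look like this. The EchoLearner class and its storage layout are hypothetical; Jabberwacky's real matching weighs far more context.

```python
from collections import defaultdict


class EchoLearner:
    """Toy learning chatbot (hypothetical): it only ever says things users have said."""

    def __init__(self):
        # Maps a line the bot said to the replies humans have given to it.
        self.memory = defaultdict(list)
        self.last_bot_line = None

    def respond(self, user_line):
        # Learn: the user's line is a human reply to whatever the bot said last.
        if self.last_bot_line is not None:
            self.memory[self.last_bot_line].append(user_line)
        # Answer: reuse, verbatim, the latest reply a human gave to this same line.
        replies = self.memory.get(user_line)
        reply = replies[-1] if replies else user_line  # nothing learned yet: echo it
        self.last_bot_line = reply
        return reply


if __name__ == "__main__":
    bot = EchoLearner()
    print(bot.respond("Do you like Star Wars?"))   # echoes: nothing learned yet
    print(bot.respond("Yes, it's a great film."))  # stored as a reply to the question
    print(bot.respond("Do you like Star Wars?"))   # answers with the stored reply
```

After one user answers the echoed question with "Yes, it's a great film.", the next person to ask "Do you like Star Wars?" gets that sentence back word for word: a toy version of the verbatim quoting described above.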

The smart part here isn't what it says, but knowing the context in which a sentence is appropriate. However, Carpenter is confident that it will soon evolve beyond regurgitating sentences verbatim.

"The generation of all sentences will come quite soon," says Carpenter. "It's already in use in our commercial AI scripting tools, and will be applied to the learning AI."

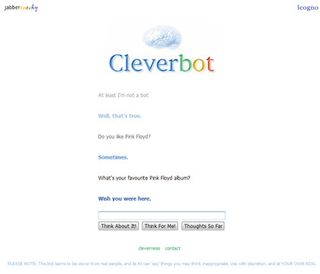

Carpenter's latest project is Cleverbot, which uses a slightly different technique for understanding language.

HIT AND MISS: Cleverbot sometimes says inappropriate things, but on occasions it's indistinguishable from a human

"Jabberwacky uses search techniques," explains Carpenter, "whittling down selections ever-smaller for numerous and ever-more contextual reasons until a final decision is made. Cleverbot uses fuzzy string comparison techniques to look into what's been said and their contexts in more depth. When responses appear planned or intelligent, it's always because of these universal contextual techniques, rather than programmed planning or logic."
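Python's standard difflib module provides one ready-made fuzzy string measure, SequenceMatcher.ratio(). The sketch below uses it to choose a stored reply whose prompt, and the line that preceded it, most resemble the current exchange, so a typo such as "Star Warz" still finds the right entry. This illustrates the general idea only: the article doesn't say which comparison Cleverbot uses, and the (context, prompt, reply) memory layout, the 0.3 context weight and the 0.6 cutoff are assumptions.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Fuzzy similarity between two strings, from 0.0 (unrelated) to 1.0 (identical)."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def fuzzy_reply(user_line, previous_line, memory, context_weight=0.3, cutoff=0.6):
    """Pick the learned reply whose prompt and preceding context best match now.

    memory holds (context, prompt, reply) triples: `prompt` is something a
    user once said, `context` the line before it, `reply` what came next.
    """
    best_reply, best_score = None, cutoff
    for context, prompt, reply in memory:
        score = ((1 - context_weight) * similarity(user_line, prompt)
                 + context_weight * similarity(previous_line, context))
        if score > best_score:
            best_reply, best_score = reply, score
    return best_reply  # None if nothing clears the cutoff


# Hypothetical learned memory.
MEMORY = [
    ("Hello.", "Do you like Star Wars?", "Yes, I love the original trilogy."),
    ("Hello.", "What is your name?", "My name is not important."),
]

if __name__ == "__main__":
    print(fuzzy_reply("Do you like Star Warz?", "Hello.", MEMORY))
```

Weighting the preceding line as well as the prompt is one simple way to make "their contexts" count; returning None lets a caller fall back to some other strategy when nothing is similar enough.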

So convincing is Cleverbot that Carpenter regularly gets emails from people who suspect that a real person occasionally takes the chatbot's place. Cleverbot's answers aren't always convincing, but Carpenter's techniques won him the Loebner Prize for the 'most humanlike' AI in 2005 and 2006.

It's elementary

However, perhaps the biggest milestone when it comes to natural language is IBM's massive Watson project, which Ferrucci says uses "about 1,000 compute nodes, each of which has four cores".

The huge amount of parallelisation is needed because of the intensive searches Watson initiates to find its answers. Watson's knowledge comes from dictionaries, encyclopedias and books, but IBM wanted to shift the focus away from databases and towards processing natural language.

"The underlying technology is called DeepQA," explains Ferrucci. "You can do a grammatical parse of the question and try to identify the main verb and the auxiliary verbs. It then looks for an answer, so it does many searches."

Each search returns big lists of possibly relevant passages, documents and facts, each of which could have several possible answers to the question. This could mean that there are hundreds of potential answers to the question.

Watson then has to analyse them using statistical weights to work out which answer is most appropriate. "With each one of those answers, it searches for additional evidence from existing structured or unstructured sources that would support or refute those answers, and the context," says Ferrucci.
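The generate-then-score pattern Ferrucci describes can be illustrated with a toy Python sketch: pool candidate answers from several search hits, then merge each candidate's evidence features into a single confidence with a weighted logistic function. The search results, feature names ("search_hits", "type_match") and hand-set weights are hypothetical; a real system would learn such weights from past questions and combine many more evidence scores.

```python
import math
from collections import Counter


def generate_candidates(search_results):
    """Pool candidate answers from every search hit, counting how many hits propose each."""
    return Counter(c for hit in search_results for c in hit["candidates"])


def score_candidates(search_results, evidence, weights, bias):
    """Merge evidence features into a 0-1 confidence per candidate (logistic model)."""
    ranked = []
    for candidate, hits in generate_candidates(search_results).items():
        features = {"search_hits": hits, **evidence.get(candidate, {})}
        z = bias + sum(weights[name] * value for name, value in features.items())
        ranked.append((candidate, 1 / (1 + math.exp(-z))))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical data for the clue "This desert covers 80 per cent of Algeria".
SEARCH_RESULTS = [
    {"source": "encyclopedia", "candidates": ["Sahara", "Algeria"]},
    {"source": "atlas", "candidates": ["Sahara", "Atlas Mountains"]},
    {"source": "news", "candidates": ["Algiers"]},
]
EVIDENCE = {  # 1.0 if the candidate is the kind of thing the clue asks for (a desert)
    "Sahara": {"type_match": 1.0},
    "Algeria": {"type_match": 0.0},
}
WEIGHTS = {"search_hits": 1.0, "type_match": 3.0}
BIAS = -3.0

if __name__ == "__main__":
    for answer, confidence in score_candidates(SEARCH_RESULTS, EVIDENCE, WEIGHTS, BIAS):
        print(f"{answer}: {confidence:.2f}")
```

Here "Sahara" wins because two hits propose it and it matches the answer type the clue asks for, giving a confidence of about 0.88; each of the other candidates scores about 0.12.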

Once it has its answer, Watson speaks it back to you with a form of voice synthesis, putting together the various sounds of human speech (phonemes) to make the sound of the words that it's retrieved from its language documents. In order to succeed in the Jeopardy! challenge, Watson has to buzz in and speak its answer intelligibly before its human opponents.
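Building speech from stored phoneme sounds can be sketched as concatenative synthesis: look up each word's phonemes in a pronunciation lexicon, then join the matching stored units end to end. In this toy Python version the three-word lexicon, the ARPAbet-style symbols, the unit and pause lengths, and the "recordings" (short synthetic tones standing in for real audio) are all hypothetical placeholders.

```python
import math

SAMPLE_RATE = 16_000   # samples per second (assumed)
UNIT_SAMPLES = 1_280   # 80 ms per phoneme unit (assumed fixed length)
PAUSE_SAMPLES = 800    # 50 ms of silence between words (assumed)

# Hypothetical mini pronunciation lexicon with ARPAbet-style symbols.
LEXICON = {
    "what": ["W", "AH", "T"],
    "is": ["IH", "Z"],
    "the": ["DH", "AH"],
}


def placeholder_unit(phoneme):
    """Stand-in for a recorded phoneme: a short tone whose pitch depends on the symbol."""
    freq = 200 + 20 * (sum(map(ord, phoneme)) % 20)
    return [math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(UNIT_SAMPLES)]


# One stored "recording" per phoneme used in the lexicon.
UNITS = {p: placeholder_unit(p) for phones in LEXICON.values() for p in phones}


def synthesize(text):
    """Look up each word's phonemes and concatenate the matching units."""
    samples = []
    for k, word in enumerate(text.lower().strip("?.!").split()):
        if k:
            samples.extend([0.0] * PAUSE_SAMPLES)  # gap between words
        for phoneme in LEXICON[word]:
            samples.extend(UNITS[phoneme])
    return samples


if __name__ == "__main__":
    audio = synthesize("What is the")
    print(len(audio) / SAMPLE_RATE, "seconds of audio")
```

Real concatenative synthesisers typically draw on large databases of recorded speech, use units longer than single phonemes (such as diphones) and smooth the joins; this sketch only shows the lookup-and-concatenate step.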

Not only that, but it has to be confident enough in its answer: if its confidence falls below a threshold, it won't buzz in.
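The buzz decision itself can be sketched as a simple threshold test on the best candidate's confidence. The (answer, confidence) input format, the decide function and the default threshold of 0.5 are assumptions; the article doesn't give the cut-off Watson used.

```python
def decide(ranked, threshold=0.5):
    """Return the answer to buzz in with, or None to stay silent.

    ranked: (answer, confidence) pairs, confidences between 0 and 1.
    """
    if not ranked:
        return None
    answer, confidence = max(ranked, key=lambda pair: pair[1])
    return answer if confidence >= threshold else None


if __name__ == "__main__":
    print(decide([("Sahara", 0.88), ("Algeria", 0.12)]))  # confident: buzz
    print(decide([("Sahara", 0.40)]))                     # not confident: stay silent
```

Raising the threshold makes the player more cautious, trading fewer wrong answers for more missed chances to answer.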

Watson doesn't always get it right, but it often comes close. On CNN, the computer was asked which desert covers 80 per cent of Algeria. Watson replied "What is Sahara?" The answer was correct and intelligible, but awkwardly phrased: a human contestant would have said "What is the Sahara?"

The future

As you can see, we're still a long way from creating HAL, or even passing the Turing Test, but the experts are still confident that this will happen. Ross King says that this is 50 years away, but David Ferrucci says that 50 years would be his "most pessimistic" guess.

His optimistic guess is 10 years, but he adds that "we don't want a repeat of when AI set all the wrong expectations. We want to be cautious, but we also want to be hopeful, because if the community worked together it could surprise itself with some really interesting things."

The AI community is currently divided into specialist fields, but Ferrucci is confident that if everyone worked together, a realistic AI that could pass the Turing Test would arrive much sooner.

"We need to work together, and hammer out a general-purpose architecture that solves a broad class of problems," says Ferrucci. "That's hard to do. It requires many people to collaborate, and one of the most difficult things to do is to get people to decide on a single architecture, but you have to because that's the only way you're going to advance things."

The question is whether that's a worthwhile project, given everybody's individual goals, but Ferrucci thinks that's our best shot. Either way, although the timing of the early visionaries' predictions was off by a fair way, the AI community still looks set to meet those predictions later this century.